The world of computer graphics is a fascinating one, and at the heart of it all is the Graphics Processing Unit (GPU). A GPU architect is the mastermind behind the design and development of these powerful graphics cards. In this article, we will take a deep dive into the world of GPU architecture and explore the many roles and responsibilities of a GPU architect. From concept to creation, we will uncover the intricate details of what goes into designing a graphics card and the challenges that a GPU architect faces along the way. So, buckle up and get ready to explore the exciting world of GPU architecture!

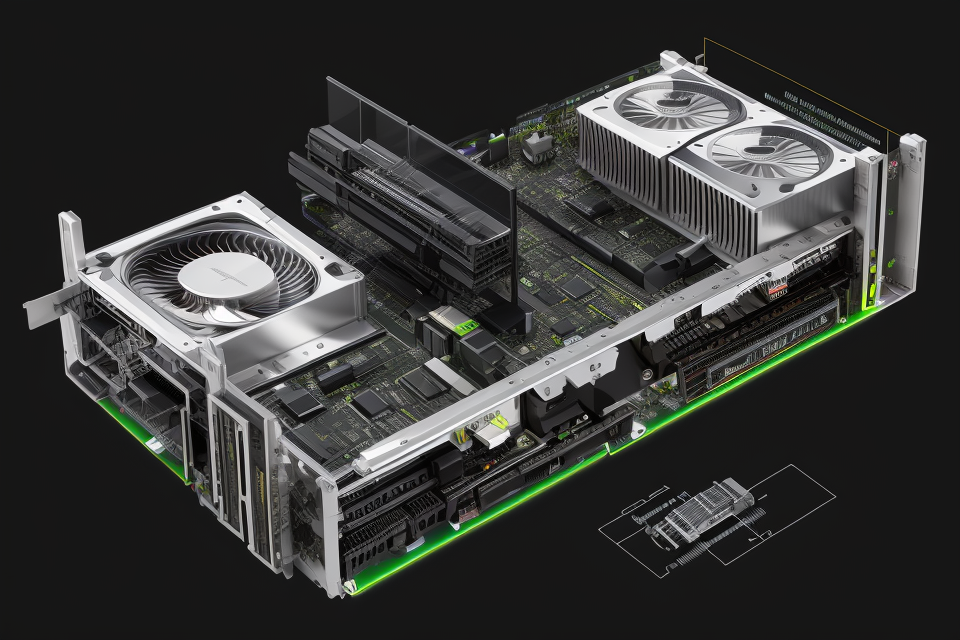

Understanding Graphics Card Architecture

What is a Graphics Processing Unit (GPU)?

A Graphics Processing Unit (GPU) is a specialized processor designed to handle the calculations required to render images and animations for graphics-intensive applications such as video games, movies, and architectural visualizations. A Central Processing Unit (CPU) devotes much of its chip area to running a few threads of general-purpose code as quickly as possible. A GPU, by contrast, is optimized for parallel throughput: it runs thousands of threads at once, each performing similar calculations on different pieces of data.

The primary graphics function of a GPU is to execute the rendering commands issued by graphics software, turning the software’s description of a scene into the pixels that are sent to a display device. This involves transforming geometric objects, applying colors and textures, and calculating lighting effects.
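
Two of the steps named above, transforming geometry and calculating lighting, can be sketched in a few lines of Python. This is a minimal CPU-side illustration that rests on a few assumptions: a row-major 4x4 matrix, a single directional light, and Lambertian (diffuse) shading. The function names `transform_vertex` and `lambert` are hypothetical. A real GPU runs equivalent code as vertex and pixel shaders across thousands of vertices and pixels in parallel.

```python
import math

def transform_vertex(m, v):
    """Multiply a row-major 4x4 matrix by the homogeneous vertex (x, y, z, 1),
    then apply the perspective divide (hypothetical helper for illustration)."""
    x, y, z = v
    clip = [m[r][0] * x + m[r][1] * y + m[r][2] * z + m[r][3] for r in range(4)]
    w = clip[3]
    return (clip[0] / w, clip[1] / w, clip[2] / w)

def lambert(normal, light_dir, base_color):
    """Diffuse (Lambertian) shading: brightness is proportional to the cosine
    of the angle between the surface normal and the light direction."""
    def normalize(vec):
        length = math.sqrt(sum(c * c for c in vec))
        return tuple(c / length for c in vec)
    n, l = normalize(normal), normalize(light_dir)
    intensity = max(0.0, sum(a * b for a, b in zip(n, l)))  # clamp back-facing light
    return tuple(c * intensity for c in base_color)

translate_x = [[1, 0, 0, 1], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
print(transform_vertex(translate_x, (1, 2, 3)))         # (2.0, 2.0, 3.0)
print(lambert((0, 0, 1), (0, 0, 1), (1.0, 0.5, 0.25)))  # (1.0, 0.5, 0.25)
```

The translation matrix moves the vertex one unit along x. A light shining straight along the surface normal leaves the base color unchanged, and a light at 90 degrees to the normal contributes nothing.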

A GPU is composed of many small processing cores. These are grouped into larger units, which NVIDIA calls Streaming Multiprocessors (SMs) and AMD calls Compute Units (CUs), and the units work together to perform the calculations required to render images. Each SM can keep many threads in flight at once. With many SMs on a chip, a high-end GPU can perform trillions of arithmetic operations per second.
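
The following Python sketch shows, in simplified form, how a large job is divided up. The job here is shading every pixel of a 1920x1080 frame. Each pixel gets one thread, and threads are grouped into blocks. Each block is split into warps of 32 threads, which is NVIDIA's scheduling unit (AMD's equivalent, the wavefront, is 32 or 64 threads wide). The blocks are then spread across the SMs. Three details are assumptions chosen for illustration: the round-robin assignment, the block size of 256, and the count of 80 SMs. Real GPUs schedule blocks dynamically as SMs become free, and `launch_layout` is a hypothetical helper.

```python
import math

WARP_SIZE = 32  # NVIDIA groups threads into warps of 32 (AMD wavefronts are 32 or 64)

def launch_layout(n_items, threads_per_block, n_sms):
    """Split n_items (one thread each) into blocks and deal the blocks out to
    SMs round-robin. Simplification: real hardware schedules dynamically."""
    n_blocks = math.ceil(n_items / threads_per_block)
    warps_per_block = math.ceil(threads_per_block / WARP_SIZE)
    blocks_per_sm = [0] * n_sms
    for b in range(n_blocks):
        blocks_per_sm[b % n_sms] += 1
    return n_blocks, warps_per_block, blocks_per_sm

# One thread per pixel of a 1080p frame on a hypothetical 80-SM GPU.
n_blocks, warps, per_sm = launch_layout(1920 * 1080, threads_per_block=256, n_sms=80)
print(n_blocks, warps, max(per_sm), min(per_sm))  # 8100 8 102 101
```

The 8,100 blocks do not divide evenly across 80 SMs, so 20 SMs receive 102 blocks and the other 60 receive 101.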

GPU architectures are also designed to scale. The same basic design can be built with more or fewer of these processing units, which lets it serve devices ranging from mobile phones to desktop computers. GPUs come in two broad forms. Integrated GPUs (iGPUs) share a chip or package, and system memory, with the CPU. Discrete GPUs (dGPUs) sit on a separate graphics card with their own dedicated memory.

Overall, a GPU offloads complex graphics calculations from the CPU, allowing the CPU to focus on other tasks, such as running the operating system and applications. By leveraging the power of parallel processing, GPUs are able to render images and animations at high speeds, making them an essential component of modern computing devices.

The Evolution of GPUs: From 2D to 3D Acceleration

The evolution of GPUs (Graphics Processing Units) has been a fascinating journey, from their initial introduction as simple 2D rendering devices to their current role as sophisticated 3D acceleration engines. In this section, we will delve into the historical milestones that have shaped the development of GPUs, highlighting the key technological advancements that have led to their widespread adoption in various industries.

Early 2D Graphics Accelerators

The earliest graphics accelerators appeared in the late 1980s and were designed for 2D graphics. They predate the term "GPU", which NVIDIA popularized with its GeForce 256 in 1999. These accelerators were primarily used in professional settings, such as computer-aided design (CAD) and digital publishing, where they significantly improved the speed and quality of raster graphics rendering.

The Emergence of 3D Acceleration

The 1990s saw the rise of 3D graphics and the demand for more sophisticated GPUs capable of handling complex 3D scenes. This era began with consumer 3D accelerators such as 3dfx’s Voodoo Graphics and NVIDIA’s Riva 128, together with 3D graphics APIs such as 3dfx’s Glide, OpenGL, and Microsoft’s Direct3D. These early 3D accelerators used fixed-function hardware for steps such as triangle setup and rasterization to render 3D scenes on a 2D display.

Transition to Programmable Pipelines

The early 2000s brought a significant shift in GPU architecture with the introduction of programmable pipelines. This innovation made GPUs more flexible and adaptable to different types of applications, leading to a broader range of use cases. With programmable pipelines, developers could write shaders, small programs that run on the GPU for every vertex or pixel. Shaders let developers implement custom lighting and surface effects instead of relying on fixed-function hardware.

Shader Model and Unified Graphics

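Microsoft introduced the Shader Model with DirectX 8 in 2000 and substantially extended it with DirectX 9 in 2002. It advanced the capabilities of GPUs by standardizing the programming interface for vertex and pixel shaders. Shaders written against a given Shader Model could run on any compliant GPU, so developers could target hardware from multiple vendors with a single code path. Shader Model 4.0 (DirectX 10) later gave all shader stages a common set of capabilities, a step toward a more unified graphics pipeline.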

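Unified shader architectures arrived in the mid-2000s with ATI’s Xenos GPU for the Xbox 360 and NVIDIA’s G80 (GeForce 8800). Instead of dedicated units for each shader type, these designs use a single pool of programmable cores for vertex, pixel, and other shader work. NVIDIA then introduced CUDA (Compute Unified Device Architecture), which exposed those general-purpose cores to ordinary programs and marked another milestone in GPU evolution. CUDA enabled the use of GPUs for general-purpose computing, opening up new possibilities for applications beyond traditional graphics rendering. This breakthrough paved the way for the widespread adoption of GPUs in fields such as scientific simulations, deep learning, and cryptocurrency mining.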

The Age of Ray Tracing and Realism

More recently, real-time ray tracing has become a focus of GPU innovation. Ray tracing is a technique used to simulate the behavior of light in a scene, producing more accurate reflections, shadows, and lighting than traditional rasterization methods. NVIDIA and AMD now ship GPUs with hardware-accelerated ray tracing, NVIDIA starting with its Turing-based GeForce RTX 20 series in 2018 and AMD with its RDNA 2 architecture in 2020. This hardware enables highly realistic and immersive virtual environments in video games and other applications.

In conclusion, the evolution of GPUs from simple 2D rendering devices to sophisticated 3D acceleration engines has been driven by the constant pursuit of improved performance and capabilities. The ongoing advancements in GPU technology have enabled their widespread adoption across various industries, from gaming and entertainment to scientific simulations and artificial intelligence.

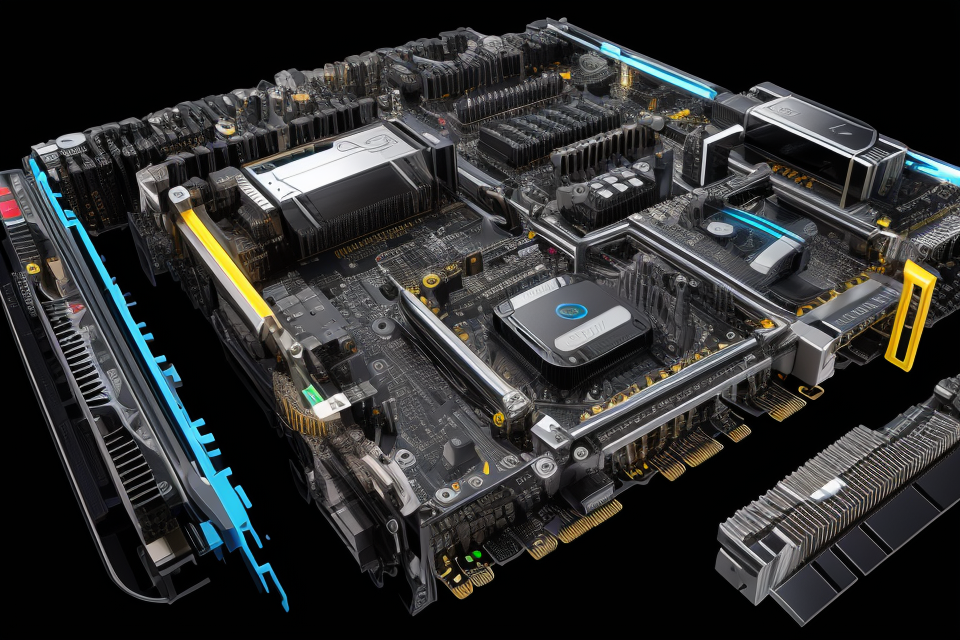

GPU Architecture: Pipelining, Caches, and Parallel Processing

GPU architecture plays a crucial role in determining the performance and efficiency of graphics cards. Three key ideas in GPU architecture are pipelining, caches, and parallel processing.

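Pipelining is a technique used in GPU architecture to improve throughput. It splits a complex task, such as rendering a triangle, into a sequence of simpler stages, and each stage works on a different item at the same time, much like an assembly line. No single item finishes faster. Once the pipeline is full, however, results emerge at the rate of the slowest stage rather than after the combined time of all stages.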
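
Counting cycles is a quick way to see why pipelining helps. The sketch below assumes an idealized pipeline in which every stage takes exactly one cycle and nothing stalls. Real GPU pipelines have stages of different lengths and occasional stalls, so treat the numbers as a best case. Both function names are hypothetical.

```python
def unpipelined_cycles(n_items, n_stages):
    """Each item passes through every stage before the next item starts."""
    return n_items * n_stages

def pipelined_cycles(n_items, n_stages):
    """The first item needs n_stages cycles to fill the pipeline; after that,
    one item completes per cycle because each stage holds a different item.
    Assumes one cycle per stage and no stalls."""
    return n_stages + n_items - 1 if n_items > 0 else 0

print(unpipelined_cycles(1000, 5), pipelined_cycles(1000, 5))  # 5000 1004
```

With 1,000 items and 5 stages, the pipeline needs 1,004 cycles instead of 5,000. As the item count grows, the speedup approaches the number of stages.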

Caches are another important component of GPU architecture. They are small, fast on-chip memories that hold recently or frequently used data and instructions. When the data a GPU needs is already in a cache, the GPU avoids a much slower trip to off-chip video memory, which reduces the time it takes to complete tasks.
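
The following Python sketch illustrates how a cache cuts memory trips. It replays a list of byte addresses through a small, fully associative cache that uses least-recently-used (LRU) replacement, then reports the hit rate. The 64-byte line size, the LRU policy, and full associativity are simplifying assumptions. Real GPU caches differ in size, associativity, and replacement policy, and `lru_hit_rate` is a hypothetical helper.

```python
from collections import OrderedDict

def lru_hit_rate(addresses, capacity_lines, line_size=64):
    """Replay byte addresses through a fully associative LRU cache
    (assumed 64-byte lines) and return the fraction that hit."""
    cache = OrderedDict()  # cache line number -> present; order tracks recency
    hits = 0
    for addr in addresses:
        line = addr // line_size
        if line in cache:
            hits += 1
            cache.move_to_end(line)        # mark as most recently used
        else:
            cache[line] = True
            if len(cache) > capacity_lines:
                cache.popitem(last=False)  # evict the least recently used line
    return hits / len(addresses) if addresses else 0.0

sequential = list(range(0, 4096, 4))             # 4 KB read in 4-byte steps
print(lru_hit_rate(sequential, capacity_lines=8))  # 0.9375
loop = [line * 64 for line in range(8)] * 2      # 8 lines, visited twice
print(lru_hit_rate(loop, capacity_lines=8))        # 0.5
print(lru_hit_rate(loop, capacity_lines=4))        # 0.0
```

In the sequential read, each 64-byte line is fetched once and then reused 15 times. The looped working set fits in an 8-line cache, so the second pass hits every time. The same loop thrashes a 4-line cache, because LRU evicts each line just before it is needed again.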

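Parallel processing is a key feature of GPU architecture that allows many operations to proceed simultaneously. Graphics workloads apply the same operations to huge numbers of independent elements, such as vertices and pixels. A GPU therefore runs thousands of threads at once and executes groups of them in lockstep on different data, a model NVIDIA calls SIMT (single instruction, multiple threads). By processing many elements at the same time, graphics cards can significantly increase their performance and efficiency.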

Overall, the combination of pipelining, caches, and parallel processing allows GPU architects to design graphics cards that are capable of processing complex tasks quickly and efficiently.

The Role of a GPU Architect in Graphics Card Development

A GPU architect plays a crucial role in the development of graphics cards. The role of a GPU architect can be broken down into several key responsibilities:

- Designing the Graphics Processing Unit (GPU): The GPU architect is responsible for designing the GPU, which is the core component of a graphics card. The GPU is responsible for rendering images and processing complex calculations. The GPU architect must ensure that the GPU is designed to be efficient, powerful, and capable of handling the demands of modern games and applications.

- Optimizing Performance: The GPU architect must optimize the performance of the GPU. This involves designing the GPU to be as efficient as possible, minimizing power consumption, and reducing heat output. The GPU architect must also work with the driver and compiler teams so that the software controlling the GPU makes full use of the hardware.

- Collaborating with Other Teams: The GPU architect must collaborate with other teams, such as the hardware engineering team, to ensure that the GPU is compatible with other components of the graphics card. The GPU architect must also work closely with software developers to ensure that the software running on the graphics card is optimized for the GPU.

- Staying Current with Technology: The GPU architect must stay current with the latest technology and trends in the field of graphics processing. This involves staying up-to-date with advancements in materials science, computer engineering, and software development. The GPU architect must also be aware of the competition and ensure that the graphics card is competitive in terms of performance and price.

Overall, the role of a GPU architect is complex and multifaceted. The GPU architect must have a deep understanding of graphics processing, computer engineering, and software development. They must be able to design and optimize the GPU, collaborate with other teams, and stay current with the latest technology and trends in the field.

GPU Architecture Design Principles

Balancing Performance and Power Consumption

When designing GPU architecture, a critical consideration is balancing performance and power consumption. A GPU architect must strike a balance between increasing the number of cores, increasing clock speeds, and reducing power consumption. The challenge lies in maximizing performance while minimizing power consumption. Higher performance typically requires more power, which raises operating temperatures and can shorten component lifespan.

To achieve this balance, GPU architects use various techniques, such as reducing wasted switching activity, optimizing clock speeds, and improving memory efficiency. Clock gating stops the clock to circuits that are idle, and power gating switches off unused blocks entirely. Both cut power consumption without lowering peak performance. Optimizing clock speeds can improve performance without significantly increasing power consumption. Improving memory efficiency also helps, because moving data to and from memory costs a significant amount of energy.

Another technique used by GPU architects to balance performance and power consumption is dynamic voltage and frequency scaling (DVFS). DVFS allows the GPU to adjust its voltage and frequency in real time based on the workload, lowering both when the workload is light and raising them when it is heavy. The dynamic power of CMOS circuits scales roughly with the supply voltage squared times the clock frequency. As a result, running slower at a lower voltage saves proportionally more power than it costs in speed. This technique can help optimize performance while keeping power consumption within acceptable limits.
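
The relationship between voltage, frequency, and power can be sketched numerically. The Python below uses the standard first-order model for dynamic CMOS power, P = C·V²·f, with the activity factor folded into the switched capacitance C. It also includes a toy governor that picks the slowest performance state (P-state) able to meet the current demand. The P-state table, the capacitance value, and the function names are hypothetical. Real DVFS controllers also weigh temperature, power limits, and how quickly demand changes.

```python
# Hypothetical performance states: (clock frequency in MHz, core voltage in volts).
P_STATES = [(600, 0.70), (1200, 0.85), (1800, 1.00), (2400, 1.10)]

def dynamic_power_watts(freq_mhz, volts, switched_capacitance_f=1e-9):
    """First-order dynamic power model P = C * V^2 * f, in watts.
    The activity factor is folded into the (hypothetical) capacitance."""
    return switched_capacitance_f * volts ** 2 * freq_mhz * 1e6

def pick_p_state(demand_mhz):
    """Return the slowest P-state that still meets the demanded clock rate,
    or the fastest state if the demand exceeds all of them."""
    for freq_mhz, volts in P_STATES:
        if freq_mhz >= demand_mhz:
            return freq_mhz, volts
    return P_STATES[-1]

light = pick_p_state(1000)  # (1200, 0.85)
heavy = pick_p_state(3000)  # (2400, 1.1): demand capped at the top state
ratio = dynamic_power_watts(*heavy) / dynamic_power_watts(*light)
print(f"2x the clock costs {ratio:.2f}x the dynamic power")
```

In this model, doubling the clock from 1,200 to 2,400 MHz costs roughly 3.35 times the dynamic power. The higher clock also needs a higher voltage, and voltage enters the formula squared.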

In summary, balancing performance and power consumption is a critical consideration for GPU architects when designing graphics card architecture. To achieve this balance, they use techniques such as clock and power gating, optimized clock speeds, improved memory efficiency, and dynamic voltage and frequency scaling.

Optimizing Memory Bandwidth and Efficiency

GPU architects are responsible for designing and optimizing the memory bandwidth and efficiency of graphics cards. This involves a deep understanding of the underlying hardware and software systems, as well as the ability to balance performance and power consumption.

Maximizing Memory Bandwidth

One of the key challenges in GPU architecture is maximizing memory bandwidth. This refers to the rate at which data can be transferred between the GPU and memory. The higher the memory bandwidth, the faster the GPU can access the data it needs to render images and perform other tasks.
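
Peak memory bandwidth follows from simple arithmetic. Multiply the width of the memory bus in bits by the data rate of each pin, then divide by 8 to convert bits to bytes. The sketch below computes this theoretical peak; sustained bandwidth in real workloads is lower. The example bus widths and data rates are illustrative and not tied to a specific product, and the function name is hypothetical.

```python
def peak_bandwidth_gb_s(bus_width_bits, gbps_per_pin):
    """Theoretical peak bandwidth in GB/s: each of bus_width_bits pins moves
    gbps_per_pin gigabits per second, and 8 bits make a byte."""
    return bus_width_bits * gbps_per_pin / 8

print(peak_bandwidth_gb_s(384, 21))  # 1008.0
print(peak_bandwidth_gb_s(128, 18))  # 288.0
```

Both a wider bus and a higher per-pin data rate increase bandwidth. A wider bus, however, costs more pins, board area, and memory chips, which is one of the trade-offs architects weigh.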

To maximize memory bandwidth, GPU architects must carefully design the memory hierarchy of the graphics card. This includes the size and speed of the various memory caches, as well as the bandwidth of the main memory.

Optimizing Memory Efficiency

In addition to maximizing memory bandwidth, GPU architects must also optimize memory efficiency. This involves minimizing the amount of memory required to perform a given task, as well as reducing the number of memory accesses required.

One approach to optimizing memory efficiency is to use compressed data formats. Block-compressed texture formats shrink the memory footprint of textures. Lossless compression of color and depth data reduces the number of bytes that must cross the memory bus on each read or write. This can lead to significant performance improvements, particularly in applications that process large amounts of data.
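
The Python sketch below is a simplified illustration of lossless compression. It encodes a tile of 8-bit pixel values as one base value plus small per-pixel deltas, and it falls back to raw storage when that would not save space. Real GPU compression schemes are more sophisticated and largely undocumented. The encoding, the 4-bit width field, and the function names here are assumptions made for illustration only.

```python
def compress_tile(values, bits_per_value=8, width_field_bits=4):
    """Base-plus-delta encoding of one tile of unsigned pixel values
    (a hypothetical scheme). Returns (encoded, size_in_bits); encoded is
    None when the tile is stored raw because compression would not help."""
    base = min(values)
    deltas = [v - base for v in values]
    delta_bits = max(d.bit_length() for d in deltas)  # 0 if all values are equal
    # Header: the base value plus a small field recording the delta width.
    compressed_bits = bits_per_value + width_field_bits + delta_bits * len(values)
    raw_bits = bits_per_value * len(values)
    if compressed_bits >= raw_bits:
        return None, raw_bits
    return (base, deltas), compressed_bits

def decompress_tile(encoded):
    """Rebuild the original pixel values from a compressed tile."""
    base, deltas = encoded
    return [base + d for d in deltas]

gradient = list(range(100, 116))  # smooth 16-pixel tile
encoded, size = compress_tile(gradient)
print(size, decompress_tile(encoded) == gradient)  # 76 True
print(compress_tile([0, 255] * 8)[1])              # 128 (stored raw)
```

The smooth gradient shrinks from 128 bits to 76. The high-contrast tile gains nothing and stays raw, which is why schemes of this kind help most on smooth image regions.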

Another approach to optimizing memory efficiency is to make better use of the memory hierarchy by keeping reused data in on-chip caches and shared memory. A related approach is to prefetch data before it is needed. These techniques reduce the number of slow accesses to off-chip memory and hide their latency, improving overall performance.
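
Memory coalescing is a closely related technique. When neighboring threads read neighboring addresses, the hardware can serve the whole group with a few wide memory transactions. The Python sketch below counts how many aligned memory segments a group of 32 threads touches. The 32-byte segment size and 4-byte elements are assumptions for illustration, and `transactions` is a hypothetical helper.

```python
WARP_SIZE = 32      # threads that issue a memory instruction together (assumed)
ELEMENT_BYTES = 4   # one float32 per thread
SEGMENT_BYTES = 32  # assumed size of one aligned memory transaction

def transactions(addresses, segment_bytes=SEGMENT_BYTES):
    """Number of distinct aligned segments the addresses fall into."""
    return len({addr // segment_bytes for addr in addresses})

# Thread t reads element t: consecutive addresses share segments.
contiguous = [t * ELEMENT_BYTES for t in range(WARP_SIZE)]
# Thread t reads element 32*t: every address lands in its own segment.
strided = [t * 32 * ELEMENT_BYTES for t in range(WARP_SIZE)]

print(transactions(contiguous), transactions(strided))  # 4 32
```

Both patterns read the same 128 bytes of useful data. The strided pattern, however, needs eight times as many transactions, and most of the bytes it fetches go unused.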

Overall, optimizing memory bandwidth and efficiency is a critical aspect of GPU architecture design. By carefully balancing these factors, GPU architects can create graphics cards that deliver high performance while minimizing power consumption and memory usage.

Ensuring Compatibility with a Wide Range of Applications

When designing GPU architecture, one of the primary objectives is to ensure compatibility with a wide range of applications. This requires the GPU architect to carefully consider the various programming models and APIs that developers may use to access the GPU’s computational power. Here are some of the key factors that a GPU architect needs to consider when designing for compatibility with a wide range of applications:

- Hardware abstraction: To ensure compatibility, the GPU architect must design the hardware so that drivers and standard APIs, such as DirectX, Vulkan, OpenGL, and CUDA, can expose it without revealing its low-level details to the application programmer. This allows developers to write their code using high-level programming models and APIs, without needing to worry about the low-level details of the hardware.

- Efficient utilization of GPU resources: The GPU architect must design the hardware in a way that allows for efficient utilization of GPU resources, such as memory and compute units. This requires careful balancing of the available resources to ensure that they can be used effectively by a wide range of applications.
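
An occupancy calculation makes this balancing act concrete. Each resource on a streaming multiprocessor limits how many thread blocks can be resident at once: warp slots, block slots, registers, and shared memory. The tightest limit wins. The per-SM limits in this Python sketch are hypothetical defaults rather than figures for any particular GPU. The sketch also ignores allocation granularity, and `resident_warps` is a hypothetical helper.

```python
def resident_warps(threads_per_block, regs_per_thread, smem_bytes_per_block,
                   max_warps=64, max_blocks=32, regs_per_sm=65536,
                   smem_bytes_per_sm=100 * 1024, warp_size=32):
    """Warps that can be resident on one SM at once. All per-SM limits are
    hypothetical defaults; each resource caps the number of resident blocks,
    and the smallest cap wins."""
    warps_per_block = -(-threads_per_block // warp_size)  # ceiling division
    block_caps = [max_warps // warps_per_block, max_blocks]
    if regs_per_thread > 0:
        block_caps.append(regs_per_sm // (regs_per_thread * threads_per_block))
    if smem_bytes_per_block > 0:
        block_caps.append(smem_bytes_per_sm // smem_bytes_per_block)
    return min(block_caps) * warps_per_block

print(resident_warps(256, 32, 0))          # 64: every warp slot is used
print(resident_warps(256, 128, 0))         # 16: registers are the bottleneck
print(resident_warps(256, 32, 48 * 1024))  # 16: shared memory is the bottleneck
```

Some programs use too many registers per thread or too much shared memory per block. They leave warp slots empty, which gives the SM fewer warps to switch between while it waits on memory.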

- Performance optimization: The GPU architect must also consider performance optimization when designing for compatibility with a wide range of applications. This requires optimizing the hardware to ensure that it can deliver high performance across a wide range of workloads, from simple graphics rendering to complex scientific simulations.

- Flexibility: To ensure compatibility with a wide range of applications, the GPU architect must design the hardware in a way that is flexible and adaptable to different workloads. This requires careful consideration of the trade-offs between performance, power consumption, and cost, as well as the ability to adjust the hardware configuration to meet the needs of different applications.

Overall, ensuring compatibility with a wide range of applications is a critical design principle for GPU architects. By designing hardware that is abstracted, efficient, optimized, and flexible, GPU architects can create hardware that is accessible to a wide range of developers and can support a broad range of applications.

Addressing the Challenges of Real-Time Ray Tracing

Ray tracing is a rendering technique that produces realistic lighting and shadowing effects in computer graphics. It simulates the way light behaves in the real world, taking into account the materials of objects, their reflective and refractive properties, and the way light interacts with them. Real-time ray tracing means rendering such images fast enough for interactive frame rates. This technology has been a long-standing goal for the gaming industry, because it can significantly enhance the realism of computer-generated graphics. However, achieving real-time ray tracing presents several challenges for GPU architects.

One of the main challenges is the computational complexity of ray tracing. For each pixel, the renderer casts one or more rays from the camera into the scene and finds the nearest surface each ray hits. It then traces further rays toward light sources and along reflections and refractions. Each of those rays must be tested against the scene geometry, usually through an acceleration structure such as a bounding volume hierarchy (BVH). As a result, the number of calculations required to render a single image can be extremely high. As the resolution of the image increases, so does the number of rays, which makes real-time rendering even more challenging.
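
A back-of-the-envelope ray budget shows how quickly the numbers grow. The Python sketch assumes one sample per pixel and three rays per sample (for example, one camera ray, one bounce ray, and one shadow ray) at 60 frames per second. These figures are illustrative assumptions, and production renderers vary widely. The function name is hypothetical.

```python
def rays_per_second(width, height, samples_per_pixel, rays_per_sample, fps):
    """Rays traced per second: pixels x samples x rays per sample x frames.
    The per-sample ray count is an illustrative assumption."""
    return width * height * samples_per_pixel * rays_per_sample * fps

for name, (w, h) in {"1080p": (1920, 1080), "4K": (3840, 2160)}.items():
    print(name, f"{rays_per_second(w, h, 1, 3, 60):,}")
# 1080p 373,248,000
# 4K 1,492,992,000
```

Each of the roughly 1.5 billion rays per second at 4K must also be traversed through the acceleration structure. This is one reason GPUs add dedicated ray tracing hardware rather than relying on general-purpose cores alone.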

Another challenge is the memory bandwidth of the GPU. The amount of data that can be transferred between the GPU and memory is limited, and real-time ray tracing requires a lot of data to be transferred quickly. If the memory bandwidth is not sufficient, the GPU will not be able to keep up with the demands of real-time rendering, leading to performance issues.

Finally, real-time ray tracing requires a lot of power to run. This can be a problem for gaming laptops and other devices that have limited power supplies. GPU architects must design GPUs that are both powerful enough to handle real-time ray tracing and efficient enough to avoid overheating and other power-related issues.

In conclusion, real-time ray tracing presents several challenges for GPU architects. These challenges include computational complexity, memory bandwidth, and power consumption. However, despite these challenges, real-time ray tracing is becoming increasingly important in the gaming industry, and GPU architects are working hard to overcome these challenges and meet the demands of this technology.

GPU Architects: Tools and Techniques

Modeling and Simulation Software

As a GPU architect, one of the essential tools in your arsenal is modeling and simulation software. These tools allow you to create and test different graphics card designs without the need for physical hardware. They enable you to evaluate various design choices, identify potential issues, and optimize performance. In this section, we will discuss some of the most commonly used modeling and simulation software in the GPU design process.

Chip Design and Verification Software

- Synopsys IC Compiler: This is a physical implementation (place-and-route) tool. It takes a synthesized gate-level netlist of the design, then places and connects its cells on the chip while optimizing for timing, area, and power.

- Cadence Virtuoso: This is a custom and analog IC design environment used for schematic entry and custom layout. GPU teams use it for hand-crafted circuits such as memory cells, I/O, and analog blocks, and to ensure those parts meet manufacturing requirements.

- Xilinx Vivado: This software is used for FPGA (field-programmable gate array) design. GPU architects can use it to build FPGA prototypes of their designs, which can then be tested and optimized before moving to ASIC (application-specific integrated circuit) design.

- Cadence Palladium: This is a hardware emulation platform. It runs a design’s RTL (register-transfer level) description orders of magnitude faster than software simulation. Teams use it to check correctness and performance, and even to run drivers and real workloads before silicon exists.

- Mentor Graphics Questa: This is a simulation and verification platform for ASIC and FPGA designs (Mentor Graphics is now part of Siemens). It supports RTL simulation, coverage measurement, power-aware simulation, and debugging.

Architecture Simulators and Software Platforms

- GPU-G: This is described as a simulation tool developed by NVIDIA that lets GPU architects model and evaluate different GPU designs against physical constraints. More generally, GPU vendors build in-house performance and power models to evaluate candidate designs before committing them to RTL.

- AMD ROCm: This is AMD’s open-source software stack for GPU programming, targeting HPC (high-performance computing) and machine learning applications. Its compilers, libraries, and profiling tools are used to run and analyze real workloads on AMD GPUs, which helps architects understand how software stresses the hardware.

- OpenCL: This is an open standard for heterogeneous parallel programming. Developers can use it to write code that runs on GPUs, CPUs, and other accelerators from many vendors. Portable OpenCL workloads are useful for comparing how different hardware handles the same computation.

In conclusion, modeling and simulation software play a crucial role in the GPU design process. These tools enable GPU architects to create, test, and optimize their designs without the need for physical hardware. They provide a virtual environment for evaluating different design choices, identifying potential issues, and optimizing performance. The use of these tools helps GPU architects to create more efficient, reliable, and cost-effective graphics cards.

High-Performance Computing Clusters

GPU architects use high-performance computing clusters to develop and test their designs. These clusters consist of interconnected computers that work together to solve complex problems much faster than a single computer could. Simulating a chip with billions of transistors is extremely compute-intensive, so the design process relies heavily on these clusters to simulate and test designs in a realistic environment.

The process of designing a graphics card begins with the creation of a concept, which is then simulated using high-performance computing clusters. This simulation process helps designers to identify potential issues and make adjustments to the design before it is physically manufactured. By utilizing these clusters, GPU architects can optimize their designs for maximum performance and efficiency, resulting in graphics cards that can handle the most demanding applications and games.

Additionally, high-performance computing clusters are also used to conduct research and development in the field of graphics card architecture. These clusters enable designers to run extensive tests and simulations, allowing them to push the boundaries of what is possible with graphics card technology. By utilizing these clusters, GPU architects can stay at the forefront of the industry and continue to develop cutting-edge graphics card designs that meet the needs of consumers and businesses alike.

Collaboration with Hardware Engineers and Other Stakeholders

As a GPU architect, collaboration with hardware engineers and other stakeholders is an essential aspect of the job. The role of a GPU architect is not only to design and develop graphics card architecture but also to work closely with other teams to ensure that the final product meets the desired specifications and performance metrics.

The GPU architect works closely with hardware engineers to ensure that the graphics card architecture is compatible with the hardware components and can be manufactured within the desired cost and time constraints. They also collaborate with software engineers to ensure that the graphics card architecture is compatible with the software applications and can meet the desired performance metrics.

Moreover, the GPU architect also works closely with other stakeholders such as product managers, marketing teams, and sales teams to ensure that the final product meets the desired specifications and can be marketed effectively. This requires a deep understanding of the market trends, customer needs, and competitive landscape, which the GPU architect must keep abreast of to ensure that the final product is competitive and meets the customer needs.

Overall, collaboration with hardware engineers and other stakeholders is a critical aspect of the job of a GPU architect, and it requires strong communication, collaboration, and project management skills to ensure that the final product meets the desired specifications and performance metrics.

The Future of GPU Architecture

Advancements in Machine Learning and Artificial Intelligence

GPU architects play a crucial role in the development of cutting-edge graphics cards that can handle the increasing demands of machine learning and artificial intelligence (AI) applications. As these fields continue to advance, GPU architects must stay ahead of the curve to ensure that their designs are optimized for the latest technologies.

One of the most exciting areas of development in machine learning and AI is the use of deep neural networks, whose design is loosely inspired by the structure of the human brain. These networks require massive amounts of data processing power, making GPUs an essential tool for researchers and developers.

To meet the demands of deep learning, GPU architects are focused on developing architectures that can support the complex computations required by these networks. This includes designing chips with specialized circuits that perform matrix multiplications and other calculations essential to deep learning algorithms. One example is NVIDIA’s Tensor Cores, introduced with the Volta architecture in 2017.
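
Matrix hardware works on small tiles rather than whole matrices. The Python sketch below computes C = A x B tile by tile. Each innermost tile product is the kind of small multiply-accumulate that a dedicated matrix unit, such as NVIDIA's Tensor Core, performs as a single hardware operation. The tile size of 2 and the function name are assumptions for illustration, and pure Python is far slower than real matrix hardware.

```python
def matmul_tiled(a, b, tile=2):
    """Multiply matrices a (n x k) and b (k x m), given as lists of rows,
    one tile at a time (tile size is an illustrative assumption)."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    c = [[0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k, tile):
                # Accumulate the product of one tile of a and one tile of b into c.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        for kk in range(k0, min(k0 + tile, k)):
                            c[i][j] += a[i][kk] * b[kk][j]
    return c

a = [[1, 2, 3], [4, 5, 6]]
b = [[7, 8], [9, 10], [11, 12]]
print(matmul_tiled(a, b))  # [[58, 64], [139, 154]]
```

Each tile of a and b is reused for several outputs. The hardware can therefore load a tile once into fast on-chip storage and keep the arithmetic units busy, instead of fetching every operand from memory.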

In addition to improving the performance of GPUs, architects are also working to make them more energy-efficient. As AI and machine learning applications become more widespread, the amount of energy consumed by data centers is expected to increase significantly. To address this issue, GPU architects are developing new designs that can reduce power consumption while maintaining high levels of performance.

Another area of focus is specialized hardware for AI applications. Dedicated AI accelerators, such as Google’s Tensor Processing Unit (TPU), are already in production use. Researchers also continue to explore chips designed specifically for tasks such as image recognition or natural language processing. Because these chips can be optimized for specific workloads, they can deliver better performance and efficiency than general-purpose GPUs on those workloads.

Overall, the future of GPU architecture is closely tied to the advancement of machine learning and AI. As these fields continue to evolve, GPU architects will play a critical role in developing the hardware necessary to support them.

Emerging Technologies: Neural Processing Units (NPUs) and Vector Processors

The world of graphics card architecture is constantly evolving, and new technologies are emerging that are poised to change the way we think about graphics processing. Two such technologies that are gaining attention are Neural Processing Units (NPUs) and Vector Processors.

Neural Processing Units (NPUs)

Neural Processing Units (NPUs) are specialized processors designed specifically for artificial intelligence (AI) and machine learning (ML) workloads. They are optimized to accelerate deep learning algorithms, which are used in a wide range of applications, from image and speech recognition to natural language processing.

NPUs are designed to offload machine learning work from the CPU, allowing faster and more efficient neural network computation, especially inference. They are typically built around large arrays of multiply-accumulate (MAC) units that operate in parallel to accelerate the computation of large matrices and tensors.

One of the key benefits of NPUs is their ability to perform matrix operations and convolutions at high speeds, which are critical for deep learning algorithms. Additionally, NPUs are designed to be highly efficient, with low power consumption and high throughput, making them ideal for mobile and edge computing applications.
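
The following Python sketch shows why multiply-accumulate throughput matters. It implements a direct 2-D convolution and counts the multiply-accumulate (MAC) operations it performs. Like most deep learning frameworks, it does not flip the kernel (strictly, this is cross-correlation). It also uses no padding and a stride of 1. These choices and the function name are assumptions for illustration.

```python
def conv2d_valid(image, kernel):
    """2-D convolution with no padding and stride 1, as deep learning
    frameworks define it (the kernel is not flipped). Returns the output
    and the number of multiply-accumulate (MAC) operations performed."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out = [[0] * out_w for _ in range(out_h)]
    macs = 0
    for y in range(out_h):
        for x in range(out_w):
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    acc += image[y + i][x + j] * kernel[i][j]  # one MAC
                    macs += 1
            out[y][x] = acc
    return out, macs

image = [[4 * r + c for c in range(4)] for r in range(4)]  # values 0..15
kernel = [[1, 0], [0, -1]]  # difference along the diagonal
out, macs = conv2d_valid(image, kernel)
print(out, macs)  # [[-5, -5, -5], [-5, -5, -5], [-5, -5, -5]] 36
```

Even this tiny 4x4 image needs 36 MACs. A full neural network can need billions of MACs per input image, which is the work NPUs are built to do in parallel.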

Vector Processors

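Vector processors are another technology gaining renewed attention in the world of graphics card architecture. The idea is not new: vector supercomputers such as the Cray-1 date back to the 1970s. Modern instruction sets such as Arm’s Scalable Vector Extension (SVE) and the RISC-V vector extension have revived it. NPUs are designed specifically for AI and ML workloads, whereas vector processors are designed to accelerate general-purpose computing workloads.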

Vector processors are optimized to perform vector operations, which are used in a wide range of applications, from scientific simulations to computer-aided design (CAD) applications. They are designed to operate on large data sets, and are particularly well-suited for applications that require high-performance computing.

One of the key benefits of vector processors is their ability to perform mathematical operations on large arrays at high speed and with low power consumption. A single vector instruction operates on many data elements at once, so the processor spends less time and energy fetching and decoding instructions while its parallel execution lanes process those elements together.
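
The core programming pattern for vector hardware is strip-mining. A long loop is processed in chunks of the machine's vector length, with one vector instruction per chunk instead of one instruction per element. The Python sketch below applies the pattern to SAXPY (y = a·x + y), with list slices standing in for vector registers. The vector length of 8 and the count of four instructions per strip (load x, load y, multiply-add, store y) are assumptions for illustration, and the function name is hypothetical.

```python
def saxpy_strip_mined(a, x, y, vector_length=8):
    """Compute a*x + y in strips of vector_length elements.
    Returns the result and the number of modeled vector instructions
    (assumed: 4 per strip, namely load x, load y, multiply-add, store y)."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    out = list(y)
    vector_instructions = 0
    for start in range(0, len(x), vector_length):
        end = min(start + vector_length, len(x))  # final strip may be shorter
        out[start:end] = [a * xi + yi for xi, yi in zip(x[start:end], out[start:end])]
        vector_instructions += 4
    return out, vector_instructions

result, n_vec = saxpy_strip_mined(2.0, list(range(20)), [1.0] * 20)
print(result[:4], n_vec, "vector instructions vs", 4 * 20, "scalar")
# [1.0, 3.0, 5.0, 7.0] 12 vector instructions vs 80 scalar
```

With a longer vector length, the same loop needs even fewer instructions.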

Overall, NPUs and vector processors are two emerging technologies that are poised to change the way we think about graphics processing. As these technologies continue to evolve, they will likely play an increasingly important role in a wide range of applications, from AI and ML to scientific simulations and high-performance computing.

Adapting to the Needs of Next-Generation Applications

The future of GPU architecture is driven by the ever-evolving demands of next-generation applications. As technology advances, the requirements for processing power, memory capacity, and efficiency continue to grow. To meet these demands, GPU architects must design and develop innovative solutions that can effectively adapt to the needs of emerging applications.

One key aspect of adapting to the needs of next-generation applications is improving energy efficiency. As data centers become more widespread and the demand for computing power increases, the need for energy-efficient GPUs becomes more critical. GPU architects must design chips that can perform complex calculations while consuming minimal power, which is essential for reducing carbon emissions and operational costs.

Another critical aspect of adapting to the needs of next-generation applications is improving the memory subsystem. As applications become more data-intensive, the need for high-bandwidth memory solutions increases. GPU architects must design memory architectures that can support large datasets and high-speed data transfers, enabling faster and more efficient processing.

In addition to improving energy efficiency and memory subsystems, GPU architects must also focus on enhancing the overall performance of GPUs. This includes optimizing the architecture for specific workloads, such as deep learning, computer vision, and simulations. By developing specialized GPUs tailored to specific applications, GPU architects can provide more efficient and cost-effective solutions for a wide range of industries.

Overall, adapting to the needs of next-generation applications is a critical aspect of the future of GPU architecture. By designing GPUs that are energy-efficient, high-performance, and capable of handling large datasets, GPU architects can help drive the development of emerging technologies and support the growth of data-intensive industries.

Addressing Sustainability and Energy Efficiency Concerns

As the demand for more powerful and energy-efficient graphics cards continues to rise, GPU architects are facing the challenge of creating innovative solutions that address sustainability and energy efficiency concerns.

The Importance of Energy Efficiency in GPU Design

Energy efficiency is a critical factor in GPU design, as it directly impacts the overall performance and sustainability of the graphics card. A GPU architect must consider the power consumption of the card, as well as the thermal efficiency of the design, to ensure that it operates at peak performance while minimizing energy waste.

The Role of Sustainable Materials in GPU Design

Sustainability is also an important consideration in GPU design. GPU makers must carefully select materials that are environmentally friendly and can be sourced responsibly. This includes using recyclable materials where possible and minimizing the use of hazardous substances that could harm the environment, such as those restricted by the EU’s RoHS directive.

Innovative Solutions for Energy Efficiency and Sustainability

GPU architects are exploring innovative solutions to address sustainability and energy efficiency concerns. These include new materials and manufacturing processes that reduce energy consumption and waste. They also include designs that let data centers do more work per watt, which makes it easier to power GPU installations with renewable energy such as solar and wind.

Additionally, GPU architects are exploring the use of AI and machine learning algorithms to optimize the performance of graphics cards, reducing the overall energy consumption of the system. This can lead to significant energy savings, making graphics cards more sustainable and environmentally friendly.

In conclusion, addressing sustainability and energy efficiency concerns is a critical aspect of GPU architecture. GPU architects must consider the environmental impact of their designs and explore innovative solutions to reduce energy consumption and waste. By doing so, they can create graphics cards that are not only powerful but also sustainable and environmentally friendly.

FAQs

1. What is a GPU architect?

A GPU architect is a specialized engineer who designs and develops the architecture of graphics processing units (GPUs). They are responsible for creating the hardware and software specifications that govern how a GPU functions, including its performance, power efficiency, and compatibility with other components.

2. What does a GPU architect do on a daily basis?

A GPU architect’s daily tasks can vary widely depending on the stage of the development process they are working on. They may spend time designing and simulating new GPU architectures using computer-aided design (CAD) software, testing and validating prototypes, analyzing and optimizing performance metrics, or collaborating with other engineers and stakeholders to ensure the product meets its intended specifications.

3. What skills do I need to become a GPU architect?

To become a GPU architect, you will typically need a strong background in computer engineering, electrical engineering, or a related field. You should also have a deep understanding of computer architecture, hardware design principles, programming languages such as C++ and assembly, and hardware description languages such as Verilog or SystemVerilog. Excellent problem-solving skills, attention to detail, and the ability to work collaboratively with others are also essential for success in this role.

4. What is the difference between a GPU architect and a CPU architect?

A CPU architect designs and develops the architecture of central processing units (CPUs), which are the primary processing components of a computer. CPU architects focus on optimizing performance and efficiency for general-purpose computing tasks, such as running software applications or executing system commands. In contrast, a GPU architect focuses on optimizing performance and efficiency for graphics rendering and other parallel processing tasks that can be performed simultaneously on multiple processing cores.

5. What kind of companies hire GPU architects?

GPU architects are typically employed by companies that design graphics processing units, such as NVIDIA, AMD, and Intel. Companies with their own GPU designs for phones and other devices, such as Apple, Qualcomm, and Arm, also employ them. Game development studios, animation companies, and other businesses that rely heavily on graphics rendering more often hire engineers who optimize software for GPUs, though they may also employ people with GPU architecture expertise. Research institutions and universities may employ GPU architects to work on cutting-edge projects and advance the state of the art in GPU technology.