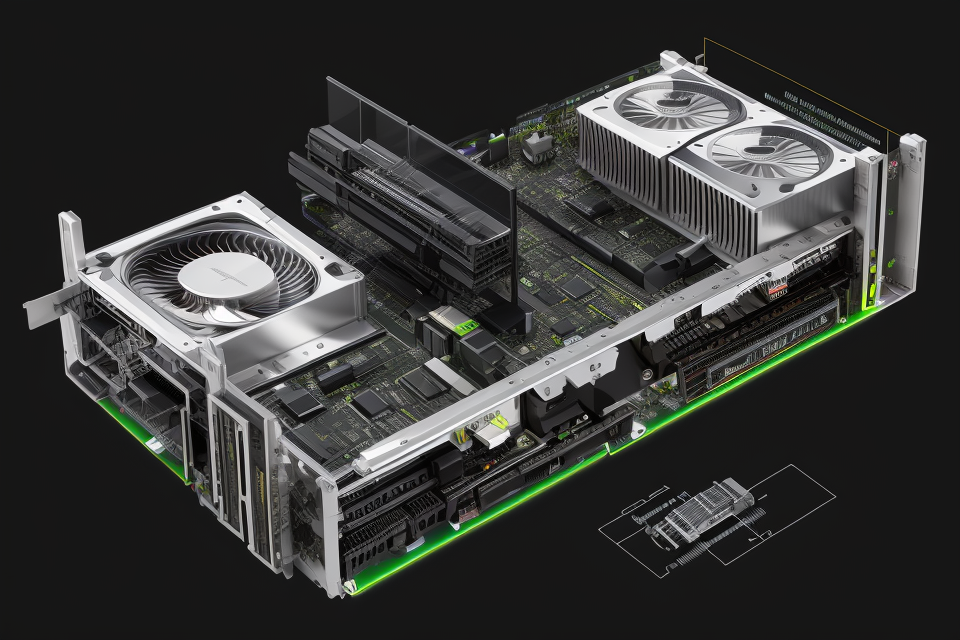

Graphics Processing Units (GPUs) have become an integral part of modern computing, delivering powerful graphics and high-performance computing capabilities. However, while many are familiar with the benefits of GPUs, few understand the architectural design that makes them so efficient. This article delves into the intricacies of GPU architecture, how it differs from CPU architecture, and why it matters. From parallel processing to memory hierarchies, we’ll look at the key components that make GPUs tick and why they are an essential tool for modern computing. So, let’s dive in and discover the secrets behind the power of GPUs.

What is GPU Architecture?

Overview of GPU Architecture

GPU architecture refers to the design and structure of the hardware and software components that make up a Graphics Processing Unit (GPU). A GPU is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device.

GPU architecture is based on the concept of parallel processing, which allows for the execution of multiple tasks simultaneously. This is achieved through the use of a large number of processing cores, which work together to perform complex calculations and render images.

The architecture of a GPU typically includes a number of different components, including:

- Rendering Pipeline: The rendering pipeline is the core of the GPU architecture, responsible for transforming and manipulating the data that is passed through it. It is composed of a series of stages, each of which performs a specific task in the process of rendering an image.

- Memory Hierarchy: The memory hierarchy refers to the different types of memory used in the GPU architecture and how they are organized and accessed. This includes the levels of cache memory, which store frequently accessed data, as well as the main memory, which holds the data being processed.

- Interconnect Networks: Interconnect networks are the high-speed connections that allow the different components of the GPU to communicate with each other. They are responsible for transferring data between the processing cores, memory, and other components.

Overall, the architecture of a GPU is designed to be highly optimized for the specific task of rendering images and video. It is built to handle large amounts of data quickly and efficiently, and to provide the processing power needed to handle complex graphics operations.

Differences between CPU and GPU Architecture

The differences between CPU and GPU architecture lie in their primary functions and the manner in which they process data. CPUs, or central processing units, are designed to handle general-purpose computations, while GPUs, or graphics processing units, are specifically optimized for parallel processing of large amounts of data, such as in graphical and scientific applications.

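CPUs are designed to minimize the latency of individual threads, using large caches, branch prediction, and out-of-order execution, while GPUs are designed to maximize total throughput. Both are stored-program (von Neumann-style) machines that keep instructions and data in memory, but GPUs follow a stream-processing model in which the same program, or kernel, is applied to many data elements at once. This design allows for efficient parallel processing of large datasets because most of the chip is devoted to arithmetic units rather than to control logic.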

In addition, GPUs are equipped with a large number of small processing cores, which allows them to handle a vast array of parallel tasks. In contrast, CPUs typically have fewer, more powerful cores that are better suited for handling complex, single-threaded operations.

Furthermore, GPUs are designed to work in conjunction with the CPU, rather than replacing it. While GPUs can perform many of the same operations as CPUs, they are not as well-suited for tasks that require complex, single-threaded processing. Instead, they excel at handling large amounts of data in parallel, making them ideal for applications such as deep learning, scientific simulations, and video processing.

Overall, the differences between CPU and GPU architecture lie in their primary functions, their architecture, and the types of tasks they are best suited for. Understanding these differences is crucial for choosing the right hardware for a given task and maximizing the performance of a system.

The Components of GPU Architecture

GPU architecture is optimized for parallel processing of large amounts of data, making GPUs ideal for applications such as deep learning, scientific simulations, and video processing. GPUs are built from CUDA cores, streaming multiprocessors, and a memory hierarchy, all of which contribute to their high performance. The memory hierarchy gives threads fast access to shared data held close to the cores, reducing the number of slow trips to off-chip memory, and the design of the register file has a significant impact on performance. Parallel computing with GPUs is organized into grids, blocks, and threads, and synchronization between threads is a critical part of it; understanding the different synchronization techniques and their usage is essential for efficient parallel computing. GPUs have found use in a wide range of scientific computing applications, including fluid dynamics simulation, molecular dynamics simulation, and climate modeling. Looking ahead, emerging trends include MIMD-style execution, heterogeneous computing, memory bandwidth optimization, and GPU support for quantum and neuromorphic computing research, while growing demand for AI and ML workloads continues to drive innovation in GPU hardware.

CUDA Cores

CUDA cores are NVIDIA’s name for the arithmetic units within a GPU that execute the majority of its computations. Each CUDA core is a simple, pipelined unit that typically completes one arithmetic operation, such as a fused multiply-add, for one thread per clock cycle; the GPU’s parallelism comes from having thousands of these cores and from keeping many threads ready to run on them. The number of CUDA cores, together with the clock speed, largely determines how many operations the GPU can perform in parallel.

The key characteristics of CUDA cores include:

- Parallel Processing: A CUDA core works on one thread’s instruction at a time, but the streaming multiprocessor that contains it keeps many threads resident and switches between them, so the cores stay busy while some threads wait for memory. This enables the GPU to process a large number of calculations in parallel, which is essential for achieving high performance in tasks such as image processing and scientific simulations.

- Single Instruction, Multiple Threads (SIMT): NVIDIA GPUs issue each instruction to a warp of 32 threads whose lanes are spread across a group of CUDA cores, so the same operation is applied to many data elements at once. This SIMT model, a thread-based relative of SIMD, lets the GPU perform more computations per clock cycle with little control logic per core. When threads in a warp take different branches, the GPU executes each path in turn with the other threads masked off, which lowers efficiency.

- Shared Memory: CUDA cores in the same streaming multiprocessor have access to a small on-chip shared memory, which lets threads in the same block share data and reduces the need for global memory accesses. This reduces the effective memory latency and improves the performance of the GPU.

- Stream Processors: Early NVIDIA documentation called these units streaming processors (SPs), and AMD calls its comparable units stream processors. Each one processes a single lane of an instruction, so together the units of a group apply one instruction to multiple data elements simultaneously, making them highly efficient for parallel computations.
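To make warp-level execution concrete (NVIDIA calls it SIMT, single instruction, multiple threads), here is a toy Python model of one warp, not real GPU code: a single instruction stream drives 32 lanes, and a per-lane mask switches off lanes that did not take the current branch. The warp size of 32 matches NVIDIA GPUs; the function name `run_warp` and the branch it evaluates are hypothetical.

```python
# Toy model of SIMT execution: one instruction stream, 32 lanes, and a
# per-lane mask that disables lanes on the branch path they did not take.
WARP_SIZE = 32  # NVIDIA warp size


def run_warp(data):
    """Compute `x * 2 if x is even else x + 1` for one warp of values.

    Returns (results, passes), where `passes` counts how many branch paths
    the warp had to issue. Divergent lanes force both paths to run.
    """
    assert len(data) == WARP_SIZE
    out = list(data)
    taken = [x % 2 == 0 for x in data]   # per-lane branch condition
    passes = 0

    if any(taken):                       # "then" path: only taken lanes active
        passes += 1
        for lane in range(WARP_SIZE):
            if taken[lane]:
                out[lane] = data[lane] * 2

    if not all(taken):                   # "else" path: remaining lanes active
        passes += 1
        for lane in range(WARP_SIZE):
            if not taken[lane]:
                out[lane] = data[lane] + 1

    return out, passes


uniform, p_uniform = run_warp([2] * WARP_SIZE)       # all lanes agree
mixed, p_mixed = run_warp(list(range(WARP_SIZE)))    # even/odd lanes diverge
print(p_uniform, p_mixed)  # 1 2
```

Both calls do the same useful work per lane, but the divergent warp issues both branch paths, which is why GPU code tries to keep the threads of a warp on the same path.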

Overall, the design of CUDA cores is critical to the performance of a GPU, as it determines the number of computations that can be performed in parallel. By understanding the characteristics of CUDA cores, it is possible to optimize the performance of a GPU and achieve high levels of parallelism in computations.

Memory Hierarchy

GPUs rely heavily on memory hierarchy to manage and access data efficiently. The memory hierarchy in GPUs is designed to ensure that data is stored and retrieved in the most optimized manner possible. In this section, we will explore the different levels of memory hierarchy in GPUs and their roles in the overall architecture.

Levels of Memory Hierarchy

The memory hierarchy in GPUs can be divided into several levels, each serving a specific purpose. The levels include:

- Register File: This is the smallest and fastest memory in the GPU. Each thread has its own private registers, which hold the values it is currently working on. The register file is limited in size, and the compiler decides which variables live in registers; a kernel that needs too many registers per thread either reduces how many threads can run at once or spills values to slower memory.

- Shared Memory: Shared memory is a small on-chip scratchpad shared among all threads in a block. Unlike a cache, it is managed explicitly by the program, which decides what to load into it. It is much faster than global memory but slower than registers, and is used for data that multiple threads in a block access repeatedly.

- Local Memory: Local memory is private to each thread, but despite its name it resides in off-chip device memory (cached in the L1 and L2 caches), so it is roughly as slow as global memory. The compiler uses it for register spills and for per-thread arrays that do not fit in registers.

- Global Memory: Global memory is the GPU’s main memory, typically GDDR or HBM DRAM. It has the largest capacity, gigabytes rather than kilobytes, but also the highest latency. It holds a kernel’s input and output data, is visible to every thread in every block, and is the memory the host copies data into and out of.

Impact on Performance

The memory hierarchy in GPUs has a significant impact on performance. Accessing data from faster memory units like registers and shared memory is much faster than accessing data from slower memory units like global memory. Therefore, it is crucial to design algorithms that take advantage of the memory hierarchy to maximize performance.
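One standard way to exploit the hierarchy is tiling. The Python sketch below is a model, not GPU code: it multiplies two matrices twice, once reading every operand from "global memory" and once staging tiles in a small "shared memory" buffer that each simulated thread block reuses. The counters assume every read from an input matrix is one global-memory access and ignore caches; the function names are illustrative.

```python
def matmul_naive(A, B, n):
    """Each output element reads a full row of A and column of B from global memory."""
    C = [[0.0] * n for _ in range(n)]
    global_reads = 0
    for i in range(n):
        for j in range(n):
            acc = 0.0
            for k in range(n):
                acc += A[i][k] * B[k][j]
                global_reads += 2          # one element of A, one of B
            C[i][j] = acc
    return C, global_reads


def matmul_tiled(A, B, n, tile):
    """Each simulated block stages tile x tile pieces of A and B, then reuses them."""
    assert n % tile == 0
    C = [[0.0] * n for _ in range(n)]
    global_reads = 0
    for bi in range(0, n, tile):           # one block per output tile
        for bj in range(0, n, tile):
            acc = [[0.0] * tile for _ in range(tile)]
            for bk in range(0, n, tile):
                # Load one tile of A and one of B into "shared memory".
                sA = [[A[bi + r][bk + c] for c in range(tile)] for r in range(tile)]
                sB = [[B[bk + r][bj + c] for c in range(tile)] for r in range(tile)]
                global_reads += 2 * tile * tile
                # Every thread (r, c) of the block reads only the staged tiles.
                for r in range(tile):
                    for c in range(tile):
                        for k in range(tile):
                            acc[r][c] += sA[r][k] * sB[k][c]
            for r in range(tile):
                for c in range(tile):
                    C[bi + r][bj + c] = acc[r][c]
    return C, global_reads


n = 8
A = [[float(i + j) for j in range(n)] for i in range(n)]
B = [[float(i - j) for j in range(n)] for i in range(n)]
C1, reads_naive = matmul_naive(A, B, n)
C2, reads_tiled = matmul_tiled(A, B, n, tile=4)
print(reads_naive, reads_tiled)  # 1024 256
```

In general the naive version performs 2n³ global reads and the tiled one 2n³/T for tile size T, so larger tiles cut traffic further, up to the limit set by the shared memory available per block.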

In addition, the size of each level affects performance. Larger on-chip memories let more data stay close to the cores, but they consume die area and power that could otherwise go to compute units, and larger structures can be slower to access. Balancing these sizes against the performance requirements of target applications is an important design consideration.

In conclusion, understanding the memory hierarchy in GPUs is essential for designing efficient algorithms and optimizing performance. The different levels of memory hierarchy in GPUs serve specific purposes, and understanding their roles can help developers design algorithms that take advantage of the memory hierarchy to maximize performance.

Streaming Multiprocessors

Streaming Multiprocessors (SMs) are a critical component of the GPU architecture, responsible for performing parallel computations on large datasets. SMs are designed to process many threads concurrently, making them highly efficient for tasks such as image processing, scientific simulations, and deep learning.

Each SM contains a set of processing elements (PEs), such as CUDA cores, together with warp schedulers, a register file, shared memory, and an L1 cache. The PEs are highly parallel and perform the same operation on different data elements simultaneously. Threads running on the same SM communicate through shared memory and synchronize with barriers; different SMs communicate only indirectly, through the L2 cache and global memory, which they reach over a high-speed on-chip interconnect.

The architecture is highly modular: a GPU is built by replicating the same SM design many times, and larger or smaller GPUs in a product family typically differ mainly in how many SMs they contain. Within an SM, the register file is divided among the resident threads, so each thread has its own private registers. The physical layout of the SMs is fixed, but software organizes its threads into 1D, 2D, or 3D grids of blocks to match the shape of the data. In a deep learning application, for example, a 2D grid of blocks maps naturally onto the output of a convolution or matrix multiplication.

SMs also schedule work dynamically. On every cycle, an SM’s warp schedulers pick a group of threads that is ready to run, so when one group stalls waiting for memory another can execute; this is how GPUs hide memory latency. At a coarser level, the GPU assigns thread blocks to SMs as resources free up and can switch between different applications and workloads. This flexibility is particularly important in modern GPUs, which are used not only for graphics rendering but also for general-purpose computing.

Overall, the design of SMs in GPUs enables highly parallel and efficient computation, making them an essential component of modern computing architectures.

Raster Engine

The Raster Engine is a fixed-function component of the GPU graphics pipeline responsible for rasterization: converting geometric primitives such as triangles, after they have been projected onto the 2D screen, into the pixel-sized fragments that make up the final image. It is designed to process large numbers of primitives and pixels quickly and efficiently, making it an essential part of the GPU’s ability to handle complex 3D graphics.

The Raster Engine receives primitives (triangles, lines, and points) assembled from vertices that the vertex shaders have already processed. It determines which pixels each primitive covers and interpolates per-vertex attributes, such as color and texture coordinates, across them. The resulting fragments are passed to the fragment (pixel) shaders. Later stages, typically handled by the render output units (ROPs), perform depth testing, which ensures that overlapping objects are drawn with correct visibility, and alpha blending, which combines transparent surfaces with what is already in the frame buffer, before the final pixel data is written to the frame buffer.
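The coverage test at the heart of rasterization can be sketched with edge functions, a standard software formulation: a pixel is inside a triangle if its center lies on the same side of all three edges. The sketch below is a plain Python model under simplifying assumptions. Real raster engines evaluate many pixels in parallel and apply a tie-breaking (top-left) rule for pixels exactly on an edge, whereas this version simply includes them. The function names are illustrative.

```python
def edge(ax, ay, bx, by, px, py):
    """Twice the signed area of triangle (A, B, P); its sign says which side of A->B P is on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize(tri, width, height):
    """Return the (x, y) pixels whose centres are covered by the triangle `tri`."""
    (x0, y0), (x1, y1), (x2, y2) = tri
    covered = []
    for y in range(height):
        for x in range(width):              # hardware tests many pixels at once
            px, py = x + 0.5, y + 0.5       # sample at the pixel centre
            w0 = edge(x1, y1, x2, y2, px, py)
            w1 = edge(x2, y2, x0, y0, px, py)
            w2 = edge(x0, y0, x1, y1, px, py)
            # Inside if all edge functions agree in sign (handles either winding).
            if (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0):
                covered.append((x, y))
    return covered


pixels = rasterize([(0, 0), (4, 0), (0, 4)], width=4, height=4)
print(len(pixels))  # 10
```

The three edge values, divided by their sum, are also the barycentric weights used to interpolate vertex attributes such as color across the triangle.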

One of the key features of the Raster Engine is its ability to perform multiple operations in parallel. This is achieved through pipelining: the rendering process is divided into stages, and each stage works on a different primitive or group of pixels at the same time. High-end GPUs typically also contain several raster engines working in parallel. This allows the GPU to process large amounts of data quickly and efficiently, making rasterization a critical part of its overall performance.

Alongside the rendering pipeline, GPUs include other specialized fixed-function units designed to optimize performance in specific applications. For example, dedicated video engines provide hardware-accelerated decoding of video streams, which offloads that work from the CPU and improves overall system performance.

Overall, the Raster Engine is a critical component of the GPU graphics pipeline, turning 3D geometry into the pixels displayed on screen. Its parallel, pipelined design, together with the GPU’s other fixed-function units, makes it an essential part of the GPU’s ability to handle complex graphics and video applications.

GPU Memory Management

Global Memory

Global memory is the large, off-chip device memory that is accessible by all threads in all blocks on a GPU, as well as by the host through explicit copies. It holds the bulk of an application’s data, such as input arrays, textures, frames, or images, and it is the main way for threads in different blocks to share data. Because it is off-chip, every access has high latency, so performance depends on accessing it efficiently.

Global memory is accessed through virtual addresses organized into pages, whose size depends on the GPU architecture and driver. When the threads of a warp access global memory, the hardware combines (coalesces) their requests into as few memory transactions as possible: if neighboring threads access neighboring addresses, a single transaction can serve many threads, while scattered accesses require many separate transactions.

Global memory is allocated and freed by the host program through the GPU driver (for example with cudaMalloc and cudaFree in CUDA), and data moves between host memory and global memory through explicit copies or, with unified memory, on demand. On the GPU, accesses to global memory pass through the L2 cache and, on many architectures, the per-SM L1 cache.

Global memory is much larger than on-chip memory, typically gigabytes compared with kilobytes per SM, but it is also much slower, with access latencies of hundreds of clock cycles. When many threads update the same location, for example with atomic operations, their accesses are serialized, which adds contention on top of that latency.
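When the 32 threads of a warp access global memory, the hardware merges their requests into as few memory transactions as possible (coalescing). The effect can be illustrated by counting how many memory segments one warp touches for a given access pattern. The Python model below assumes a warp of 32 threads, 4-byte elements, and 32-byte memory sectors, a granularity used by recent NVIDIA GPUs; it ignores caching, and the function names are hypothetical.

```python
WARP_SIZE = 32
SECTOR_BYTES = 32   # assumed memory transaction granularity


def sectors_touched(base, stride_elems, elem_bytes=4):
    """Distinct sectors fetched when lane k reads element base + k * stride_elems."""
    addrs = [(base + lane * stride_elems) * elem_bytes for lane in range(WARP_SIZE)]
    return len({a // SECTOR_BYTES for a in addrs})


def efficiency(base, stride_elems, elem_bytes=4):
    """Fraction of fetched bytes that the warp actually uses."""
    useful = WARP_SIZE * elem_bytes
    return useful / (sectors_touched(base, stride_elems, elem_bytes) * SECTOR_BYTES)


print(sectors_touched(0, 1), sectors_touched(1, 1), sectors_touched(0, 32))  # 4 5 32
```

Consecutive threads reading consecutive, aligned elements use every byte fetched; shifting the start by one element costs an extra sector, and a stride of 32 elements fetches eight times more data than the warp uses.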

Overall, global memory is an important aspect of GPU memory management, providing a way for threads to access shared data efficiently and improving performance in applications that require frequent access to common data.

Local Memory

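In this section, local memory means on-chip memory: memory located on the same chip as the GPU processing cores, such as shared memory and the L1 cache. (CUDA uses the term “local memory” differently, for per-thread storage that actually lives in off-chip device memory.) On-chip memory holds data that the GPU is actively processing, and it is much faster, and per byte more expensive, than off-chip memory.

On-chip shared memory is divided into multiple banks (32 on current NVIDIA GPUs), each of which can service an access independently. When the threads of a warp access addresses in different banks, all accesses proceed simultaneously; when several threads access different addresses in the same bank, a bank conflict occurs and those accesses are serialized.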
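Bank conflicts in banked on-chip memory (shared memory, in CUDA terms) can be estimated by checking how many distinct words a warp’s access places in its busiest bank. This Python sketch assumes the NVIDIA layout of 32 banks, each 4 bytes wide, with successive 4-byte words assigned to successive banks; threads reading the same word are served by a broadcast and do not conflict. The function names are illustrative.

```python
NUM_BANKS = 32
BANK_WIDTH = 4      # bytes per bank


def conflict_degree(byte_addresses):
    """Number of serialized passes needed for one warp's shared-memory access."""
    words_in_bank = {}
    for addr in byte_addresses:
        word = addr // BANK_WIDTH
        words_in_bank.setdefault(word % NUM_BANKS, set()).add(word)
    # Same word -> broadcast (no conflict); different words in one bank -> serialized.
    return max(len(words) for words in words_in_bank.values())


def strided(stride_words):
    """Byte addresses when lane k reads 4-byte word k * stride_words."""
    return [lane * stride_words * BANK_WIDTH for lane in range(32)]


print(conflict_degree(strided(1)), conflict_degree(strided(2)),
      conflict_degree(strided(32)), conflict_degree(strided(33)))  # 1 2 32 1
```

The stride-33 case shows the common fix of padding each row of a shared-memory array by one word, so that threads walking down a column hit different banks.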

The size of on-chip memory varies by GPU model but is far smaller than off-chip memory, typically tens to a few hundred kilobytes per SM. Because it costs more die area per byte but offers much higher bandwidth and lower latency, it is reserved for the most frequently accessed data.

Caches among the on-chip memories are managed automatically by the hardware, while shared memory is managed explicitly by the programmer. Both serve tasks that require low latency and high bandwidth, such as texture mapping and pixel shading, and data that must be accessed frequently and in real time, as in game graphics and video processing.

Overall, local memory is an important component of the GPU architecture, and it plays a crucial role in the performance of the GPU. Understanding how local memory works and how it is managed can help programmers and developers optimize their code and improve the performance of their applications.

Register File

A register file is a small amount of very fast memory located inside each streaming multiprocessor. It holds the operands and results of the instructions executed by the GPU’s ALUs (Arithmetic Logic Units). Although each thread sees only a modest number of registers, the register file is large in total, tens of thousands of 32-bit registers per SM on recent GPUs, because it must hold the registers of every resident thread. It is an essential component of the GPU’s memory hierarchy, and its design has a significant impact on the performance of the GPU.

The register file is organized as an array of registers, each with its own address, and it is divided among the resident threads so that each thread has its own private set. On NVIDIA GPUs, registers are 32 bits wide; 64-bit values such as double-precision numbers and pointers occupy pairs of registers.

The register file is designed to be highly parallel, with multiple registers being accessed simultaneously by the ALUs. This allows the GPU to perform many operations in parallel, which is a key factor in its high performance.

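In addition to holding operands, registers store intermediate results during the execution of complex algorithms. Keeping these values in registers avoids round trips to slower memory. The trade-off is that registers are a shared, limited resource: the more registers each thread uses, the fewer threads can be resident on an SM at once, which reduces the GPU’s ability to hide memory latency.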
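How many registers each thread uses also limits how many threads an SM can keep resident, since all of them share one register file. A simplified occupancy estimate is sketched below in Python. The defaults, 65,536 32-bit registers and at most 2,048 resident threads per SM, match several recent NVIDIA GPUs but are assumptions here; the model also ignores register allocation granularity and other limits such as shared memory and blocks per SM.

```python
def max_resident_threads(regs_per_thread, regs_per_sm=65536,
                         max_threads_per_sm=2048, warp_size=32):
    """Upper bound on threads per SM when registers are the only constraint."""
    regs_per_warp = regs_per_thread * warp_size
    warps_by_regs = regs_per_sm // regs_per_warp      # whole warps only
    return min(warps_by_regs * warp_size, max_threads_per_sm)


for regs in (32, 64, 128):
    threads = max_resident_threads(regs)
    print(regs, threads, threads / 2048)
# 32 2048 1.0
# 64 1024 0.5
# 128 512 0.25
```

Doubling registers per thread from 32 to 64 halves the number of resident threads, leaving fewer warps to switch to when others stall on memory.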

The design of the register file is therefore a critical aspect of the GPU’s architectural design, and it plays a key role in determining the performance of the GPU.

Parallel Computing with GPUs

Block, Thread, and Grid

The key to unlocking the power of GPUs lies in their ability to perform parallel computing. Parallel computing involves dividing a task into smaller parts and executing them simultaneously, allowing for faster processing times. In the context of GPUs, parallel computing is achieved through the use of blocks, threads, and grids.

Block

A block (or thread block) is a group of threads that is scheduled onto a single streaming multiprocessor. Threads within a block can cooperate through shared memory and synchronize with barriers, which threads in different blocks cannot do directly. Each thread has its own index within the block, and the hardware executes the block’s threads in warps of 32. All threads of a block are resident on the SM at the same time, although not every warp executes on every cycle.

Thread

A thread is a single sequence of instructions in a program. In the context of GPUs, threads are the individual units of work, each executed on a CUDA core as one lane of a warp. Threads are the smallest unit of parallelism on a GPU; each thread uses its block index and thread index to work out which data element it should process.

Grid

A grid is the collection of all the blocks launched by a single kernel call, and it is the highest level of the hierarchy. The GPU distributes the blocks of a grid across its SMs as resources become available; blocks may run concurrently or one after another, in no guaranteed order, so a correct program must not depend on the order in which blocks execute.

In summary, threads are the smallest units of work, blocks are groups of threads that run on one SM and can cooperate, and grids are the collection of all the blocks launched by a kernel. Understanding these concepts is essential for effectively utilizing the power of GPUs for parallel computing.
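In CUDA, each thread typically computes its global position with the idiom `blockIdx.x * blockDim.x + threadIdx.x`. The Python sketch below simulates a 1D launch of a vector addition to show how a grid is sized to cover an array and why the last block needs a bounds check. The loops run sequentially here only for illustration; on a GPU the blocks and threads run in parallel and in no guaranteed order. The helper names `launch_1d` and `vector_add_kernel` are hypothetical.

```python
def launch_1d(kernel, grid_dim, block_dim, *args):
    """Run `kernel` once per (block, thread) pair, as a 1-D launch would."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    i = block_idx * block_dim + thread_idx     # global thread index
    if i < len(out):                           # last block may be only partly used
        out[i] = a[i] + b[i]


n, block_dim = 10, 4
grid_dim = (n + block_dim - 1) // block_dim    # round up: 3 blocks, 12 threads
a = list(range(n))
b = [10 * x for x in a]
out = [0] * n
launch_1d(vector_add_kernel, grid_dim, block_dim, a, b, out)
print(grid_dim, out)  # 3 [0, 11, 22, 33, 44, 55, 66, 77, 88, 99]
```

Rounding the grid size up guarantees every element gets a thread; the two surplus threads in the last block simply fail the bounds check and do nothing.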

Shared Memory

Introduction to Shared Memory

In the realm of parallel computing, shared memory is a crucial concept that allows multiple processing cores to access the same memory locations concurrently. This feature is particularly relevant when discussing the architectural design of Graphics Processing Units (GPUs), which are known for their ability to perform parallel computations efficiently. By leveraging shared memory, GPUs can achieve remarkable levels of performance and scalability, making them ideal for a wide range of applications.

How Shared Memory Works in GPUs

Shared memory in GPUs is allocated on a per-block basis: each block gets its own region, which lasts as long as the block and is visible only to that block’s threads. Each block consists of multiple threads that execute the same or similar instructions in parallel. To use shared memory effectively, threads within a block must coordinate, because a thread that reads a value written by another thread must wait until that write is complete. This coordination is managed with synchronization primitives such as barriers (__syncthreads() in CUDA), which enforce an order on memory accesses.

Advantages of Shared Memory in GPUs

The utilization of shared memory in GPUs offers several advantages over alternative memory architectures:

- Reduced Latency: Shared memory is on-chip, so once data has been loaded into it from global memory, threads in a block can read it with far lower latency than an off-chip access. This results in faster overall computation times.

- Improved Efficiency: With shared memory, GPUs can exploit data locality more effectively. When multiple threads in a block need the same data, for example overlapping tiles of a matrix, the block can load it from global memory once and reuse it many times, reducing the number of costly global memory transactions.

- Enhanced Scalability: Each SM has its own shared memory, so total shared-memory bandwidth grows with the number of SMs. By reducing traffic to global memory, whose bandwidth grows more slowly than compute throughput, shared memory helps GPUs with more cores keep those cores supplied with data, enabling them to handle more complex workloads.

Challenges and Limitations of Shared Memory in GPUs

While shared memory provides significant benefits to GPU architectures, it also presents some challenges and limitations:

- Synchronization Complexity: Ensuring proper synchronization of threads within a block can be complex and may introduce performance bottlenecks if not managed effectively. A barrier that only some threads of a block reach can even cause a deadlock.

- Shared Memory Contention (Bank Conflicts): Shared memory is divided into banks, and when several threads of a warp access different addresses in the same bank, the accesses are serialized, reducing performance.

- Access Patterns and Coalescing: Strided or irregular access patterns cause bank conflicts in shared memory and uncoalesced, partly wasted transactions in global memory. GPUs combine contiguous accesses through memory coalescing, but programs must arrange their data layouts and indexing to benefit from it, which adds complexity.

In summary, shared memory is a crucial component of GPU architectures, enabling efficient parallel computations and scalability. However, its effective utilization requires careful management of synchronization and memory access patterns to overcome challenges and limitations.

Barriers and Synchronization

In parallel computing, synchronization is a critical aspect to ensure that the various processing elements work in harmony towards achieving the desired outcome. Synchronization in GPUs involves coordinating the activities of the threads and ensuring that they are executed in the correct order.

There are two types of synchronization in GPUs: explicit and implicit. Explicit synchronization is requested in the program, for example when threads call a barrier so that each waits until the others have finished a step. Implicit synchronization is provided by the execution model without an explicit call; for example, kernels launched one after another in the same CUDA stream run in order, so a later kernel sees all the results written by an earlier one.

Barriers are used in GPUs to ensure that all threads in a group have completed a particular step before any of them proceeds to the next one. When a thread reaches a barrier, it waits until every thread in the group has also reached it, and then all of them continue. On GPUs, block-level barriers are supported directly in hardware.

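Barriers can be categorized by scope into block-level and grid-level barriers. Block-level barriers, such as __syncthreads() in CUDA, synchronize the threads within a single thread block. Grid-level synchronization across all blocks of a kernel is traditionally achieved by ending the kernel and launching a new one; CUDA’s cooperative groups also provide a grid-wide sync for kernels launched cooperatively.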
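A block-level barrier is easiest to see in a parallel reduction, where threads repeatedly combine pairs of values in shared memory and must all finish one round before the next begins. The sketch below models this in Python, using `threading.Barrier` as a stand-in for CUDA’s `__syncthreads()` and a list as the block’s shared memory. It shows the synchronization pattern, not GPU performance, and assumes the block size is a power of two; `block_reduce_sum` is a hypothetical name.

```python
import threading


def block_reduce_sum(values):
    """Sum `values` with a tree reduction, one Python thread per simulated GPU thread.

    A list stands in for the block's shared memory and threading.Barrier for
    __syncthreads(). len(values) must be a power of two.
    """
    n = len(values)
    assert n > 0 and n & (n - 1) == 0, "block size must be a power of two"
    shared = list(values)
    barrier = threading.Barrier(n)

    def worker(tid):
        stride = n // 2
        while stride > 0:
            if tid < stride:
                # Reads indices >= stride, writes indices < stride: no overlap.
                shared[tid] += shared[tid + stride]
            barrier.wait()          # all n threads wait here, including idle ones
            stride //= 2

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared[0]


print(block_reduce_sum(list(range(1, 9))))  # 36
```

The `barrier.wait()` call sits outside the `if`, so every thread reaches it in every round. In CUDA, a `__syncthreads()` that only some threads of a block reach is undefined behavior and can hang the block.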

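In addition to barriers, GPUs provide other synchronization mechanisms such as memory fences and latches. A memory fence (for example __threadfence() in CUDA) guarantees that a thread’s earlier memory writes become visible to other threads before its later writes; it orders memory operations but does not make threads wait for one another. A latch is a one-time countdown: threads wait until a counter reaches zero, which ensures that a particular condition is met before they proceed with the next operation.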

Overall, synchronization is a critical aspect of parallel computing with GPUs, and understanding the different types of synchronization techniques and their usage is essential for efficient parallel computing.

Applications of GPUs

Graphics and Display

Graphics Processing Units (GPUs) have revolutionized the field of computer graphics by providing an efficient way to render complex images and animations. They are designed to handle large amounts of data and perform complex calculations at high speeds, making them ideal for applications that require high-performance graphics rendering.

In the world of computer graphics, GPUs are used for a wide range of applications, including:

- Game Development: GPUs are used to render high-quality graphics in video games, providing a more immersive gaming experience. They can handle complex scenes with thousands of objects and textures, enabling developers to create detailed and realistic game worlds.

- Virtual Reality (VR) and Augmented Reality (AR): GPUs play a crucial role in rendering the graphics for VR and AR applications, providing a seamless and immersive experience for users. They are capable of rendering complex 3D environments and handling real-time interactions between virtual objects and the real world.

- 3D Modeling and Animation: GPUs accelerate the rendering of 3D models and animations, both in interactive viewports and in final renders, enabling artists and designers to iterate faster on detailed and realistic 3D graphics.

- Video Editing and Post-Production: GPUs are used in video editing and post-production to accelerate effects processing and rendering, allowing editors to work efficiently with high-resolution footage and create high-quality visual effects.

Overall, GPUs have become an essential tool for the graphics and display industry, providing a powerful and efficient way to render complex images and animations. They have revolutionized the field of computer graphics, enabling artists and designers to create high-quality graphics and immersive experiences for users.

Deep Learning and Artificial Intelligence

Deep learning and artificial intelligence (AI) are two of the most important applications of GPUs. These applications require large-scale matrix computations, which can be efficiently performed on GPUs due to their highly parallel architecture.

Deep Learning

Deep learning is a subset of machine learning that involves training artificial neural networks to recognize patterns in data. These neural networks are composed of multiple layers of interconnected nodes, which are trained using large amounts of data. The training process involves performing matrix computations on the data, which can be efficiently performed on GPUs.

GPUs are particularly well-suited for deep learning because its core operations, such as matrix multiplications and convolutions, consist of huge numbers of independent multiply-add operations that can run simultaneously. This means GPUs can process large amounts of data quickly, making them ideal for training deep neural networks.

Artificial Intelligence

Artificial intelligence (AI) is a field of computer science that involves developing intelligent machines that can perform tasks that typically require human intelligence. AI applications include natural language processing, computer vision, and robotics.

Like deep learning, AI applications often require large-scale matrix computations, which can be efficiently performed on GPUs. In addition, GPUs are well-suited for handling the highly parallel operations required in AI applications.

Overall, GPUs have become an essential tool for deep learning and AI researchers due to their ability to perform large-scale matrix computations efficiently. As the demand for AI and deep learning applications continues to grow, the importance of GPUs in these fields is likely to increase as well.

Scientific Computing and Simulation

GPUs have revolutionized the field of scientific computing and simulation by providing an efficient and cost-effective solution for complex calculations. The parallel processing capabilities of GPUs make them ideal for solving large-scale problems in fields such as physics, chemistry, and biology.

Fluid Dynamics Simulation

One of the most important applications of GPUs in scientific computing is fluid dynamics simulation. Fluid dynamics simulation involves modeling the behavior of fluids in motion, which is crucial for many engineering and scientific applications. Because modern GPUs can perform trillions of floating-point operations per second, they can significantly reduce the time required for fluid dynamics simulations.
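For grid-based solvers, much of the work consists of stencil updates, in which each grid cell is recomputed from its neighbors. As a simplified stand-in for a full fluid solver, the Python sketch below takes one explicit finite-difference step of the 1D diffusion equation. Every interior cell depends only on the previous step, so on a GPU each cell could be updated by its own thread. The function name and the coefficient `alpha` (the diffusion number D·Δt/Δx²) are illustrative.

```python
def diffuse_step(u, alpha):
    """One explicit step of du/dt = D * d2u/dx2 on a 1-D grid with fixed ends.

    alpha = D * dt / dx**2; this explicit scheme is stable only for alpha <= 0.5.
    """
    new = list(u)                           # write to a separate buffer
    for i in range(1, len(u) - 1):          # on a GPU: one thread per cell i
        new[i] = u[i] + alpha * (u[i - 1] - 2 * u[i] + u[i + 1])
    return new


u = [0.0, 0.0, 1.0, 0.0, 0.0]
u = diffuse_step(u, alpha=0.25)
print(u)  # [0.0, 0.25, 0.5, 0.25, 0.0]
```

Writing into a separate buffer (double buffering) is what makes the cells independent: no thread reads a value that another thread has already overwritten in the same step.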

Molecular Dynamics Simulation

Molecular dynamics simulation is another important application of GPUs in scientific computing. This involves simulating the behavior of atoms and molecules in a system, which is essential for understanding many physical and chemical processes. The high computational power of GPUs makes it practical to simulate large systems over long timescales, which had been prohibitively slow with CPU-based methods alone.
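The core of a molecular dynamics step is computing the force on every atom from the others. The Python sketch below computes all-pairs Lennard-Jones forces in one dimension, in reduced units and without the cutoffs and neighbor lists that real codes use. Each atom’s total force is computed independently, so on a GPU one thread per atom could do the work; the function name and parameters are illustrative.

```python
def lj_forces_1d(positions, epsilon=1.0, sigma=1.0):
    """All-pairs Lennard-Jones forces in 1-D (reduced units, no cutoff).

    Pair force magnitude: F(r) = 24*eps/r * (2*(sigma/r)**12 - (sigma/r)**6),
    positive = repulsive. Positions must be distinct.
    """
    n = len(positions)
    forces = [0.0] * n
    for i in range(n):                      # on a GPU: one thread per atom i
        f = 0.0
        for j in range(n):
            if j == i:
                continue
            dx = positions[i] - positions[j]
            r = abs(dx)
            sr6 = (sigma / r) ** 6
            magnitude = 24.0 * epsilon / r * (2.0 * sr6 * sr6 - sr6)
            f += magnitude * (dx / r)       # push i away from j when repulsive
        forces[i] = f
    return forces


print(lj_forces_1d([0.0, 1.0]))  # [-24.0, 24.0]
```

Each pair is evaluated twice, once from each atom’s side. That redundancy is a common GPU design choice: it lets every thread write only its own result, avoiding atomic updates to shared force entries.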

Climate Modeling

Climate modeling is another area where GPUs have had a significant impact. Climate models are used to simulate the behavior of the Earth’s climate, including temperature, precipitation, and wind patterns. Because GPUs can perform large volumes of calculations quickly, researchers can run climate models at higher resolution and explore more scenarios, helping them better understand the effects of climate change.

Other Applications

In addition to fluid dynamics, molecular dynamics, and climate modeling, GPUs have also found use in a wide range of other scientific computing applications. These include materials science, structural biology, and astronomy, among others.

Overall, the ability of GPUs to perform massive parallel processing has made them an essential tool for scientific computing and simulation. With their ability to solve complex problems at unprecedented speeds, GPUs are set to play an even more important role in scientific research in the years to come.

The Future of GPU Architecture

Emerging Trends in GPU Design

GPUs have come a long way since their inception, and the future of GPU architecture is looking brighter than ever. In this section, we will discuss some of the emerging trends in GPU design that are expected to shape the future of graphics processing.

Multi-Instruction, Multi-Data (MIMD) Architecture

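One of the emerging trends in GPU design is a move toward Multiple Instruction, Multiple Data (MIMD) behavior, in which multiple processing elements execute different instructions on different data simultaneously. GPUs are already MIMD at the level of the SM, since each SM runs its own instruction streams, but within an SM they follow the SIMT model. Recent designs loosen this: NVIDIA’s independent thread scheduling, introduced with the Volta architecture, lets threads in a warp diverge and reconverge more flexibly, making code with irregular control flow easier to write correctly and run efficiently.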

Heterogeneous Computing

Another trend in GPU design is the adoption of heterogeneous computing. This architecture combines different types of processing elements, such as CPUs, GPUs, and FPGAs, to work together on a single task. This approach enables more efficient use of resources and better performance.

Memory Bandwidth Optimization

Memory bandwidth is a critical factor in GPU performance, and optimizing memory bandwidth is an emerging trend in GPU design. Techniques such as cache hierarchies, hardware prefetching, and software prefetching are being used to improve memory bandwidth utilization and reduce memory bottlenecks.
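The importance of bandwidth can be quantified with the roofline model, which caps a kernel’s attainable performance at the lower of the chip’s peak compute rate and its memory bandwidth multiplied by the kernel’s arithmetic intensity (FLOPs per byte moved). The Python sketch below uses hypothetical hardware numbers, not those of any particular GPU.

```python
def attainable_gflops(peak_gflops, bandwidth_gb_s, flops_per_byte):
    """Roofline model: performance is limited by compute or by memory traffic."""
    return min(peak_gflops, bandwidth_gb_s * flops_per_byte)


PEAK_GFLOPS = 20_000.0      # hypothetical peak compute rate
BANDWIDTH_GB_S = 1_000.0    # hypothetical memory bandwidth

# FP32 vector add c[i] = a[i] + b[i]: 1 FLOP per 12 bytes (two 4-byte loads, one store).
vec_add = attainable_gflops(PEAK_GFLOPS, BANDWIDTH_GB_S, 1 / 12)
# A hypothetical compute-heavy kernel doing 50 FLOPs per byte moved.
dense = attainable_gflops(PEAK_GFLOPS, BANDWIDTH_GB_S, 50.0)
ridge = PEAK_GFLOPS / BANDWIDTH_GB_S    # intensity needed to reach peak
print(round(vec_add, 1), dense, ridge)  # 83.3 20000.0 20.0
```

On these numbers the vector add reaches well under 1% of peak no matter how fast the arithmetic units are, which is why techniques that reduce or speed up memory traffic matter so much.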

Quantum Computing

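Quantum computing is an emerging field that has the potential to change how certain problems are solved. GPUs are not quantum computers, but they play two important roles in the field: simulating quantum circuits, whose memory and compute requirements grow exponentially with the number of qubits, and running the classical parts of hybrid quantum-classical algorithms. As a result, while quantum hardware is still in its infancy, GPUs have become an important platform for quantum computing research.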
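One concrete way GPUs contribute is by simulating quantum circuits on classical hardware. An n-qubit state is a vector of 2^n complex amplitudes, and a single-qubit gate updates independent pairs of amplitudes, which maps naturally onto one GPU thread per pair. The Python sketch below applies a gate this way; it assumes the convention that qubit 0 is the least significant bit of the index, and the function name is illustrative.

```python
import math


def apply_gate(state, gate, target):
    """Apply the 2x2 matrix `gate` to qubit `target` of a state vector.

    Amplitudes whose indices differ only in the target bit form independent
    pairs; on a GPU each pair could be updated by its own thread.
    """
    (g00, g01), (g10, g11) = gate
    bit = 1 << target
    new = list(state)
    for i in range(len(state)):
        if i & bit == 0:                    # handle each pair once, from its lower index
            j = i | bit
            a0, a1 = state[i], state[j]
            new[i] = g00 * a0 + g01 * a1
            new[j] = g10 * a0 + g11 * a1
    return new


s = 1 / math.sqrt(2)
H = ((s, s), (s, -s))                       # Hadamard gate

state = [1 + 0j, 0j, 0j, 0j]                # two qubits in |00>
state = apply_gate(state, H, target=0)
print([round(abs(a) ** 2, 3) for a in state])  # [0.5, 0.5, 0.0, 0.0]
```

Memory is the limiting factor: 30 qubits already need 2^30 amplitudes, or 16 GiB at 16 bytes per complex double, and each extra qubit doubles that, which is why large simulations are spread across many GPUs.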

Neuromorphic Computing

Neuromorphic computing is an emerging field that aims to create computing systems that mimic the human brain. Dedicated neuromorphic chips exist, but GPUs are commonly used to simulate spiking neural networks, because the state of many neurons can be updated in parallel at each time step. This makes GPUs a practical platform for developing and testing neuromorphic models.
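A common building block in spiking-network simulation is the leaky integrate-and-fire (LIF) neuron. The discrete-time sketch below updates a whole population by one time step; the leak factor, threshold, and reset values are illustrative assumptions, and the function name is hypothetical. Since each neuron's update is independent, a GPU implementation would assign one neuron per thread.

```python
def lif_step(v: list[float], current: list[float],
             threshold: float = 1.0, reset: float = 0.0,
             leak: float = 0.9) -> tuple[list[float], list[bool]]:
    """Advance a population of leaky integrate-and-fire neurons by one step.

    Each neuron's update reads only its own potential and input, so on a GPU
    every neuron can be handled by its own thread. Parameter values are
    illustrative, not taken from any particular model or library.
    """
    new_v: list[float] = []
    spikes: list[bool] = []
    for vi, ii in zip(v, current):
        vi = leak * vi + ii                    # decay the potential, then add the input
        fired = vi >= threshold
        spikes.append(fired)
        new_v.append(reset if fired else vi)   # reset after a spike
    return new_v, spikes


if __name__ == "__main__":
    v = [0.0, 0.0]
    drive = [0.5, 0.05]   # neuron 0 gets strong input, neuron 1 weak input
    for step in range(4):
        v, spikes = lif_step(v, drive)
        print(step, spikes)
```

With these parameters, neuron 0 fires on the third step and then restarts from rest, while neuron 1's potential approaches 0.5 and never reaches the threshold.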

In conclusion, the future of GPU architecture looks promising, with emerging trends in MIMD execution, heterogeneous computing, memory bandwidth optimization, and GPU-based work in quantum and neuromorphic computing. These trends are expected to shape the future of graphics processing and to drive the development of new applications.

The Impact of AI and Machine Learning on GPU Architecture

As the fields of artificial intelligence (AI) and machine learning (ML) continue to grow and evolve, so too does the role of GPUs in these areas. The demand for more powerful and efficient hardware for AI and ML has led to significant advancements in GPU architecture. In this section, we will explore the impact of AI and ML on GPU architecture and how these changes are shaping the future of GPUs.

The Growing Importance of AI and ML

The growing popularity of AI and ML has sharply increased the demand for hardware that can handle the large volumes of computation these tasks require. GPUs, with their parallel processing capabilities, have become a popular choice for AI and ML workloads. As a result, GPUs are now being designed with AI and ML in mind, with architectures optimized for these types of workloads.

Architectural Changes for AI and ML

One of the key changes in GPU architecture for AI and ML is the addition of dedicated matrix-processing units, such as NVIDIA's Tensor Cores and AMD's Matrix Cores. (These should not be confused with Google's Tensor Processing Units, or TPUs, which are separate accelerator chips rather than parts of a GPU.) These units are designed specifically for AI and ML workloads: each one performs a small matrix multiply-accumulate as a single operation. Because matrix multiplication is a fundamental operation in deep learning, this lets them perform it much faster than traditional GPU cores, allowing more efficient training and inference of deep neural networks, with higher performance and less energy spent per operation.
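Matrix units such as NVIDIA's Tensor Cores work on small tiles: each operation computes D = A × B + C for small matrices A, B, and C (first-generation Tensor Cores in the Volta architecture used 4×4 tiles). The pure-Python sketch below shows how a full matrix multiplication can be built entirely from such tile operations. The tile size and function names are illustrative assumptions; real hardware is programmed through libraries and compiler intrinsics, not code like this.

```python
Matrix = list[list[float]]


def mma_tile(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """One tile-level operation: returns A @ B + C for t x t tiles.
    A matrix unit performs this as a single hardware operation."""
    t = len(a)
    return [[c[i][j] + sum(a[i][k] * b[k][j] for k in range(t)) for j in range(t)]
            for i in range(t)]


def get_tile(m: Matrix, row: int, col: int, t: int) -> Matrix:
    """Copy the t x t sub-matrix whose top-left corner is (row, col)."""
    return [r[col:col + t] for r in m[row:row + t]]


def tiled_matmul(a: Matrix, b: Matrix, t: int = 4) -> Matrix:
    """C = A @ B for n x n matrices, built only from t x t multiply-accumulates."""
    n = len(a)
    assert n % t == 0, "this sketch assumes n is a multiple of the tile size"
    out = [[0.0] * n for _ in range(n)]
    for bi in range(0, n, t):
        for bj in range(0, n, t):
            acc = [[0.0] * t for _ in range(t)]        # accumulator tile (C)
            for bk in range(0, n, t):                  # walk along the shared dimension
                acc = mma_tile(get_tile(a, bi, bk, t), get_tile(b, bk, bj, t), acc)
            for i in range(t):
                out[bi + i][bj:bj + t] = acc[i]
    return out
```

Each output tile keeps its accumulator while the loop walks along the shared dimension, which mirrors how GPU kernels keep partial results in fast on-chip storage between tile operations.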

Another significant change in GPU architecture for AI and ML is support for mixed-precision computing. This involves storing data and performing certain computations in lower-precision formats such as FP16 or BF16, while keeping higher precision (typically FP32) where accuracy matters, for example when accumulating sums. Using smaller number formats reduces the amount of memory and memory bandwidth required and improves throughput. This is particularly important for ML workloads, where the sheer volume of data can make memory a limiting factor.
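The sketch below illustrates why mixed-precision schemes usually keep the accumulator in higher precision. It uses Python's `struct` module, whose `"e"` and `"f"` formats pack IEEE 754 half- and single-precision values, to round numbers to FP16 or FP32. Inputs are rounded to FP16 and their products kept exact; only the accumulator's precision changes. The helper names are hypothetical.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def dot(a, b, accumulate):
    """Dot product with FP16 inputs; `accumulate` rounds the running sum."""
    acc = 0.0
    for x, y in zip(a, b):
        product = to_fp16(x) * to_fp16(y)   # exact: fits easily in a Python float
        acc = accumulate(acc + product)     # round the running sum to FP16 or FP32
    return acc


if __name__ == "__main__":
    a = [0.01] * 4096
    b = [1.0] * 4096
    print("FP16 accumulate:", dot(a, b, to_fp16))
    print("FP32 accumulate:", dot(a, b, to_fp32))
    print("bytes per value:", struct.calcsize("<e"), "vs", struct.calcsize("<f"))  # 2 vs 4
```

Accumulating in FP16 stops growing at 32, because beyond that point each addend is smaller than half the spacing between neighboring FP16 values, while the FP32 accumulator reaches the expected total. The FP16 values themselves still take half the storage of FP32 values.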

The Future of GPU Architecture for AI and ML

As AI and ML continue to advance, we can expect to see further changes in GPU architecture. One likely area of focus is reducing the latency of AI and ML workloads, which can be a bottleneck for real-time applications. This may involve further optimizations to the memory hierarchy and cache design, as well as new hardware accelerators designed specifically for AI and ML tasks.

Another likely area of focus is increasing the flexibility and programmability of GPUs for AI and ML workloads. This may involve new programming models and APIs that make it easier to develop and deploy AI and ML applications on GPUs.

Overall, the impact of AI and ML on GPU architecture is significant, and we can expect to see continued innovation in this area as demand for more powerful and efficient hardware for AI and ML workloads grows.

The Challenge of Energy Efficiency in GPU Design

As demand grows for GPUs that deliver more performance without a matching rise in power consumption, designing GPUs that are both high-performing and energy-efficient has become an increasingly pressing challenge. In this section, we will explore the factors that contribute to the energy efficiency of GPUs and the strategies that GPU manufacturers are employing to meet this challenge.

Factors Contributing to Energy Efficiency in GPU Design

- Architecture: The architecture of a GPU plays a crucial role in determining its energy efficiency. The design of the GPU’s core, memory hierarchy, and interconnects can significantly impact the energy consumption of the device.

- Process Technology: The fabrication process used to manufacture the GPU also has a significant impact on its energy efficiency. Moving to a smaller process node (e.g., from 28nm to 14nm) generally improves energy efficiency, because smaller transistors have lower capacitance and can often run at a lower supply voltage, both of which reduce the energy spent each time a transistor switches.

- Workload Optimization: The ability to optimize the workload distribution within the GPU can also impact its energy efficiency. By optimizing the use of various GPU components, such as the core, memory, and interconnects, it is possible to reduce the overall energy consumption of the device.
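The process-technology point follows from the standard first-order model of dynamic (switching) power in CMOS circuits, where α is the fraction of transistors switching per cycle, C the switched capacitance, V the supply voltage, and f the clock frequency. As a purely illustrative calculation with made-up numbers, not data for any real process node, suppose a shrink cuts C by 30% and lets V drop by 10% at the same α and f:

```latex
P_{\text{dyn}} \approx \alpha \, C \, V^{2} f

\frac{P_{\text{new}}}{P_{\text{old}}}
  = \frac{C_{\text{new}}}{C_{\text{old}}}
    \left(\frac{V_{\text{new}}}{V_{\text{old}}}\right)^{2}
  = 0.7 \times 0.9^{2} = 0.567
```

This model covers only dynamic power; static (leakage) power, which becomes more significant at smaller nodes, is not included.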

Strategies for Improving Energy Efficiency in GPU Design

- Dynamic Power Management: One strategy for improving energy efficiency in GPU design is the use of dynamic power management techniques. Techniques such as dynamic voltage and frequency scaling (DVFS) and power gating let the GPU adjust its power consumption to the workload it is processing, raising clock speed and voltage when demand is high and lowering them, or switching off idle units entirely, when it is not.

- Efficient Memory Access: Another strategy for improving energy efficiency in GPU design is to optimize memory access patterns. By reducing the number of memory accesses required to process a workload, it is possible to reduce the overall energy consumption of the GPU.

- Parallel Processing: Parallel processing is another key strategy for improving energy efficiency in GPU design. Because dynamic power rises steeply with clock frequency and supply voltage, dividing a workload into smaller, parallelizable tasks and running them on many cores at moderate clock speeds can complete the same work using less energy than running it on a few cores at very high clock speeds.
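Dynamic voltage and frequency scaling (DVFS) can be sketched as a small governor: measure how busy the GPU is, then pick the lowest clock level that would still keep it below a target utilization. The clock levels, the 80% target, the function names, and the assumption that supply voltage scales linearly with frequency (which, under the dynamic-power model P ≈ αCV²f, makes power scale with f³) are all illustrative; real drivers use their own, more elaborate policies.

```python
from typing import Sequence


def choose_frequency(utilization: float, current_mhz: float,
                     levels_mhz: Sequence[float], target_util: float = 0.8) -> float:
    """Pick the lowest clock level that keeps predicted utilization at or below
    target_util, assuming the work demand (cycles per second) stays constant."""
    demand_mhz = utilization * current_mhz          # cycles per second actually needed
    for f in sorted(levels_mhz):
        if demand_mhz / f <= target_util:
            return f
    return max(levels_mhz)                          # saturated: run at the top level


def relative_dynamic_power(f_mhz: float, f_max_mhz: float) -> float:
    """Dynamic power relative to f_max, assuming V scales linearly with f,
    so P ~ V^2 * f ~ f^3."""
    return (f_mhz / f_max_mhz) ** 3


if __name__ == "__main__":
    levels = [600, 900, 1200, 1500]  # hypothetical clock levels in MHz
    for util in (0.3, 0.6, 1.0):
        f = choose_frequency(util, current_mhz=1500, levels_mhz=levels)
        print(util, f, round(relative_dynamic_power(f, 1500), 3))
```

Because a slower clock also makes the same work take longer, energy per unit of work falls more slowly than power: under the same assumptions it scales with f² rather than f³, and static power, ignored here, narrows the gain further.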

In conclusion, the challenge of energy efficiency in GPU design is a complex issue that requires a multi-faceted approach. By considering factors such as architecture, process technology, and workload optimization, as well as employing strategies such as dynamic power management, efficient memory access, and parallel processing, GPU manufacturers can design devices that are both high-performing and energy-efficient.

FAQs

1. What is the purpose of GPU architecture?

The purpose of GPU architecture is to design and optimize the processing capabilities of graphics processors, with the aim of efficiently rendering images and video on devices such as computers, smartphones, and gaming consoles. A GPU's architecture is specifically designed for large-scale data-parallel processing, which is crucial for tasks such as rendering complex 3D graphics; many GPUs also include dedicated hardware blocks for video encoding and decoding.

2. What are the key components of GPU architecture?

The key components of GPU architecture include processing cores, memory units, and interconnects. Processing cores are responsible for executing instructions and performing calculations, while memory units store data and provide quick access to it. Interconnects facilitate communication between processing cores and memory units, ensuring that data can be efficiently shared and processed.

3. How does GPU architecture differ from CPU architecture?

GPU architecture differs from CPU architecture in several ways. GPUs are designed for highly parallelizable tasks, such as rendering images and videos, whereas CPUs are optimized for fast execution of sequential, branch-heavy code, such as running operating systems and general application logic, using a smaller number of more powerful cores with large caches and sophisticated control logic. Additionally, GPUs typically have many more processing cores than CPUs, which allows them to perform calculations that can be split across many threads much faster.
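Amdahl's law quantifies why extra cores help only with certain types of calculations: if a fraction p of a program's run time can be spread perfectly across N cores and the rest must run serially, the best-case speedup is 1 / ((1 - p) + p / N). A minimal implementation follows; the function name is our own.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: best-case speedup on `cores` cores when `parallel_fraction`
    of the single-core run time parallelizes perfectly and the rest is serial."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    for p in (0.5, 0.95, 0.999):
        print(p, round(amdahl_speedup(p, cores=10_000), 1))
```

Even with 95% of the work parallelized, 1,024 cores give less than a 20× speedup, because the serial 5% dominates; this is one reason systems pair GPUs with CPUs that run serial code quickly.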

4. What are some common types of GPU architectures?

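CUDA, OpenCL, and DirectX are often mentioned in this context, but they are programming platforms and APIs rather than GPU architectures. CUDA is a parallel computing platform and programming model developed by NVIDIA for its GPUs, OpenCL is an open standard maintained by the Khronos Group for programming GPUs and other parallel processors, and DirectX is a collection of application programming interfaces (APIs) developed by Microsoft for graphics and multimedia, widely used by game developers. Examples of actual GPU architectures include NVIDIA's Ampere and Hopper and AMD's RDNA and CDNA.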

5. How do GPUs benefit from parallel processing?

GPUs benefit from parallel processing because it allows them to perform many calculations simultaneously. By dividing up a task into smaller, independent sub-tasks, GPUs can distribute the workload across thousands of processing cores, resulting in much faster processing times than would be possible with a single core. This is especially important for tasks such as rendering images and videos, which require the processing of large amounts of data.
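The way a GPU divides such a task can be sketched in plain Python by emulating a CUDA-style kernel launch for SAXPY (y = a·x + y): each thread computes its global index from its block index, block size, and thread index, following the common CUDA idiom `blockIdx.x * blockDim.x + threadIdx.x`, and handles one element. The function names are hypothetical, and the nested loops merely stand in for threads that a GPU would run in parallel.

```python
def saxpy_kernel(block_idx: int, thread_idx: int, block_dim: int,
                 a: float, x: list[float], y: list[float], n: int) -> None:
    """Body of one 'thread': computes one element of y = a * x + y."""
    i = block_idx * block_dim + thread_idx   # global thread index, as in CUDA
    if i < n:                                # guard: the last block may be partial
        y[i] = a * x[i] + y[i]


def launch(n: int, a: float, x: list[float], y: list[float], block_dim: int = 256) -> None:
    """Emulate a kernel launch with enough blocks to cover all n elements."""
    grid_dim = (n + block_dim - 1) // block_dim   # round up to whole blocks
    for b in range(grid_dim):                     # on a GPU, blocks run concurrently
        for t in range(block_dim):                # and so do the threads in a block
            saxpy_kernel(b, t, block_dim, a, x, y, n)


if __name__ == "__main__":
    n = 1000
    x = [float(i) for i in range(n)]
    y = [1.0] * n
    launch(n, 2.0, x, y)
    print(y[:4], y[-1])
```

The `if i < n` guard matters because the number of threads is rounded up to a whole number of blocks: with 1,000 elements and 256 threads per block, 4 blocks launch 1,024 threads, and the last 24 do nothing.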

6. Can GPUs be used for tasks beyond graphics rendering?

Yes, GPUs can be used for tasks beyond graphics rendering. With the advent of machine learning and deep learning, GPUs have become essential for training neural networks and running complex algorithms. Additionally, GPUs can be used for general-purpose computing, such as scientific simulations and data analysis.