Are you in the market for a new graphics card for your architecture workstation? With so many options available, it can be challenging to decide which one is the best fit for your needs. Two popular choices are the RTX and GTX graphics cards. But which one is better for architecture? In this article, we will explore the architectural differences between these two product lines and help you make an informed decision.

Introduction to Graphics Card Architecture

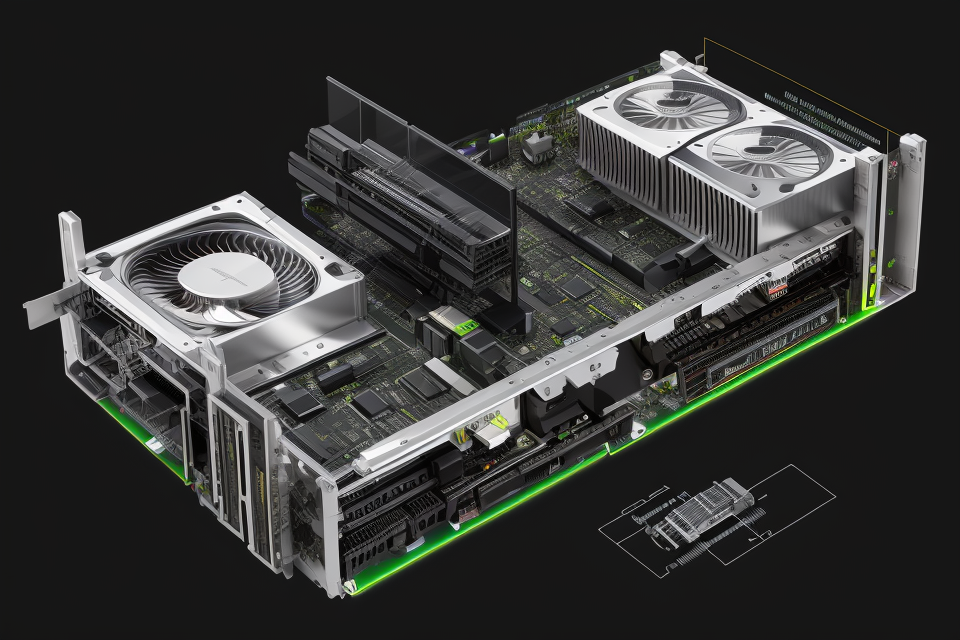

Overview of Graphics Processing Units (GPUs)

A Graphics Processing Unit (GPU) is a specialized processor designed to accelerate the creation and rendering of images in a frame buffer intended for output to a display device. GPUs appear in embedded systems, mobile phones, personal computers, workstations, and game consoles, where they handle everything from the simple display of text and static images to the complex rendering of 3D scenes and video content.

GPUs are designed to perform the mathematical calculations required to render images, video, and 3D scenes. They run thousands of these calculations in parallel, which makes them much faster than a central processing unit (CPU) on workloads that can be split into many independent pieces, such as shading millions of pixels. For that kind of work a GPU also delivers more performance per watt than a CPU, although a high-end graphics card as a whole can draw considerably more power, and produce more heat, than a desktop CPU.
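As a rough illustration of why this kind of work suits a GPU, the sketch below applies a hypothetical per-pixel function (`shade`) to every pixel of a tiny frame. Each call depends only on its own pixel, so the calls can run in any order or all at once; a GPU exploits exactly this independence across thousands of cores. The Python threads here only stand in for GPU cores and make no claim about speed.

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 8, 4  # a tiny hypothetical frame buffer


def shade(pixel):
    """Hypothetical per-pixel kernel: a left-to-right brightness gradient.

    It reads only its own coordinates, so no call depends on any other,
    which is the property that lets a GPU run them simultaneously.
    """
    x, y = pixel
    return round(255 * x / (WIDTH - 1))


pixels = [(x, y) for y in range(HEIGHT) for x in range(WIDTH)]

# CPU-style: one pixel after another.
sequential = [shade(p) for p in pixels]

# Data-parallel style: hand every pixel to a pool of workers.
# pool.map returns results in input order, so the frames must match.
with ThreadPoolExecutor(max_workers=4) as pool:
    parallel = list(pool.map(shade, pixels))

print(sequential == parallel)  # True
```

The point of the comparison is that the result does not depend on execution order, which is what makes the workload safe to spread across many cores.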

One of the key differences between RTX and GTX graphics cards is the architecture of the GPU. The architecture of a GPU determines how it performs these complex mathematical calculations and how it interacts with other components in the system. The architecture of a GPU is a critical factor in determining its performance, power efficiency, and cost.

The Role of Graphics Cards in Modern Computing

Graphics cards play a crucial role in modern computing. They are responsible for rendering images and video, running complex simulations, and driving the graphics in video games. Their role has become increasingly important as the demand for more realistic and immersive visual experiences has grown.

Graphics cards are an essential component of a computer’s hardware, and they are used in a wide range of applications, from basic desktop computing to advanced scientific simulations and gaming. The graphics card is responsible for rendering images and video, which means it converts the data into a visual format that can be displayed on a screen. This process requires a lot of computational power, and graphics cards are designed to handle this workload.

One of the key factors that differentiates graphics cards is their performance. The best-known GPU makers are NVIDIA and AMD, and both produce high-quality graphics cards designed to meet the needs of different users. Each offers a range of cards, each with its own set of features and capabilities.

In addition to performance, graphics cards also vary in terms of their architecture. Graphics cards have a complex architecture that is designed to handle the demands of modern computing. This architecture includes a variety of components, such as the GPU (graphics processing unit), memory, and input/output interfaces. The architecture of a graphics card is an important factor in determining its performance, and different graphics cards have different architectures that are optimized for different tasks.

Overall, the role of graphics cards in modern computing cannot be overstated. They are essential components of a computer’s hardware, and they play a critical role in rendering images and video, running simulations, and driving the graphics in video games. Understanding the role of graphics cards and their architecture is essential for anyone who wants to build a high-performance computer or who wants to understand the technology behind modern graphics cards.

Understanding RTX and GTX Graphics Cards

Comparison of RTX and GTX Graphics Cards

RTX and GTX graphics cards are two distinct product lines from NVIDIA, each with its own unique features and capabilities. Here’s a closer look at the key differences between these two graphics card families:

- Architecture: The first RTX cards (the RTX 20 series) are based on NVIDIA's Turing architecture, and later generations use Ampere (RTX 30 series) and Ada Lovelace (RTX 40 series). The GTX name covers several older and budget generations, including Maxwell (GTX 900 series), Pascal (GTX 10 series), and Turing chips without RT or Tensor cores (GTX 16 series). Each newer architecture brings better performance per clock, support for more advanced graphics features, and improved power efficiency.

- CUDA Cores: Within a comparable price class, RTX graphics cards usually have more CUDA cores than GTX graphics cards, so they are generally better equipped to handle complex calculations. The comparison is not universal: a high-end GTX card can still outperform an entry-level RTX card.

- Memory: RTX graphics cards often have more and faster memory than GTX cards in the same class, which helps with tasks that need a lot of data in memory at once, such as large scenes with many textures.

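- RT cores: RTX graphics cards feature dedicated RT cores, which accelerate real-time ray tracing. GTX graphics cards have no RT cores. NVIDIA's drivers let some GTX 10 and GTX 16 series cards run DirectX Raytracing (DXR) on their general-purpose CUDA cores, but performance is far lower, so real-time ray tracing is not practical on them.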

- Tensor cores: RTX graphics cards also feature dedicated tensor cores, which are used to accelerate AI and machine learning workloads. GTX graphics cards do not have tensor cores.

- Power consumption: High-end RTX graphics cards typically draw more power than GTX cards, reflecting their larger chips and additional hardware, although each newer architecture also delivers more performance per watt.

- Price: RTX graphics cards are generally more expensive than GTX cards, due to their advanced features and higher performance.

Overall, RTX graphics cards are a more advanced and powerful option for users who require the latest graphics technology, while GTX graphics cards are a more affordable option for users who do not need the latest features.

Differences in Architecture and Design

While both RTX and GTX graphics cards are designed to render images and process graphical data, there are notable differences in their architecture and design. Here are some key points to consider:

CUDA Cores

One of the most significant differences between RTX and GTX graphics cards is the number of CUDA cores they possess. CUDA (Compute Unified Device Architecture) cores are the processing units in NVIDIA GPUs that are responsible for executing calculations and rendering graphics. RTX graphics cards typically have more CUDA cores than GTX cards, which means they can handle more complex tasks and are better suited for demanding applications such as gaming, 3D modeling, and video editing.

RT Cores and Tensor Cores

RTX graphics cards also feature RT (ray tracing) cores and Tensor cores, which are not present in GTX cards. RT cores are designed to accelerate real-time ray tracing, while Tensor cores are optimized for AI and machine learning tasks. These additional cores enable RTX graphics cards to deliver more advanced lighting and shading effects, as well as faster training and inference times for AI models.

Memory Configuration

Another architectural difference between RTX and GTX graphics cards is their memory configuration. RTX cards usually have larger memory capacities than GTX cards, which allows them to handle more complex scenes and textures. Additionally, RTX cards may have faster memory speeds, which can improve performance in certain applications.
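To get a feel for why scene complexity drives memory requirements, the sketch below estimates how much video memory uncompressed textures occupy. It assumes RGBA8 textures (4 bytes per texel) with a full mipmap chain, which adds about one third on top of the base image. Real renderers often use compressed formats (for example, BC7 at 1 byte per texel), so treat the result as an upper-bound illustration rather than a figure for any particular application. The function name `texture_bytes` and the 200-texture scene are hypothetical.

```python
def texture_bytes(width, height, bytes_per_texel=4, mipmaps=True):
    """Estimate the memory one uncompressed texture occupies.

    Assumes 4 bytes per texel (RGBA8) by default. With mipmaps=True it adds
    a full mip chain in which each level halves width and height (minimum 1)
    down to a 1 x 1 level.
    """
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if not mipmaps or (w == 1 and h == 1):
            break
        w, h = max(1, w // 2), max(1, h // 2)
    return total


GIB = 1024 ** 3

# Hypothetical scene: 200 uncompressed 4096 x 4096 RGBA8 textures with mipmaps.
scene = 200 * texture_bytes(4096, 4096)
print(f"{scene / GIB:.1f} GiB")  # 16.7 GiB
```

Geometry and, for ray tracing, the acceleration structure need memory on top of this, which is why larger scenes benefit from cards with more VRAM.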

DisplayPort and HDMI Ports

Finally, display outputs differ from card to card. The number of DisplayPort and HDMI ports depends on the specific model and board partner rather than on the RTX or GTX name, and GTX 10 series cards already support DisplayPort 1.4. Newer RTX cards add HDMI 2.1 (from the RTX 30 series onward), which supports higher resolutions and refresh rates over HDMI.

In summary, RTX and GTX graphics cards share many similarities in their architecture and design, but they differ notably in performance. RTX cards generally perform better in demanding applications, thanks to their higher CUDA core counts, additional RT and Tensor cores, and larger, faster memory.

RT Cores and Tensor Cores

Overview of RT Cores and Tensor Cores

RTX graphics cards are equipped with a unique set of cores known as RT cores and tensor cores. These cores are specifically designed to handle real-time ray tracing and AI-accelerated workloads.

RT Cores

RT cores are fixed-function units that accelerate the two most expensive steps of ray tracing: traversing a bounding volume hierarchy (BVH), a tree of boxes that organizes the scene's geometry, and testing rays against the triangles inside it. Handling these steps in hardware frees the general-purpose CUDA cores for shading, which makes realistic ray-traced lighting, shadows, and reflections possible at interactive frame rates in games and other applications.
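For a sense of the work involved, the sketch below shows a ray-triangle intersection test using the well-known Möller-Trumbore algorithm. It is a software illustration of one of the operations RT cores perform in hardware, not a description of how NVIDIA's circuits implement it. A renderer runs a test like this for a very large number of rays and triangles every frame.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Moller-Trumbore test: distance t along the ray to the hit, or None."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:            # ray is parallel to the triangle's plane
        return None
    inv = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv           # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv   # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv          # distance from the origin to the hit
    return t if t > eps else None  # hits behind the origin do not count


tri = ((-1, -1, 5), (1, -1, 5), (0, 1, 5))  # a triangle in the plane z = 5
print(ray_triangle((0, 0, 0), (0, 0, 1), *tri))   # 5.0
print(ray_triangle((0, 0, 0), (0, 0, -1), *tri))  # None: triangle is behind the ray
```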

Tensor Cores

Tensor cores are designed to accelerate AI-based workloads such as deep learning and other neural network computations. Each one performs a fused matrix multiply-accumulate on small tiles of numbers (D = A × B + C), typically taking reduced-precision inputs such as FP16 and accumulating the results at higher precision. Doing this in dedicated hardware makes AI workloads run much faster than they would on general-purpose cores.
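The following sketch shows the arithmetic of a single multiply-accumulate step, D = A × B + C, in plain Python. It assumes FP16 inputs with higher-precision accumulation, as described for NVIDIA's Tensor Cores; the hypothetical helper `to_fp16` simulates half-precision rounding with the `struct` module's `e` format, and Python's double-precision floats stand in for the FP32 accumulator. A real Tensor Core performs a whole tile of this math as a single hardware operation.

```python
import struct


def to_fp16(x):
    """Round a number to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def mma(a, b, c):
    """D = A x B + C on small n x n tiles.

    A and B are rounded to FP16 first; products are then summed in Python's
    double-precision floats, standing in for a wider FP32 accumulator.
    """
    n = len(a)
    a16 = [[to_fp16(x) for x in row] for row in a]
    b16 = [[to_fp16(x) for x in row] for row in b]
    return [[c[i][j] + sum(a16[i][k] * b16[k][j] for k in range(n))
             for j in range(n)] for i in range(n)]


print(mma([[1, 2], [3, 4]], [[5, 6], [7, 8]], [[1, 1], [1, 1]]))
# [[20.0, 23.0], [44.0, 51.0]]
print(to_fp16(0.1))  # 0.0999755859375: FP16 keeps only about 3 decimal digits
```

The reduced input precision is the trade-off that lets Tensor Cores pack so much arithmetic into each operation; neural networks generally tolerate it well.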

Overall, the combination of RT cores and tensor cores in RTX graphics cards provides a significant advantage over GTX graphics cards when it comes to real-time ray tracing and AI-accelerated workloads. These cores enable RTX graphics cards to deliver more realistic lighting and shadows in games and other applications, as well as faster processing times for AI-based workloads.

How RT Cores and Tensor Cores Enhance Performance

RTX graphics cards include two kinds of dedicated hardware, RT cores and tensor cores, that set them apart from GTX graphics cards. Each is designed to speed up a specific type of task.

RT cores, short for ray tracing cores, are built specifically for real-time ray tracing. High-end RTX cards can trace billions of rays per second, enough for more accurate and realistic lighting and shadows in games and other applications. This improves the overall visual quality of the graphics and makes the experience more immersive.
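A quick back-of-the-envelope calculation shows why real-time ray tracing benefits from dedicated hardware. The hypothetical helper below multiplies resolution, frame rate, samples per pixel, and rays per sample; the example inputs (4K at 60 frames per second, one sample per pixel, one or three rays per sample) are illustrative assumptions rather than measurements of any game or card.

```python
def rays_per_second(width, height, fps, samples_per_pixel=1, rays_per_sample=1):
    """Number of rays a renderer must trace each second.

    rays_per_sample counts every ray in one sample's path, for example
    one camera ray + one shadow ray + one bounce ray = 3.
    """
    return width * height * fps * samples_per_pixel * rays_per_sample


# 4K at 60 fps, one sample per pixel, camera rays only:
print(rays_per_second(3840, 2160, 60))        # 497664000
# Same, but each sample also casts a shadow ray and one bounce ray:
print(rays_per_second(3840, 2160, 60, 1, 3))  # 1492992000
```

Even this modest setup needs roughly 1.5 billion rays per second, and each ray involves many box and triangle tests.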

Tensor cores, on the other hand, are designed for artificial intelligence and machine learning tasks. They are capable of performing complex mathematical calculations at high speeds, which makes them ideal for tasks such as image recognition and natural language processing. This enables developers to create more sophisticated AI-powered applications and games.

Overall, the inclusion of RT cores and tensor cores in RTX graphics cards provides a significant performance boost in specific tasks, making them an attractive option for users who require high levels of graphics performance.

RT Core and Tensor Core Technology in RTX Graphics Cards

The RT Cores and Tensor Cores are two key technologies that distinguish RTX graphics cards from GTX graphics cards. RT Cores are designed to accelerate real-time ray tracing, while Tensor Cores are designed to accelerate artificial intelligence and machine learning tasks.

RT Cores were introduced by NVIDIA in 2018 with the Turing-based RTX 20 series. They are designed to accelerate real-time ray tracing, a technique used to simulate the behavior of light in a scene. Ray tracing can produce more realistic lighting and shadows than the traditional rasterization techniques used in most games and graphics applications.

RT Cores are fixed-function units built into each streaming multiprocessor (SM) of the GPU, and many of them work on different rays in parallel. Together they handle the ray traversal and intersection work for a very large number of rays, which allows complex ray-traced effects to render in real time. Because they do this one job in dedicated hardware, they finish it faster and with less energy than general-purpose shader cores running the same calculations.
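RT cores spend much of their time walking a bounding volume hierarchy (BVH): a tree of axis-aligned boxes in which each box encloses part of the scene, so a ray that misses a box can skip everything inside it. The sketch below illustrates that idea in software with the standard slab test for ray-box intersection and a depth-first traversal. The dictionary-based node layout (`min`, `max`, `children`, `leaf`) is a hypothetical format chosen for readability, not the layout any real driver or GPU uses.

```python
def ray_hits_box(origin, direction, box_min, box_max):
    """Slab test: does the ray (t >= 0) pass through the axis-aligned box?"""
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray runs parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        t_near = max(t_near, min(t1, t2))  # latest entry over all slabs
        t_far = min(t_far, max(t1, t2))    # earliest exit over all slabs
        if t_near > t_far:
            return False
    return True


def traverse(node, origin, direction, hits):
    """Depth-first BVH walk that skips every subtree whose box the ray misses."""
    if not ray_hits_box(origin, direction, node["min"], node["max"]):
        return
    if "leaf" in node:
        hits.append(node["leaf"])  # a renderer would now test this leaf's triangles
    else:
        for child in node["children"]:
            traverse(child, origin, direction, hits)


# Hypothetical two-leaf BVH: geometry "A" on the left, "B" on the right.
bvh = {
    "min": (-10, -10, -10), "max": (10, 10, 10),
    "children": [
        {"min": (-10, -10, -10), "max": (-5, 10, 10), "leaf": "A"},
        {"min": (5, -10, -10), "max": (10, 10, 10), "leaf": "B"},
    ],
}
hits = []
traverse(bvh, (7, 0, -20), (0, 0, 1), hits)
print(hits)  # ['B']: the left subtree was skipped without testing its contents
```

In a real scene with millions of triangles, this pruning is what keeps the number of triangle tests per ray small.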

Tensor Cores first appeared in NVIDIA's Volta data-center GPUs in 2017 and came to GeForce graphics cards with the RTX 20 series. They are designed to accelerate artificial intelligence and machine learning tasks, such as image recognition and natural language processing, and are optimized for the matrix arithmetic at the heart of deep learning algorithms.

Tensor Cores are specialized units that perform matrix multiplication and other mathematical operations commonly used in deep learning. Many of them work in parallel, which accelerates both the training and the inference of deep neural networks. Because each Tensor Core completes a whole small matrix operation in one step, it delivers far more matrix throughput per watt than the same math run on general-purpose CUDA cores.
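Large matrix multiplications are split into many small tile-sized steps, and each step is the kind of operation a Tensor Core executes. The sketch below shows that decomposition in plain Python. The tile size of 2 and the function name `matmul_tiled` are illustrative choices, real hardware tile shapes vary by GPU generation, and the code assumes square matrices whose size is a multiple of the tile size.

```python
def matmul_tiled(a, b, tile=2):
    """C = A x B for n x n matrices, computed one tile-sized block at a time.

    Assumes n is a multiple of `tile`. Each (i0, j0, k0) step is one small
    multiply-accumulate, the unit of work a Tensor Core does in hardware.
    """
    n = len(a)
    c = [[0.0] * n for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, n, tile):
                # One tile step: C[i0.., j0..] += A[i0.., k0..] x B[k0.., j0..]
                for i in range(i0, i0 + tile):
                    for j in range(j0, j0 + tile):
                        c[i][j] += sum(a[i][k] * b[k][j]
                                       for k in range(k0, k0 + tile))
    return c


A = [[4 * i + j for j in range(4)] for i in range(4)]
B = [[1 if i == j else 0 for j in range(4)] for i in range(4)]  # identity
print(matmul_tiled(A, B) == A)  # True: multiplying by the identity returns A
```

Because the tile steps for different output blocks are independent, a GPU can hand them to many Tensor Cores at once.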

In summary, RT Cores and Tensor Cores are two key technologies that distinguish RTX graphics cards from GTX graphics cards. RT Cores are designed to accelerate real-time ray tracing, while Tensor Cores are designed to accelerate artificial intelligence and machine learning tasks. These technologies are highly specialized and optimized for specific tasks, which makes them well-suited for certain types of applications.

Benefits of RT Cores and Tensor Cores for Architectural Rendering

RTX graphics cards are equipped with two types of cores that set them apart from GTX cards: RT cores and tensor cores. RT cores are designed specifically for real-time ray tracing, while tensor cores are optimized for machine learning and AI workloads. Both types of cores offer significant benefits for architectural rendering.

Improved Realism and Accuracy

One of the main benefits of RT cores is their ability to simulate the behavior of light in a scene, creating more realistic and accurate renderings. This is particularly important in architectural rendering, where accuracy and attention to detail are critical. RT cores enable more realistic lighting and shadows, as well as more accurate reflections and refractions. This leads to more realistic and accurate representations of materials and textures, which is essential for architectural visualization.

Faster Rendering Times

In addition to improving the accuracy of renderings, RT cores shorten rendering times. In GPU renderers that support them, for example Blender Cycles through its NVIDIA OptiX backend, the RT cores handle ray traversal and intersection testing in dedicated hardware instead of leaving that work to the general-purpose CUDA cores or the CPU. This can significantly reduce the time required to render complex architectural scenes, allowing for faster turnaround times and more efficient workflows.

Enhanced Machine Learning Capabilities

Tensor cores also benefit architectural rendering through AI features. Many GPU renderers use an AI denoiser, such as NVIDIA's OptiX denoiser, which runs on Tensor Cores to clean up a noisy preview after only a few samples, and NVIDIA DLSS uses Tensor Cores to upscale real-time views. Tensor cores also speed up training and inference for deep learning models, which can help in architectural workflows that use AI, such as generating realistic virtual environments or automating parts of the rendering process.

Overall, the RT cores and tensor cores found in RTX graphics cards offer significant benefits for architectural rendering. They enable more realistic and accurate renderings, faster rendering times, and enhanced machine learning capabilities, making RTX cards a valuable tool for architects and designers who want to create high-quality, realistic visualizations of their work.

Limitations of RT Cores and Tensor Cores in RTX Graphics Cards

Despite the impressive capabilities of RT cores and Tensor cores in RTX graphics cards, there are some limitations to their performance. Here are some of the key limitations:

- Limited Compatibility: RT cores are used only by software that supports a ray tracing API such as DirectX Raytracing (DXR), Vulkan Ray Tracing, or NVIDIA OptiX, and Tensor cores only by software written to use them (for example, through DLSS or an AI denoiser). In other games and applications these cores sit idle, so the extra hardware adds cost without adding performance.

- Heat Management: Ray tracing and AI workloads keep more of the chip busy, which raises power draw and heat output. If the case and cooler cannot keep up, the card lowers its clock speeds to protect itself (thermal throttling), which reduces performance, so good airflow matters for long rendering sessions.

- Higher Cost: The advanced technology used in RT cores and Tensor cores can make RTX graphics cards more expensive than their GTX counterparts. This can be a barrier for some users who may not have the budget to invest in an RTX graphics card.

- Uneven Features Across Models: Not every RTX card has the same capabilities. Entry-level models have fewer RT and Tensor cores and less memory, and some features are limited to newer generations; for example, DLSS Frame Generation requires an RTX 40 series card. Users may need a higher-end or newer model to take full advantage of these features.

Overall, while RT cores and Tensor cores offer significant advantages in terms of performance and capabilities, they are not without their limitations. It is important for users to carefully consider their needs and budget when choosing between an RTX and GTX graphics card.

RT Core and Tensor Core Technology in GTX Graphics Cards

RT cores and tensor cores are two of the most important architectural differences between RTX and GTX graphics cards. While both types of cards are designed to deliver high-quality graphics, they do so in different ways. In this section, we look at what GTX cards offer in their place.

GTX graphics cards do not have RT cores. When a GTX card runs ray tracing at all, for example through NVIDIA's driver-level DirectX Raytracing (DXR) support on GTX 10 and GTX 16 series cards, or in a GPU renderer's CUDA backend, the work runs on its general-purpose CUDA cores, which is much slower than dedicated hardware.

GTX graphics cards also have no tensor cores. The Turing-based GTX 16 series includes dedicated FP16 units in their place, which speed up half-precision math but do not perform the matrix multiply-accumulate operations that Tensor Cores handle. AI and machine learning workloads therefore run on the CUDA cores.

The practical difference is not that GTX cards use these cores differently, but that they have none. Features built on RT and Tensor cores, such as hardware-accelerated ray tracing, DLSS, and Tensor Core-accelerated denoising, are unavailable on GTX cards, and the corresponding workloads fall back to general-purpose cores or the CPU.

For architectural rendering, RTX graphics cards are set apart from GTX cards by these same two components: RT cores, which accelerate real-time ray tracing, and tensor cores, which are optimized for artificial intelligence and machine learning tasks.

RT cores enable more realistic lighting and shadows in architectural rendering by simulating the behavior of light in a virtual environment. This technology provides a more accurate representation of how light interacts with different materials, which is essential for creating photorealistic visualizations. RT cores can also enhance global illumination, reflectance, and refraction, resulting in a more visually appealing and realistic representation of the final product.

Tensor cores, on the other hand, speed up AI-driven tasks such as upscaling and denoising. Upscaling with NVIDIA DLSS renders the image at a lower resolution and uses a neural network to reconstruct a higher-resolution result, which keeps real-time walkthroughs of detailed architectural models smooth. Denoising removes the grainy noise that appears when a ray-traced image is rendered with only a few samples per pixel, so a clean preview appears much sooner. Tensor cores can also accelerate image recognition and object detection models, which may help architects identify and analyze aspects of their projects, although such tools depend on the software rather than on the card alone.
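Denoising matters because the noise in a ray-traced image falls off slowly as more samples are added: for a Monte Carlo estimate it shrinks roughly with the square root of the sample count, so halving the noise takes about four times as many samples. The sketch below demonstrates this with a hypothetical one-pixel scene in which each sample sees a light with probability 0.3. It models the noise only and is not a denoiser; an AI denoiser attacks the same problem from the other side, cleaning up an image rendered with few samples.

```python
import random
import statistics

LIGHT_PROBABILITY = 0.3  # hypothetical: share of sample rays that reach the light


def render_pixel(samples, rng):
    """Monte Carlo estimate of one pixel's brightness (exact answer: 0.3)."""
    hits = sum(1 for _ in range(samples) if rng.random() < LIGHT_PROBABILITY)
    return hits / samples


def noise(samples, trials=2000, seed=1):
    """Standard deviation of the pixel estimate across many independent renders."""
    rng = random.Random(seed)
    estimates = [render_pixel(samples, rng) for _ in range(trials)]
    return statistics.pstdev(estimates)


for n in (4, 16, 64, 256):
    print(n, round(noise(n), 3))  # noise roughly halves each time samples quadruple
```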

In summary, RT cores and tensor cores provide architectural rendering with several benefits, including more realistic lighting and shadows, improved global illumination, and enhanced AI-driven tasks such as upscaling and denoising. These features make RTX graphics cards a valuable tool for architects looking to create high-quality, photorealistic visualizations of their projects.

Limitations of RT Cores and Tensor Cores in GTX Graphics Cards

Although GTX graphics cards are capable of handling a wide range of tasks, they lack the advanced features of RTX graphics cards, including RT cores and tensor cores. These cores are responsible for real-time ray tracing and AI acceleration, respectively, and their absence limits the performance of GTX graphics cards in certain scenarios.

One of the main limitations of GTX graphics cards is their lack of hardware-accelerated ray tracing, because they do not have the RT cores designed for this purpose. Ray tracing on a GTX card must instead run on its general-purpose CUDA cores, in drivers and renderers that support this, or on the CPU. Both are far slower than dedicated hardware, so real-time applications usually fall back to rasterization-based approximations that do not achieve the same level of realism.

Another limitation of GTX graphics cards is their lack of tensor cores. These cores are designed to accelerate AI workloads, such as machine learning and deep learning, by performing matrix multiplications and other mathematical operations at high speeds. The absence of tensor cores means that GTX graphics cards may not be able to perform these tasks as efficiently as RTX graphics cards, which can impact their performance in certain applications.

In summary, because GTX graphics cards lack RT cores and tensor cores, they handle certain tasks less efficiently than RTX graphics cards. This affects their performance in applications that rely on real-time ray tracing or AI acceleration, such as gaming, content creation, and scientific simulations.

Ray Tracing and Global Illumination

Overview of Ray Tracing and Global Illumination

Ray tracing is a technique used in computer graphics to simulate the behavior of light. It is a complex process that involves tracing the path of light rays as they bounce off surfaces and interact with objects in a scene. The goal of ray tracing is to produce realistic lighting and shadows that accurately reflect the physical properties of the objects in the scene.
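The core loop of ray tracing can be sketched in a few lines: find where a ray hits the scene, then cast a shadow ray from the hit point toward a light to see whether anything blocks it. The example below assumes a hypothetical scene made only of spheres and a single point light, which keeps the intersection math short; production renderers trace triangles and use acceleration structures.

```python
import math


def hit_sphere(origin, direction, center, radius):
    """Smallest t > 0 where origin + t * direction lies on the sphere, or None.

    Assumes `direction` has unit length, which simplifies the quadratic.
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * x for d, x in zip(direction, oc))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None                    # the ray misses the sphere entirely
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):   # nearer intersection first
        if t > 1e-6:                   # ignore hits behind the ray origin
            return t
    return None


def in_shadow(point, light_pos, spheres):
    """Cast a shadow ray: is any sphere between the point and the light?"""
    to_light = [lp - p for lp, p in zip(light_pos, point)]
    dist = math.sqrt(sum(x * x for x in to_light))
    direction = [x / dist for x in to_light]
    for center, radius in spheres:
        t = hit_sphere(point, direction, center, radius)
        if t is not None and t < dist:  # the blocker sits before the light
            return True
    return False


# Hypothetical scene: one sphere hovering above a floor, light overhead.
spheres = [((0.0, 5.0, 0.0), 1.0)]
light = (0.0, 10.0, 0.0)

print(hit_sphere((0.0, 5.0, -10.0), (0.0, 0.0, 1.0), *spheres[0]))  # 9.0
print(in_shadow((0.0, 0.0, 0.0), light, spheres))  # True: sphere blocks the light
print(in_shadow((5.0, 0.0, 0.0), light, spheres))  # False
```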

Global illumination (GI) refers to lighting that accounts for both the direct light from light sources and the indirect light that has bounced off other surfaces. It captures the way light moves around a scene through reflection, refraction, and absorption, producing a more accurate representation of how light behaves in a real-world environment. GI can be computed with ray tracing (for example, path tracing) or approximated with other methods, such as precomputed light maps.
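Global illumination is usually described by the rendering equation, introduced by James Kajiya in 1986. It states that the light leaving a surface point x in direction ω_o is the light the surface emits plus all incoming light that the surface reflects toward that direction:

```latex
L_o(\mathbf{x}, \omega_o) = L_e(\mathbf{x}, \omega_o)
  + \int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\,
    L_i(\mathbf{x}, \omega_i)\,(\omega_i \cdot \mathbf{n})\, d\omega_i
```

Here L_e is emitted radiance, f_r is the surface's reflectance function (BRDF), L_i is incoming radiance from direction ω_i, n is the surface normal, and the integral runs over the hemisphere Ω above the surface. Direct lighting is the part of L_i that arrives straight from light sources; indirect lighting is the part that is itself the outgoing light of other surfaces, which makes the equation recursive. Path tracing estimates the integral by following randomly chosen rays, which is why global illumination is so computationally demanding.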

RTX graphics cards are designed to support real-time ray tracing, which means they can simulate the behavior of light in real time as the scene changes. This allows for more realistic lighting and shadows in video games and other graphics applications. GTX graphics cards, on the other hand, lack hardware support for real-time ray tracing and rely mainly on traditional rasterization techniques for rendering graphics.

One of the key differences between RTX and GTX graphics cards is the way they handle ray tracing and global illumination. RTX cards have specialized hardware for the job: RT cores accelerate the ray tracing itself, and Tensor cores support it through AI denoising and upscaling. GTX cards lack this hardware, so ray tracing and global illumination calculations must run on their general-purpose CUDA cores or on the CPU. This results in slower performance and, in real-time applications, usually forces cheaper approximations that produce less accurate lighting and shadows.

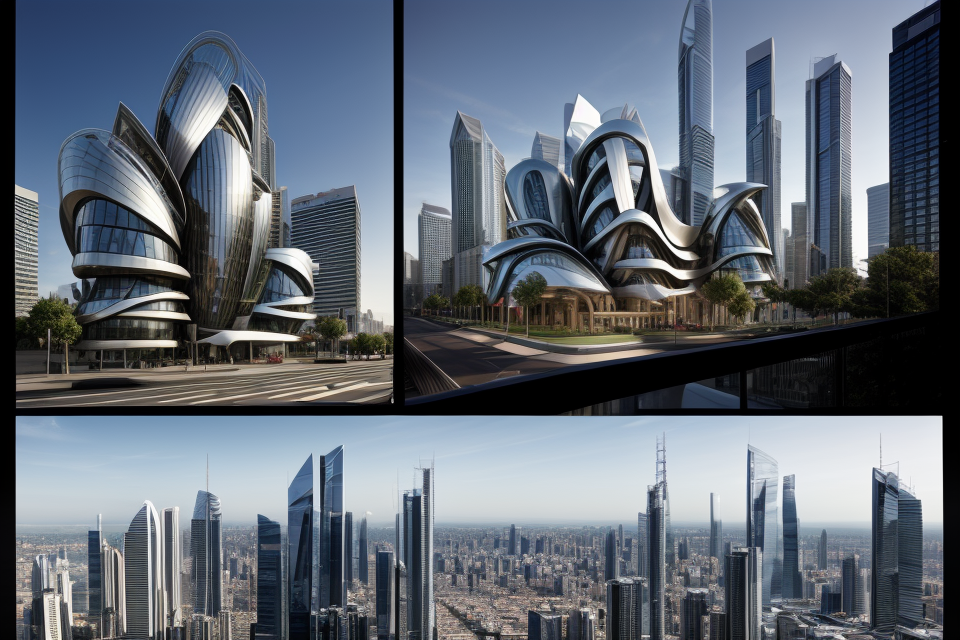

The Impact of Ray Tracing and Global Illumination on Architectural Visualization

Ray tracing and global illumination are two of the most important features of RTX graphics cards that have a significant impact on architectural visualization.

Improved Realism

Ray tracing and global illumination help to create more realistic lighting and shadows in architectural visualizations. This is particularly important for interior and exterior spaces, where lighting can have a significant impact on the overall appearance of the design. With RTX graphics cards, architects and designers can create more accurate and realistic lighting simulations, which can help to improve the overall quality of their visualizations.

In addition to improving the realism of architectural visualizations, RTX graphics cards can shorten rendering times in GPU renderers that use their RT cores. This is particularly important for large and complex designs, where rendering times can be prohibitively long. With RTX graphics cards, architects and designers can create high-quality visualizations faster, which can help to streamline the design process and improve productivity.

Advanced Shading and Reflection Effects

RTX graphics cards also offer advanced shading and reflection effects, which can help to enhance the overall realism of architectural visualizations. This is particularly important for designs that include reflective surfaces, such as glass or water. With RTX graphics cards, architects and designers can create more realistic reflections and shadows, which can help to improve the overall appearance of their visualizations.

Overall, ray tracing and global illumination have a significant impact on architectural visualization. With RTX graphics cards, architects and designers can produce more realistic and accurate visualizations in less time, with advanced shading and reflection effects. These features improve the overall quality of architectural visualizations and enhance the design process.

Ray Tracing and Global Illumination in RTX Graphics Cards

RTX graphics cards, such as the NVIDIA GeForce RTX 3080, have a more advanced architecture for ray tracing and global illumination than GTX graphics cards. Ray tracing is a technique used to simulate the behavior of light in a scene, resulting in more realistic lighting and shadows. Global illumination accounts for both the direct light from every source in a scene and the indirect light that bounces between surfaces, providing a more accurate representation of how light interacts with objects.

One of the key differences between RTX and GTX graphics cards is the presence of dedicated ray tracing cores: RTX graphics cards have them, while GTX graphics cards do not. As a result, RTX graphics cards perform ray tracing far more efficiently, producing smoother and more realistic lighting effects at usable frame rates.

Another difference is memory. Ray tracing has no separate memory pool: the scene's geometry, its textures, and the BVH acceleration structure that the RT cores traverse all have to fit in the card's video memory (VRAM). Many RTX cards offer more VRAM than GTX cards in the same class, which allows more complex and detailed scenes to be ray traced without running out of memory, although the exact amount depends on the model.

Additionally, RTX hardware makes ray-traced global illumination practical in real time, and software can build on it with techniques such as NVIDIA's RTX Global Illumination (RTXGI). The benefit is most noticeable in games and other applications that rely heavily on lighting effects, such as indoor environments or scenes with complex lighting setups.

Overall, the architectural differences between RTX and GTX graphics cards result in significant improvements in ray tracing and global illumination. These improvements lead to more realistic and immersive visuals, making RTX graphics cards a popular choice for gamers and professionals alike.

Comparison of Ray Tracing and Global Illumination in RTX and GTX Graphics Cards

Ray tracing is a rendering technique that simulates the behavior of light in a scene, creating more realistic shadows, reflections, and refractions. It requires a lot of processing power and is usually associated with RTX graphics cards. Global illumination is the broader goal of calculating how light interacts with all the objects and surfaces in a scene, including light that bounces between them, which creates more realistic lighting and shadows. Ray tracing is one way to compute global illumination, while other methods approximate it, and the approaches differ in complexity and performance requirements.

Ray Tracing

- RTX graphics cards are specifically designed to handle the complex calculations required for ray tracing, providing more accurate and realistic lighting and shadows in 3D scenes.

- The RTX series uses specialized hardware called RT cores to accelerate ray tracing, providing a significant performance boost over GTX graphics cards.

- Each RTX generation has improved its RT cores (second-generation RT cores in the RTX 30 series, third-generation in the RTX 40 series), further increasing ray tracing performance.

Global Illumination

- While GTX graphics cards can also perform global illumination, they may not provide the same level of accuracy and realism as RTX graphics cards.

- GTX graphics cards may also struggle with complex scenes that require a high level of global illumination, leading to slower performance and reduced frame rates.

- RTX graphics cards, on the other hand, are specifically designed to handle the complex calculations required for global illumination, providing more accurate and realistic lighting and shadows in 3D scenes.

In summary, both RTX and GTX graphics cards can perform global illumination, but RTX graphics cards are specifically designed for the complex calculations that ray tracing requires. Their hardware-accelerated ray tracing gives them a significant performance advantage over GTX graphics cards, which must run the same work on general-purpose cores or the CPU.

Ray Tracing and Global Illumination in GTX Graphics Cards

Ray tracing and global illumination are two of the most significant features of modern graphics cards. GTX graphics cards, in particular, have been widely used for their ability to handle complex lighting effects and realistic rendering. In this section, we will explore the architectural differences between RTX and GTX graphics cards with respect to ray tracing and global illumination.

How GTX Graphics Cards Handle Lighting

GTX graphics cards rely on a technique called rasterization to render scenes. Rasterization projects each triangle of the scene's geometry onto the screen, determines which pixels it covers, and shades those pixels based on material properties and light sources. This method is fast, but it does not trace how light actually travels through a scene, so reflections, refractions, and shadows have to be approximated and tend to look less realistic. RTX cards also use rasterization for most of the image and add ray tracing for specific effects.
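For comparison with ray tracing, the sketch below shows the basic coverage test at the heart of rasterization: an edge function decides whether a pixel's center lies inside a triangle. Rasterization loops over triangles and asks which pixels each one covers, while ray tracing loops over pixels and asks which triangle each ray hits. The function names are hypothetical, and real GPUs do this in fixed-function hardware with extra tie-breaking rules for pixels that fall exactly on an edge; this version simply counts edge pixels as inside.

```python
def edge(a, b, p):
    """Edge function: positive if p lies to the left of the directed edge a -> b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(v0, v1, v2, width, height):
    """Pixels whose centers lie inside a triangle (edges included).

    Assumes the vertices are counter-clockwise in a y-up coordinate system.
    """
    covered = []
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample each pixel at its center
            if edge(v0, v1, p) >= 0 and edge(v1, v2, p) >= 0 and edge(v2, v0, p) >= 0:
                covered.append((x, y))
    return covered


pixels = rasterize((0, 0), (4, 0), (0, 4), width=4, height=4)
print(len(pixels))  # 10
```

Notice that nothing in this loop knows about lights or other objects; shadows and reflections must be added by separate approximations, which is the limitation ray tracing addresses.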

The Role of Global Illumination in GTX Graphics Cards

Global illumination is a technique used to simulate the way light interacts with a scene as a whole, rather than with individual objects. On GTX graphics cards, real-time global illumination relies on approximations such as light maps or ambient occlusion precomputed ahead of time, or screen-space techniques such as screen-space reflections. These techniques are cheap enough for real-time use and can produce good results, but precomputed lighting does not update when the scene changes, and screen-space methods miss anything that is not visible on screen.

Comparison with RTX Graphics Cards

In contrast, RTX graphics cards can combine rasterization with ray tracing, an approach often called hybrid rendering. Ray tracing follows the path of light rays as they bounce off surfaces and interact with objects in the scene, so RTX graphics cards can produce more realistic reflections, refractions, and shadows, as well as more accurate global illumination.

RTX graphics cards can also run ray-traced global illumination techniques, such as those in NVIDIA's RTX Global Illumination (RTXGI) SDK, which produce more accurate results than the approximations used on GTX graphics cards. These techniques are practical in real time because the RT cores accelerate ray traversal and intersection, offloading that work from the general-purpose shader cores, while the Tensor cores can handle AI denoising and upscaling.

In summary, GTX graphics cards handle complex lighting and global illumination through approximations that keep real-time rendering fast but sacrifice physical accuracy. RTX graphics cards use dedicated ray tracing hardware to compute global illumination more accurately at interactive speeds, resulting in more realistic and immersive rendering.

Both RTX and GTX graphics cards support traditional rasterization-based rendering, but RTX cards have a significant advantage when it comes to ray tracing and global illumination. Ray tracing simulates the behavior of light in a scene, producing more realistic reflections, refractions, and shadows. Global illumination accounts for indirect light, the light that bounces off walls, floors, and other surfaces before reaching the viewer, which gives more accurate lighting and shading.

Here’s a detailed comparison of ray tracing and global illumination in RTX and GTX graphics cards:

Ray Tracing

- RTX: RTX graphics cards feature dedicated hardware and software support for ray tracing. NVIDIA’s RT cores (ray tracing cores) accelerate the two most expensive steps, traversing the scene’s bounding volume hierarchy (BVH) and testing rays against triangles, allowing for smoother performance and higher frame rates.

- GTX: GTX graphics cards have no RT cores. NVIDIA enabled DirectX Raytracing on some GTX 10-series and 16-series cards through a driver update, but the ray tracing then runs in software on the general-purpose CUDA cores. This is far slower, so games and renderers must either lower the ray-tracing quality or accept much lower frame rates.
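RT cores speed up the search for the triangle a ray hits by walking a bounding volume hierarchy (BVH). Rays are first tested against boxes that enclose groups of triangles, and only rays that hit a box are tested against what is inside it. On a GTX card the same tests run as ordinary shader code on the CUDA cores. The ray-box test at the heart of that traversal, the standard "slab" method, can be sketched in Python as follows. The function names are illustrative and not part of any NVIDIA API.

```python
import math

def inverse_direction(direction):
    """Precompute 1/d per axis; axes the ray runs parallel to get infinity."""
    return tuple(1.0 / d if d != 0 else math.inf for d in direction)

def ray_hits_box(origin, inv_dir, box_min, box_max):
    """Slab test: does the ray enter the axis-aligned box at some t >= 0?

    For each axis, find where the ray crosses the box's two bounding planes,
    then intersect those intervals; a non-empty overlap means a hit.
    (A ray lying exactly in a box face with a zero direction component
    produces NaN here; production code handles that edge case.)
    """
    t_near, t_far = 0.0, math.inf
    for axis in range(3):
        t1 = (box_min[axis] - origin[axis]) * inv_dir[axis]
        t2 = (box_max[axis] - origin[axis]) * inv_dir[axis]
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
    return t_near <= t_far

box = ((-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
toward = inverse_direction((0.0, 0.0, 1.0))
print(ray_hits_box((0.0, 0.0, -5.0), toward, *box))  # aimed at the box: True
print(ray_hits_box((5.0, 0.0, -5.0), toward, *box))  # passes beside it: False
```

A miss on a box lets the traversal skip every triangle inside it, which is why a BVH turns millions of potential triangle tests per ray into a few dozen box and triangle tests.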

Global Illumination

- RTX: RTX graphics cards include Tensor cores that accelerate AI workloads such as denoising and DLSS upscaling. Ray-traced global illumination computed with only a few rays per pixel is noisy; an AI denoiser cleans up that noise, which lets renderers produce realistic lighting and shading in a fraction of the time, even in complex scenes with multiple light sources.

- GTX: GTX graphics cards have no Tensor cores, so DLSS is unavailable and AI denoisers, where supported, must run on the general-purpose CUDA cores. Games on GTX cards typically rely on traditional lighting techniques instead. These can still produce good results, but they may not be as accurate or efficient as ray-traced global illumination on RTX cards.
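Why denoising matters can be shown with a small Monte Carlo experiment. A path tracer estimates the light reaching a point by averaging randomly sampled rays, and the noise in that estimate shrinks only with the square root of the sample count, so halving the noise takes four times as many rays. The Python sketch below estimates the light arriving at a surface from a uniformly bright sky (assuming unit sky radiance, the exact answer is π) and measures the error at several sample counts. An AI denoiser attacks the same problem from the other side, cleaning up a low-sample image instead of tracing more rays.

```python
import math
import random

def estimate_sky_light(samples, rng):
    """Monte Carlo estimate of the integral of cos(theta) over the hemisphere.

    Directions are sampled uniformly over the hemisphere (pdf = 1 / (2*pi)).
    For uniform directions, cos(theta) is itself uniform on [0, 1], so it can
    be drawn directly. With unit sky radiance the exact answer is pi.
    """
    total = 0.0
    for _ in range(samples):
        cos_theta = rng.random()
        total += cos_theta * (2.0 * math.pi)  # f / pdf, with pdf = 1 / (2*pi)
    return total / samples

def rms_error(samples, trials=200, seed=1):
    """Root-mean-square error of the estimate over many independent trials."""
    rng = random.Random(seed)
    errors = [estimate_sky_light(samples, rng) - math.pi for _ in range(trials)]
    return math.sqrt(sum(e * e for e in errors) / trials)

for n in (16, 64, 256, 1024):
    # Each 4x increase in rays should roughly halve the error.
    print(f"{n:5d} rays per pixel: RMS error {rms_error(n):.3f}")
```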

Overall, the combination of dedicated RT cores, mature software support, and AI-accelerated denoising makes RTX graphics cards the stronger choice for realistic ray tracing and global illumination in gaming and professional applications.

Importance of Ray Tracing and Global Illumination for Architectural Visualization

Ray tracing and global illumination are crucial techniques in architectural visualization, providing a more accurate representation of light interactions within a scene. These techniques are particularly important in architectural visualization because they help to create realistic and detailed images of buildings and their surroundings.

One of the main benefits of ray tracing and global illumination is that they can accurately simulate the behavior of light in a scene. This includes how light interacts with different materials, such as glass, metal, and wood, as well as how it is affected by the environment, such as sunlight and shadows. By using these techniques, architects and designers can create images that accurately represent how a building will look in different lighting conditions, which can be essential for making design decisions.

Another important aspect of ray tracing and global illumination is their ability to create realistic reflections and refractions. This is particularly important in architectural visualization because it can help to create a sense of depth and realism in images. For example, reflections in windows and mirrors can provide a glimpse of the surrounding environment, while refractions can create interesting effects on glass surfaces.
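Two formulas explain much of what a ray tracer does at a window: the Fresnel effect, which makes glass reflect more strongly at grazing angles, and Snell's law, which bends light as it enters the glass. The Python sketch below uses Schlick's widely used approximation to the Fresnel equations and assumes a refractive index of 1.5 for glass, a typical textbook value.

```python
import math

def schlick_reflectance(cos_incident, n1=1.0, n2=1.5):
    """Schlick's approximation to Fresnel reflectance (fraction of light reflected).

    cos_incident is the cosine of the angle between the ray and the surface
    normal; n1 and n2 are the refractive indices of air and (assumed) glass.
    """
    r0 = ((n1 - n2) / (n1 + n2)) ** 2  # reflectance at normal incidence
    return r0 + (1.0 - r0) * (1.0 - cos_incident) ** 5

def refraction_angle_deg(incident_deg, n1=1.0, n2=1.5):
    """Snell's law, n1*sin(theta_i) = n2*sin(theta_t); None means total internal reflection."""
    s = n1 / n2 * math.sin(math.radians(incident_deg))
    if s > 1.0:
        return None
    return math.degrees(math.asin(s))

for angle in (0, 45, 80):
    r = schlick_reflectance(math.cos(math.radians(angle)))
    print(f"{angle:2d} degrees from the normal: {r:.1%} reflected")
print(f"30 degrees in air bends to {refraction_angle_deg(30):.1f} degrees in glass")
```

A window viewed head-on reflects only about 4% of the light, while the same window seen at a steep angle reflects much more, which is why facades look mirror-like from street level. Rasterized approximations often flatten this effect.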

In addition to these benefits, ray tracing and global illumination can make the visualization workflow more efficient. Because lighting is simulated physically, artists spend less time faking it with extra fill lights or baked lightmaps, and a GPU with hardware-accelerated ray tracing can produce a final-quality image much faster than a CPU-only renderer. This can be particularly important in architectural visualization, where designers often need to create multiple images of a building from different angles and under different lighting conditions.

Overall, the importance of ray tracing and global illumination in architectural visualization cannot be overstated. These techniques provide a more accurate representation of light interactions within a scene, which can help architects and designers to make better design decisions and create more realistic images of buildings and their surroundings.

CUDA and DirectX

Overview of CUDA and DirectX

CUDA (Compute Unified Device Architecture) and DirectX are two prominent programming interfaces that let software use the graphics processing unit (GPU). Applications running on the central processing unit (CPU) use them to send data and work to the GPU, enabling efficient graphics rendering and other computational tasks.

CUDA is a parallel computing platform and programming model developed by NVIDIA for their GPUs. It allows developers to utilize the GPU’s vast parallel processing capabilities to perform general-purpose computing tasks. CUDA is designed to leverage the GPU’s parallel computing power, which is typically used for graphics rendering, to perform other computations more efficiently than with traditional CPUs. This technology is widely used in scientific simulations, artificial intelligence, and deep learning applications.
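CUDA programs are written as kernels: a single function that thousands of GPU threads run at once, with each thread using its block and thread index to pick the data element it works on. The Python sketch below only simulates that model sequentially so it can run anywhere. The index arithmetic mirrors CUDA's built-in `blockIdx`, `blockDim`, and `threadIdx` variables, while the function names `saxpy_kernel` and `launch` are hypothetical.

```python
def saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y, out):
    """Work done by one thread: computes a*x[i] + y[i] for a single element i.

    Mirrors CUDA's  i = blockIdx.x * blockDim.x + threadIdx.x.
    """
    i = block_idx * block_dim + thread_idx
    if i < len(x):  # guard: the last block may contain spare threads
        out[i] = a * x[i] + y[i]

def launch(kernel, grid_dim, block_dim, *args):
    """Sequential stand-in for a CUDA launch kernel<<<grid_dim, block_dim>>>.

    On a real GPU these iterations would run in parallel across the CUDA cores.
    """
    for b in range(grid_dim):
        for t in range(block_dim):
            kernel(b, block_dim, t, *args)

n = 1000
x = [float(i) for i in range(n)]
y = [1.0] * n
out = [0.0] * n
block = 256
grid = (n + block - 1) // block  # ceiling division, so every element gets a thread
launch(saxpy_kernel, grid, block, 2.0, x, y, out)
print(out[:3], out[-1])
```

Because no element depends on any other, the GPU is free to run all 1,000 threads at the same time, which is the kind of workload CUDA is designed for.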

DirectX, on the other hand, is a collection of application programming interfaces (APIs) developed by Microsoft for handling multimedia tasks, particularly in the gaming industry. DirectX provides a standardized way for developers to access hardware components like GPUs, thereby simplifying the process of creating games and other multimedia applications. DirectX includes several components, such as Direct3D for 3D graphics rendering, Direct2D for 2D graphics, and DirectSound for audio processing.

Both CUDA and DirectX play critical roles in enhancing the performance of graphics cards. CUDA enables developers to tap into the GPU’s parallel processing capabilities for general-purpose computing tasks, while DirectX simplifies the process of creating multimedia applications, including games, by providing a standardized way to access hardware components. The integration of these technologies with graphics cards has led to significant improvements in graphics rendering, performance, and efficiency.

The Role of CUDA and DirectX in Graphics Card Performance

When it comes to graphics card performance, CUDA and DirectX play complementary roles. CUDA lets developers use an NVIDIA GPU for general-purpose computing in addition to its traditional graphics rendering capabilities, while DirectX is Microsoft’s collection of APIs for handling graphics and other multimedia hardware on Windows PCs.

CUDA and Its Impact on Graphics Card Performance

CUDA enables developers to create programs that can be executed on NVIDIA GPUs, allowing for the use of parallel processing to improve performance in various applications. This includes tasks such as scientific simulations, financial modeling, and even video rendering. By leveraging the power of the GPU for these tasks, CUDA can significantly speed up processing times compared to relying solely on the CPU.

DirectX and Its Impact on Graphics Card Performance

DirectX, meanwhile, provides a standardized interface between applications and the GPU driver, so developers can write hardware-accelerated graphics and audio code once and have it behave consistently on hardware from different manufacturers. For graphics cards, DirectX support determines compatibility with games and other graphics-intensive applications: a card whose driver supports the DirectX version and features an application requires can run it with full hardware acceleration.

Comparison of CUDA and DirectX in Graphics Card Performance

While CUDA and DirectX serve different purposes, they both play a critical role in the overall performance of graphics cards. CUDA enables the GPU to be utilized for general-purpose computing, providing a significant boost in processing power for certain tasks. DirectX, on the other hand, ensures compatibility and optimal performance in graphics-intensive applications, such as gaming. The combination of both technologies in modern graphics cards provides a powerful tool for both gaming and non-gaming applications.

CUDA and DirectX in RTX Graphics Cards

CUDA (Compute Unified Device Architecture) and DirectX are two key technologies that RTX graphics cards rely on for their performance: CUDA for general-purpose and rendering computation, and DirectX for displaying graphics in Windows applications and games.

CUDA

CUDA is a parallel computing platform and programming model developed by NVIDIA. It allows software developers to utilize the parallel processing capabilities of RTX graphics cards to accelerate the performance of applications that require high levels of computation.

RTX graphics cards feature a large number of CUDA cores, which perform the floating-point calculations behind shading and general-purpose computing. These cores work in parallel, applying the same instructions to many pieces of data at once, so the more CUDA cores a card has, the more work it can complete on each clock cycle. GTX cards also have CUDA cores; what RTX cards add are dedicated RT cores and Tensor cores.

DirectX

DirectX is a collection of application programming interfaces (APIs) developed by Microsoft for high-performance multimedia on Windows-based computers. It includes components such as Direct3D for rendering graphics, DirectSound for audio, and DirectInput for reading input devices.

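RTX graphics cards support the latest versions of DirectX, including DirectX 12 Ultimate, the DirectX 12 feature set that adds DirectX Raytracing (DXR), mesh shaders, and variable-rate shading. DirectX 12 itself is a lower-level API than its predecessors: it gives developers more direct control over GPU memory and work submission and lets them spread that work across multiple CPU cores, which reduces CPU overhead and can yield higher, smoother frame rates. GTX cards also support DirectX 12, but not the full DirectX 12 Ultimate feature set.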

In summary, CUDA and DirectX are two important technologies that are utilized by RTX graphics cards to enhance their performance. CUDA enables the graphics card to perform complex calculations faster, while DirectX provides a range of APIs that enable high-performance multimedia on Windows-based computers. Together, these technologies help RTX graphics cards to deliver high-quality graphics and fast frame rates in a wide range of applications.

Comparison of CUDA and DirectX in RTX and GTX Graphics Cards

CUDA and DirectX are two different technologies that graphics cards use to improve their performance and capabilities. CUDA, which stands for Compute Unified Device Architecture, is NVIDIA’s parallel computing platform and programming model, which developers use to build parallel computing applications that run on NVIDIA GPUs. DirectX, on the other hand, is Microsoft’s collection of application programming interfaces (APIs), which developers use to create and run high-performance games and multimedia applications.

In terms of RTX and GTX graphics cards, both of these technologies are used to improve the performance of the graphics card. However, there are some key differences between how they are implemented in each type of card.

- CUDA: Both RTX and GTX graphics cards have CUDA cores and run CUDA applications, such as video editing tools and scientific simulations. On RTX cards, NVIDIA’s OptiX ray tracing engine, which is built on CUDA, lets GPU renderers use the RT cores for hardware-accelerated ray tracing, giving more realistic lighting and shadows, and CUDA libraries can use the Tensor cores to speed up AI workloads. GTX cards run the same kinds of CUDA applications, but without those dedicated cores.

- DirectX: Both RTX and GTX graphics cards support DirectX 12, the current major version of the API. The key difference lies in optional features: RTX cards support the DirectX 12 Ultimate feature set, including hardware-accelerated DirectX Raytracing (DXR), the technology behind realistic ray-traced lighting and shadows in games and other graphics applications. GTX cards either lack DXR support or, on some models, run it in software on the CUDA cores at much lower performance.

Overall, both CUDA and DirectX are used on RTX and GTX graphics cards, but RTX cards can apply them to ray tracing and AI workloads through their dedicated RT and Tensor cores, while GTX graphics cards focus on providing reliable performance at a lower cost.

CUDA and DirectX in GTX Graphics Cards

Introduction to CUDA and DirectX

- CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model developed by NVIDIA.

- DirectX is a collection of application programming interfaces (APIs) developed by Microsoft for handling multimedia tasks, including video game programming.

GTX Graphics Cards and CUDA

- GTX graphics cards are equipped with NVIDIA GPUs that support CUDA technology.

- CUDA allows GTX graphics cards to leverage the parallel processing power of their GPUs to accelerate computationally intensive tasks.

- CUDA is commonly used in applications such as video editing, scientific simulations, and artificial intelligence.

GTX Graphics Cards and DirectX

- GTX graphics cards are compatible with DirectX and can utilize its APIs for enhanced graphics performance in games and other multimedia applications.

- DirectX provides a standardized interface for developers to access hardware-accelerated rendering, video decoding, and audio processing on Windows systems.

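- GTX graphics cards can take advantage of DirectX features such as tessellation and multi-threaded rendering to enhance gaming and multimedia experiences. Real-time ray tracing through DirectX Raytracing is available only on some GTX models and runs in software, so it is far slower than on RTX cards.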

Comparison of CUDA and DirectX in GTX Graphics Cards

- CUDA and DirectX are complementary technologies: CUDA covers general-purpose computing and DirectX covers graphics, and together they span the workloads GTX graphics cards are used for.

- While CUDA enables GTX graphics cards to perform general-purpose computing tasks, DirectX is primarily focused on gaming and multimedia.

- Both CUDA and DirectX contribute to the overall capabilities of GTX graphics cards, allowing them to deliver solid performance in a wide range of applications.

Both RTX and GTX graphics cards use the same underlying DirectX technology for rendering graphics, but they differ in what else they can accelerate. One of the most significant differences lies in how CUDA software can use each card alongside DirectX.

CUDA, or Compute Unified Device Architecture, is a parallel computing platform and programming model developed by NVIDIA for its GPUs. It enables developers to write parallel algorithms that can be executed on NVIDIA GPUs, making it easier to take advantage of their parallel processing capabilities. CUDA is a proprietary technology, meaning it is only available on NVIDIA GPUs.

DirectX is a collection of application programming interfaces (APIs) developed by Microsoft for handling multimedia tasks, including game and video rendering, on Microsoft Windows. It provides a standardized way for developers to access hardware capabilities, such as graphics processing units (GPUs), and lets them write hardware-agnostic code that runs on any compatible GPU, although DirectX itself is tied to Windows and Xbox. DirectX is included with Windows and is supported by AMD, Intel, and NVIDIA GPUs.

Comparison of CUDA and DirectX in RTX and GTX Graphics Cards

Both RTX and GTX graphics cards use DirectX for rendering graphics; the difference lies in what CUDA-based software can do on them. RTX graphics cards feature dedicated RT cores and Tensor cores that CUDA-based software, such as renderers built on NVIDIA’s OptiX engine, can use to accelerate specific workloads like ray tracing and AI-accelerated rendering.

GTX graphics cards, on the other hand, have no RT or Tensor cores, so they cannot hardware-accelerate ray tracing or AI-assisted rendering; ray tracing renderers that support them must run entirely on the general-purpose CUDA cores, which is much slower. However, they can still use CUDA for general-purpose parallel computing tasks, such as scientific simulations and financial modeling.

In summary, both RTX and GTX graphics cards use DirectX for rendering graphics and can run CUDA software, but RTX graphics cards add dedicated RT and Tensor cores that CUDA-based software can use to accelerate ray tracing and AI workloads.

Importance of CUDA and DirectX for Architectural Visualization

CUDA and DirectX are two essential technologies that play a crucial role in architectural visualization. They provide the necessary tools and frameworks for rendering complex 3D models and simulations. In this section, we will discuss the importance of CUDA and DirectX for architectural visualization.

CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model developed by NVIDIA. It allows software developers to utilize the power of GPUs (Graphics Processing Units) for general-purpose computing, beyond just graphics rendering. CUDA enables the execution of parallel algorithms on the GPU, which can significantly accelerate the processing of large datasets and complex computations.

In architectural visualization, CUDA is used to offload the computational workload from the CPU to the GPU, freeing up the CPU for other tasks. This results in faster rendering times and improved performance. Many renderers used for architectural visualization support CUDA or OptiX acceleration on NVIDIA GPUs, such as Blender’s Cycles engine and GPU renderers for Autodesk 3ds Max like V-Ray GPU and Arnold GPU, providing a significant boost in rendering speed and efficiency.

DirectX is a collection of application programming interfaces (APIs) developed by Microsoft for handling multimedia tasks, including game development and 3D graphics rendering. DirectX provides a standardized way for software developers to access hardware components, such as GPUs and sound cards, on Windows-based systems. It also includes features like hardware-accelerated rendering, shader support, and multi-threading, which enhance the performance of 3D graphics and simulations.

In architectural visualization, graphics APIs such as DirectX play a critical role in providing smooth, responsive viewports. Autodesk Revit, for example, uses DirectX 11 for hardware-accelerated display of its models, while SketchUp has traditionally relied on OpenGL instead. In both cases, hardware acceleration ensures that the graphics are drawn quickly and accurately. DirectX also supports multi-threaded rendering, which spreads the CPU-side work of preparing each frame across several cores, leading to faster rendering and improved performance.

In summary, CUDA and DirectX are essential technologies for architectural visualization. They provide the necessary tools and frameworks for rendering complex 3D models and simulations, enabling software developers to utilize the power of GPUs for general-purpose computing. By leveraging CUDA and DirectX, architectural visualization software applications can achieve faster rendering times, improved performance, and smoother graphics, ultimately enhancing the overall visualization experience for architects, designers, and clients alike.

Shader Clock and Memory Capacity

Overview of Shader Clock and Memory Capacity

The shader clock and memory capacity are two important architectural differences between RTX and GTX graphics cards.

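- Shader Clock:

  - The shader clock refers to the speed at which the graphics card executes shader instructions.

  - Shaders are small programs that run on the GPU’s shader cores; they compute values such as the position of each vertex and the color of each pixel.

  - For a given architecture and number of cores, a higher shader clock speed means the graphics card executes shader instructions faster.

  - Since NVIDIA’s Kepler generation, the shader cores have run at the GPU’s core clock rather than at a separate shader clock, and RTX and GTX graphics cards run at broadly similar clock speeds. RTX cards are faster mainly because they have more shader (CUDA) cores, plus dedicated RT and Tensor cores.

- Memory Capacity:

  - The memory capacity of a graphics card refers to the amount of video memory (VRAM) available on the card for storing data.

  - The more memory a graphics card has, the larger and more complex the scenes it can hold and render.

  - RTX graphics cards typically have more memory capacity than GTX graphics cards, although the ranges overlap; the GTX 1080 Ti, for example, has 11 GB, more than the 6 GB RTX 2060.

  - This is partly because RTX graphics cards target more demanding workloads, such as high-resolution gaming, virtual reality, and ray-traced rendering, which need more memory for textures, geometry, and ray tracing data.

  - RTX graphics cards do not have separate memory for ray tracing or AI processing; the RT and Tensor cores share the same VRAM, and the acceleration structures used for ray tracing consume part of it.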

The Impact of Shader Clock and Memory Capacity on Graphics Card Performance

The performance of graphics cards is influenced by various factors, including the shader clock and memory capacity. The shader clock refers to the speed at which the shader processors can execute instructions, while the memory capacity determines the amount of data that can be stored and processed by the graphics card.

The Role of Shader Clock in Graphics Card Performance

The shader clock plays a crucial role in determining the overall performance of a graphics card. It affects the speed at which the graphics card can process shader instructions, which are responsible for rendering images and animations. All else being equal, a higher shader clock speed translates to faster rendering times, resulting in smoother and more responsive graphics.

Factors Affecting Shader Performance

Several factors determine how quickly a graphics card can execute shader work, including the clock speed itself, the number of shader processors, and the memory bandwidth. The clock speed sets how many cycles each shader processor completes per second, while the number of shader processors sets how much work runs in parallel on every cycle. The memory bandwidth determines the rate at which data can move between the GPU and its own video memory (VRAM); if it is too low, the shader processors sit idle waiting for data.
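How clock speed and core count combine can be shown with the usual back-of-the-envelope formula for peak single-precision (FP32) throughput: cores × 2 operations per clock (one fused multiply-add) × clock speed. The Python sketch below plugs in NVIDIA's reference specifications for the RTX 3080 (8,704 CUDA cores, 1.71 GHz boost) and GTX 1660 Super (1,408 CUDA cores, 1.785 GHz boost). Factory-overclocked partner cards differ, and real applications reach only a fraction of these theoretical peaks.

```python
def peak_fp32_tflops(cuda_cores, boost_clock_ghz):
    """Theoretical peak FP32 throughput in TFLOPS.

    Each CUDA core can issue one fused multiply-add (2 floating-point
    operations) per clock, so peak = cores * 2 * clock.
    """
    gflops = cuda_cores * 2 * boost_clock_ghz
    return gflops / 1000.0

rtx_3080 = peak_fp32_tflops(8704, 1.71)
gtx_1660_super = peak_fp32_tflops(1408, 1.785)
print(f"RTX 3080: {rtx_3080:.1f} TFLOPS, GTX 1660 Super: {gtx_1660_super:.1f} TFLOPS")
# prints "RTX 3080: 29.8 TFLOPS, GTX 1660 Super: 5.0 TFLOPS"
```

The two boost clocks are within about 5% of each other, yet the peak throughput differs by almost a factor of six, entirely because of the difference in core count.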

The Importance of Memory Capacity in Graphics Card Performance

Memory capacity is another critical factor that affects the performance of graphics cards. It determines the amount of data that can be stored and processed by the graphics card, which is essential for rendering complex scenes and animations. A graphics card with a larger memory capacity can handle more demanding tasks and provide smoother performance, especially when rendering high-resolution images or working with large datasets.

Factors Affecting Memory Performance

Several factors affect how well a card’s memory serves the GPU, including the size of the memory buffer, the memory type, and the memory interface. The size of the memory buffer determines the amount of data that can be stored on the graphics card, while the memory type (such as GDDR5, GDDR6, or GDDR6X) and the width of the memory interface determine how quickly data moves between the GPU and its video memory. Transfers between the graphics card and system memory use the separate PCI Express link, which is much slower.
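Peak memory bandwidth follows directly from the width of the memory interface and the memory's per-pin data rate. The Python sketch below uses the reference specifications of the RTX 3080 (320-bit bus, 19 Gbps GDDR6X) and the GTX 1660 Super (192-bit bus, 14 Gbps GDDR6); the results are theoretical peaks, not measured figures.

```python
def memory_bandwidth_gb_s(bus_width_bits, data_rate_gbps):
    """Peak VRAM bandwidth in GB/s.

    bus_width_bits / 8 gives the bytes moved per transfer across the whole bus;
    data_rate_gbps is how many transfers each pin makes per second (in billions).
    """
    return bus_width_bits / 8 * data_rate_gbps

print(memory_bandwidth_gb_s(320, 19))  # RTX 3080, GDDR6X: 760.0
print(memory_bandwidth_gb_s(192, 14))  # GTX 1660 Super, GDDR6: 336.0
```

Both the wider bus and the faster memory type contribute: the RTX 3080's bus is two-thirds wider, and its GDDR6X runs about a third faster per pin.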

In summary, the shader clock and memory capacity are crucial factors that affect the performance of graphics cards. A graphics card with a higher shader clock speed and larger memory capacity can provide better performance and handle more demanding tasks, resulting in smoother and more responsive graphics.

Shader Clock and Memory Capacity in RTX Graphics Cards

One of the primary architectural differences between RTX and GTX graphics cards lies in their shader clock and memory capacity. While both types of cards are equipped with cutting-edge hardware, the RTX series boasts a few key advantages in these areas.

RTX graphics cards are designed with advanced RT cores and Tensor cores, which are specifically optimized for real-time ray tracing and AI-accelerated workloads. These cores are responsible for performing complex calculations that are essential for high-quality rendering and advanced lighting effects. As a result, RTX cards are capable of delivering smoother frame rates and more accurate reflections, refractions, and global illumination than GTX cards.

Memory Bandwidth

In addition to their powerful cores, RTX graphics cards tend to offer higher memory bandwidth than GTX cards, largely because they use faster GDDR6 and GDDR6X memory, although the comparison depends on the specific models. Higher bandwidth means the GPU can move more data to and from its video memory per second, resulting in faster performance and smoother gameplay. This increased memory bandwidth is particularly beneficial for high-resolution gaming and demanding applications that process large amounts of data.

Memory Size and Type

Another factor to consider when comparing RTX and GTX graphics cards is the size and type of memory. RTX cards typically come with 8 GB or more of GDDR6 or GDDR6X memory, with some models offering 12 GB, 16 GB, or more, which provides more room for complex textures and geometry. Many GTX cards have smaller memory sizes, such as 4 GB or 6 GB of GDDR5 or GDDR6, which can limit their performance in memory-intensive applications.
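A quick way to see why memory size matters is to estimate how many high-resolution textures fit on a card. The Python sketch below assumes uncompressed 8-bit RGBA textures with a full mipmap chain and ignores everything else that needs VRAM (geometry, frame buffers, ray tracing acceleration structures). Real renderers usually store textures in compressed block formats that take a quarter to an eighth of this space, so treat the result as an upper bound on the per-texture cost rather than a real-world budget.

```python
def texture_bytes(width, height, bytes_per_pixel=4, mipmaps=True):
    """VRAM used by one uncompressed texture, optionally with a full mip chain."""
    total = 0
    while True:
        total += width * height * bytes_per_pixel
        if not mipmaps or (width == 1 and height == 1):
            return total
        # Each mip level halves both dimensions, down to 1x1.
        width, height = max(1, width // 2), max(1, height // 2)

GIB = 1024 ** 3
per_texture = texture_bytes(4096, 4096)  # one 4K RGBA8 map with mipmaps, about 85 MiB
for vram_gib in (6, 10):
    print(vram_gib, "GiB holds", vram_gib * GIB // per_texture, "such textures")
```

A photorealistic interior can easily use dozens of 4K maps (color, roughness, and normal maps for each material), so the difference between 6 GB and 10 GB or more shows up quickly in practice.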

Furthermore, RTX cards often feature faster memory speeds, which allows them to access and process data more quickly. This results in faster frame rates and smoother performance, especially in demanding scenarios such as high-resolution gaming or advanced rendering workloads.

Overall, the shader clock and memory capacity of RTX graphics cards offer several advantages over GTX cards. Their advanced RT cores, higher memory bandwidth, larger memory sizes, and faster memory speeds make them well-suited for demanding applications and high-performance gaming.

Comparison of Shader Clock and Memory Capacity in RTX and GTX Graphics Cards

When comparing the architectural differences between RTX and GTX graphics cards, it is essential to examine the shader clock and memory capacity. The shader clock is the speed at which the GPU can execute instructions in the shader units, while the memory capacity refers to the amount of video memory available on the graphics card.

Shader Clock

The shader clock speed matters, but it is not where RTX graphics cards get most of their advantage, because RTX and GTX cards run at broadly similar clock speeds. For instance, the NVIDIA GeForce RTX 3080 has a base clock speed of 1.44 GHz (1.71 GHz boost), while the GTX 1660 Super has a base clock speed of 1.53 GHz (1.785 GHz boost). The RTX 3080 is nevertheless far faster, because it has 8,704 CUDA cores to the GTX 1660 Super’s 1,408 and can therefore execute many more instructions per second.

However, it is important to note that the shader clock speed is not the only factor that affects performance. Other factors such as the number of cores, memory bandwidth, and memory size also play a significant role in determining the overall performance of a graphics card.

Memory Capacity

The memory capacity of a graphics card is another crucial factor that affects its performance. RTX graphics cards typically have more memory capacity compared to GTX graphics cards. For example, the NVIDIA GeForce RTX 3080 has 10 GB of GDDR6X memory, while the GTX 1660 Super has 6 GB of GDDR6 memory.

Having more memory capacity allows the graphics card to handle more complex scenes and textures, resulting in better performance in memory-intensive applications such as gaming and video editing. However, it is important to note that the amount of memory is not the only factor that affects performance. Other factors such as memory bandwidth and memory type also play a significant role in determining the overall performance of a graphics card.

In summary, RTX graphics cards typically have many more shader cores and more memory capacity than GTX graphics cards, while their clock speeds are broadly similar. It is therefore important to consider the number of cores, memory bandwidth, and memory type, not clock speed alone, when comparing the performance of these graphics cards.

Shader Clock and Memory Capacity in GTX Graphics Cards

- GTX graphics cards utilize the CUDA architecture, which enables them to execute parallel computations efficiently.

- The shader clock speed in GTX graphics cards refers to the frequency at which the GPU’s shader processors can execute instructions.

- GTX and RTX graphics cards run at broadly similar shader clock speeds, and some GTX models clock higher than some RTX models, but a slightly higher clock does not make up for having far fewer cores.

- However, RTX graphics cards are equipped with Tensor cores that enable AI-accelerated rendering, which can improve performance in certain scenarios.

- The memory capacity of GTX graphics cards refers to the amount of video memory available for storing and processing graphical data.

- GTX graphics cards typically have a lower memory capacity compared to RTX graphics cards, which may result in performance limitations when handling complex graphics tasks.

- However, GTX graphics cards are designed to be more cost-effective, making them an attractive option for budget-conscious consumers.

When comparing the architectural differences between RTX and GTX graphics cards, it is essential to examine the shader clock and memory capacity. The shader clock is the speed at which the shader processors in the graphics card execute instructions, while the memory capacity refers to the amount of memory available on the graphics card.

The shader clock speed is measured in MHz (megahertz), that is, millions of clock cycles per second. The number of instructions a card can execute per second depends on the clock speed multiplied by the number of shader processors and the work each completes per cycle. RTX and GTX graphics cards have broadly similar clock speeds, but RTX cards typically have far more shader processors, so they can perform more instructions per second. This translates to better performance in applications that require intensive shader processing, such as gaming, video editing, and 3D modeling.

In contrast, GTX graphics cards typically have fewer shader processors, which can result in lower performance in shader-intensive applications. However, it is important to note that other factors, such as the memory bandwidth, can also affect performance.

The memory capacity of a graphics card refers to the amount of memory available on the card, measured in GB (gigabytes). RTX graphics cards typically have more memory capacity compared to GTX graphics cards, which means they can handle more complex models and textures. This is particularly important in applications such as 3D modeling and video editing, where large models and high-resolution textures are common.

On the other hand, GTX graphics cards may have less memory capacity, which can limit their performance in applications that require a large amount of memory. However, it is important to note that other factors, such as the memory bandwidth and the number of memory channels, can also affect memory performance.

In summary, the shader clock and memory capacity are important factors to consider when comparing the architectural differences between RTX and GTX graphics cards. RTX graphics cards typically combine similar clock speeds with many more shader processors and more memory capacity, which results in better performance in shader-intensive applications. However, other factors can also affect performance, and it is important to consider these factors when selecting a graphics card for a particular application.

Importance of Shader Clock and Memory Capacity for Architectural Visualization

Shader clock and memory capacity are crucial factors in determining the performance of graphics cards, particularly in architectural visualization. The shader clock speed, measured in MHz, refers to the frequency at which the graphics card executes shader instructions. Together with the number of shader cores, it determines the card’s ability to handle complex graphical tasks, such as rendering detailed 3D models.

Memory capacity, on the other hand, determines the amount of data that can be stored on the graphics card. In architectural visualization, this capacity is essential for handling large datasets, such as geometry, textures, materials, and lighting information. The scene generally needs to fit in video memory: when it does, the GPU can render it at full speed, but when it does not, a GPU renderer may slow down sharply, fall back to the CPU, or fail to render at all, depending on the software.

Both shader clock speed and memory capacity are important factors to consider when selecting a graphics card for architectural visualization. A higher shader clock speed and larger memory capacity can help ensure that the card can handle the demands of complex 3D models and large datasets, resulting in more realistic and detailed renders.
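Geometry takes VRAM too, on top of textures. The Python sketch below gives a rough estimate for an indexed triangle mesh under an assumed, hypothetical vertex layout: position, normal, and one set of texture coordinates stored as 32-bit floats, with 32-bit indices. The model size is also a made-up example, and a ray-traced render needs additional memory for the acceleration structure (BVH) built over the same triangles.

```python
def mesh_bytes(vertices, triangles, floats_per_vertex=8, index_bytes=4):
    """Rough VRAM estimate for an indexed triangle mesh.

    Assumes a hypothetical vertex layout of position (3) + normal (3) + UV (2)
    32-bit floats, plus three indices per triangle.
    """
    vertex_data = vertices * floats_per_vertex * 4  # 4 bytes per 32-bit float
    index_data = triangles * 3 * index_bytes
    return vertex_data + index_data

# Hypothetical detailed building model: 5 million vertices, 10 million triangles.
size = mesh_bytes(5_000_000, 10_000_000)
print(f"{size / 1024**3:.2f} GiB")  # prints "0.26 GiB"
```

Geometry alone is usually modest; it is geometry plus high-resolution textures plus the ray tracing data that pushes large architectural scenes past the capacity of smaller cards.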

Recommendations for Choosing the Right Graphics Card for Architectural Visualization

When it comes to choosing the right graphics card for architectural visualization, there are several factors to consider. The following recommendations can help guide you in making an informed decision:

- Consider the specific requirements of your project: The graphics card you choose should be able to handle the complexity and size of your models. If you work with large, highly detailed models, you may need a more powerful graphics card with a higher shader clock and more memory capacity.

- Evaluate your budget: Graphics cards can vary widely in price, so it’s important to choose one that fits within your budget while still meeting your performance needs.

- Take into account the software you use: Some applications run better on certain graphics cards than on others. If you use Autodesk Revit, for example, check Autodesk's list of certified graphics hardware to see which cards have been tested with it.

- Look for compatibility with other components: Make sure the graphics card fits your system. It needs a free PCIe x16 slot on the motherboard, enough physical space in the case, and a power supply with sufficient wattage and the required power connectors.

- Consider the warranty and customer support: A good warranty and reliable customer support can be important factors in ensuring that you can get help if you have any issues with your graphics card.
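For the power-supply part of the compatibility check, one rough rule of thumb is to add up the main components' rated power draw and then leave spare capacity on top. The sketch below encodes that heuristic. The 75 W allowance for the rest of the system and the 30% headroom are assumptions chosen for illustration. The graphics card manufacturer's recommended PSU wattage and connector requirements should take precedence over this estimate.

```python
def psu_is_sufficient(psu_watts: float, gpu_watts: float, cpu_watts: float,
                      other_watts: float = 75.0, headroom: float = 0.30) -> bool:
    """Rough check that a power supply leaves spare capacity for a new card.

    other_watts and headroom are illustrative assumptions, not vendor figures.
    """
    # Estimated combined draw of the GPU, the CPU, and everything else.
    estimated_load = gpu_watts + cpu_watts + other_watts
    # Require the PSU rating to exceed the estimate by the chosen margin.
    return psu_watts >= estimated_load * (1 + headroom)


# Hypothetical build: 220 W graphics card, 125 W CPU.
print(psu_is_sufficient(650, gpu_watts=220, cpu_watts=125))  # True
print(psu_is_sufficient(500, gpu_watts=220, cpu_watts=125))  # False
```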

By considering these factors and doing some research, you can choose the right graphics card for your architectural visualization needs.

Additional Resources for Graphics Card Architecture and Architectural Visualization

For those who want to dig deeper into graphics card architecture and architectural visualization, many resources are available. They cover both the inner workings of graphics cards and the ways that hardware is used to visualize architectural designs.

Some useful resources for exploring graphics card architecture include technical specifications and data sheets, which can be found on the websites of major graphics card manufacturers such as NVIDIA and AMD. These documents provide detailed information on the clock speeds, memory capacities, and other technical specifications of various graphics cards. Additionally, there are online forums and communities dedicated to graphics card enthusiasts, where users can discuss and share information on the latest developments in graphics card technology.
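Once a card is installed, many of the same specifications can be read directly from it. NVIDIA's driver ships with the `nvidia-smi` command-line tool, whose `--query-gpu` option reports fields such as `name`, `memory.total`, and `clocks.max.sm` (the maximum clock of the streaming multiprocessors that run shader code). The Python sketch below runs that query and parses the CSV output. The helper names are hypothetical, and the "RTX" check is only a naming heuristic, not a hardware query.

```python
import subprocess

FIELDS = "name,memory.total,clocks.max.sm"


def parse_gpu_csv(output: str) -> list[dict]:
    """Parse `nvidia-smi --format=csv,noheader,nounits` output, one GPU per line."""
    gpus = []
    for line in output.strip().splitlines():
        # Split from the right so a comma inside the card name cannot break parsing.
        name, memory_mib, max_sm_mhz = (f.strip() for f in line.rsplit(",", 2))
        gpus.append({
            "name": name,
            "memory_mib": int(memory_mib),
            "max_shader_clock_mhz": int(max_sm_mhz),
            # Naming heuristic only: RTX-branded NVIDIA cards include RT cores.
            "rtx_branded": "RTX" in name,
        })
    return gpus


def query_gpus() -> list[dict]:
    """Ask the installed NVIDIA driver for each GPU's name, memory, and max shader clock."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)
```

On a machine with the NVIDIA driver installed, `print(query_gpus())` lists one dictionary per card. If a card does not support a field, `nvidia-smi` prints a placeholder such as `[N/A]` instead of a number, which this sketch does not handle.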

For those interested in architectural visualization, there are a variety of software tools and programs available that can help create realistic and detailed 3D models of buildings and other structures. These tools often require a powerful graphics card to operate effectively, making a high-end RTX or GTX graphics card a valuable investment for architects and designers.

In addition to software tools, there are also a number of online resources available for architectural visualization, including libraries of 3D models and textures, as well as online communities where users can share and discuss their work. By leveraging these resources, architects and designers can create stunning and accurate visualizations of their designs, providing valuable insights into the aesthetic and functional aspects of a building.

Overall, these resources cover the architectural differences between RTX and GTX graphics cards, the broader field of graphics card technology, and the tools and techniques used to create architectural visualizations. Together, they can give you a deeper understanding of how graphics cards work and how to get the most out of them.

FAQs

1. What is the difference between RTX and GTX graphics cards?

RTX and GTX are both NVIDIA product lines, but they differ in their hardware and intended use. RTX cards were introduced with NVIDIA's Turing architecture in 2018. They add dedicated RT cores for real-time ray tracing, which produces more accurate lighting, reflections, and shadows in games and other graphics applications, as well as Tensor cores for AI workloads such as DLSS upscaling. GTX cards are aimed at conventional (rasterized) graphics and lack this dedicated ray-tracing hardware. Some later GTX models can run ray tracing on their ordinary shader cores through NVIDIA's drivers, but much more slowly.

2. Is real-time ray tracing important for architecture?

Real-time ray tracing can be useful for architects who work with 3D models and renderings, as it can provide more accurate lighting and shadows in their visualizations. However, it is not necessarily a requirement for all architectural projects, and the added cost of an RTX graphics card may not be justified for some users.

3. Are RTX graphics cards more powerful than GTX graphics cards?

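In general, RTX graphics cards outperform GTX graphics cards, partly because they belong to newer GPU generations. Their advantage is largest in real-time ray tracing, where their dedicated hardware counts most. However, a high-end GTX card can still match or beat an entry-level RTX card in conventional rendering. Compare specific models rather than relying on the RTX or GTX prefix, and weigh the performance gain against the added cost.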

4. How do I decide which type of graphics card is right for my needs?

To decide which type of graphics card is right for your needs, consider the specific applications and tasks you will be using it for. If you work with 3D models and renderings and require accurate lighting and shadows, an RTX graphics card may be a good choice. If you do not require real-time ray tracing and are looking for a more affordable option, a GTX graphics card may be a better fit.

5. Can I use an RTX graphics card with an older CPU or motherboard?

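It is generally possible to use an RTX graphics card with an older CPU or motherboard. RTX cards fit a standard PCIe x16 slot, and PCIe is backward compatible, so a card designed for a newer PCIe generation will still run in an older slot. However, an older CPU may hold back performance in some workloads. You may also need a more capable power supply, the right power connectors, and adequate case cooling to meet the card's requirements. Check the compatibility of all components before installing a new graphics card.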