The Graphics Processing Unit (GPU) is an essential component of modern computing devices, from smartphones to high-performance gaming computers. But who designed the GPU? No single inventor did: the GPU emerged from decades of work by engineers and companies across the computer graphics industry. In this article, we trace the evolution of graphics card architecture, from the early pioneers of computer graphics to the engineers of today, and look at the companies and technologies that have shaped the GPU as we know it.

The Evolution of Graphics Card Design

The Early Years: From 2D to 3D Graphics

In the early days of computing, the primary focus of graphics cards was to provide 2D graphics for display devices such as monitors. The first graphics cards were simple hardware accelerators that could only render basic shapes and lines. As technology advanced, the demand for more sophisticated graphics grew, and thus began the evolution of graphics card design towards 3D graphics.

One of the earliest innovations in this field was the introduction of 3D hardware accelerators. These cards were capable of rendering 3D models and scenes, which opened up new possibilities for computer graphics. However, they were still limited in their capabilities and could only handle simple 3D objects.

Another significant development in the early years of 3D graphics was the introduction of the first 3D games. These games required more advanced graphics hardware, and as a result, game developers and hardware manufacturers worked together to create new graphics cards that could handle the demands of 3D gaming.

Despite these advancements, the first 3D graphics cards were still quite expensive and not widely adopted by the general public. It wasn’t until the mid-1990s that 3D graphics became more accessible with the introduction of more affordable graphics cards and the widespread adoption of 3D gaming.

Overall, the early years of graphics card design were marked by the gradual shift from 2D to 3D graphics. This transition required collaboration between hardware manufacturers, game developers, and other industry stakeholders to bring advanced 3D graphics to the masses.

The Rise of Programmable GPUs

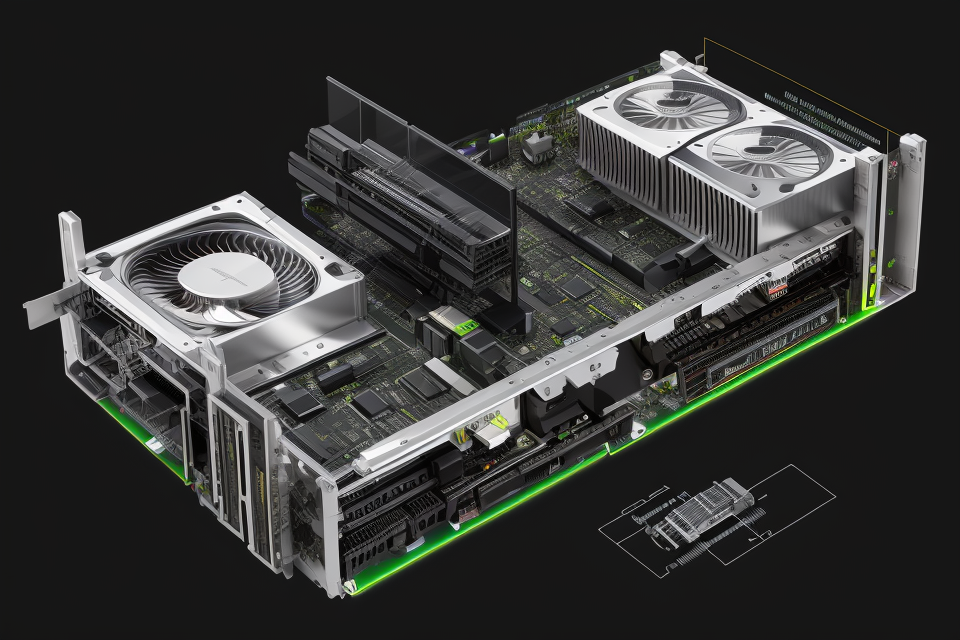

Graphics hardware has come a long way since the display controllers and 2D accelerators of the 1980s. From fixed-function chips designed to draw images on computer screens, the GPU has evolved into a highly sophisticated processor capable of handling complex computations and performing tasks that were once the exclusive domain of the central processing unit (CPU).

One of the most significant advancements in GPU design has been the rise of programmable GPUs. Early GPUs were fixed-function: they handled a specific set of operations, such as rasterizing triangles or decoding video, and could not be reprogrammed. Programmable GPUs run developer-written programs, first as shaders for graphics and later as general-purpose code, an approach known as general-purpose computing on GPUs (GPGPU).

Programmable shaders reached consumer hardware in the early 2000s with cards such as the NVIDIA GeForce 3 (2001). The NVIDIA GeForce 8 series, released in 2006, went further: it was the first GPU family to support CUDA, NVIDIA’s parallel computing platform, which allowed developers to write programs in C (and later C++ and Fortran) and run them on the GPU. This was a significant breakthrough as it enabled programmers to harness the power of the GPU for non-graphics applications, such as scientific simulations and financial modeling.

Since then, programmable GPUs have become increasingly popular, and most modern GPUs support some form of general-purpose programming. For example, NVIDIA’s Tesla series of GPUs are designed specifically for scientific computing and deep learning applications, while AMD’s Radeon Instinct series are aimed at data scientists and machine learning engineers.

The rise of programmable GPUs has had a profound impact on a wide range of industries, from finance and healthcare to automotive and entertainment. With their ability to perform complex computations at unprecedented speeds, programmable GPUs have enabled researchers to run simulations that were previously impossible, and they have enabled game developers to create more realistic and immersive worlds.

In conclusion, the rise of programmable GPUs has been a significant milestone in the evolution of graphics card design. By enabling programmers to harness the power of the GPU for a wide range of applications, programmable GPUs have revolutionized the way we think about computing and have opened up new possibilities for innovation and creativity.

The Emergence of AI-Assisted Design

Introduction to AI-Assisted Design

The advent of artificial intelligence (AI) has significantly transformed the world of graphics card design. With the emergence of AI-assisted design, graphics card architects have access to powerful tools that can optimize and streamline the design process. This section will delve into the specific ways AI is revolutionizing graphics card design, and the impact it has had on the industry.

How AI is Changing Graphics Card Design

AI-assisted design is changing the way graphics cards are designed in several key ways:

- Optimization of Performance: AI algorithms can analyze vast amounts of data to identify the most efficient designs for graphics cards. This enables architects to make more informed decisions about component selection and placement, resulting in more optimized performance.

- Simulation and Testing: AI can also be used to simulate and test various design configurations in a virtual environment. This allows architects to quickly test different design options and identify potential issues before physical prototypes are built.

- Automation of Repetitive Tasks: AI can automate repetitive tasks such as circuit routing and placement, freeing up time for architects to focus on more complex design decisions.

The Impact of AI-Assisted Design on the Industry

AI-assisted design is beginning to change how the graphics card industry works. By streamlining parts of the design process and speeding up performance optimization, it can help manufacturers bring more powerful and reliable products to market in less time. Shared models and automated tools can also ease collaboration between different teams within a company.

In conclusion, the emergence of AI-assisted design has revolutionized the world of graphics card architecture. By enabling more efficient performance optimization, simulation, and testing, as well as automating repetitive tasks, AI has enabled graphics card manufacturers to produce more powerful and reliable products in less time.

The Major Players in GPU Design

NVIDIA: A Pioneer in Graphics Card Design

NVIDIA, founded in 1993 by Jensen Huang, Chris Malachowsky, and Curtis Priem, has been a dominant force in the graphics processing unit (GPU) market. The company’s focus on cutting-edge graphics technology has led to the development of many groundbreaking products. NVIDIA’s dedication to pushing the boundaries of GPU design has made it a pioneer in the field.

NVIDIA’s commitment to innovation can be seen in its history of introducing industry-leading products. In 1999, the company released the NVIDIA GeForce 256, which it marketed as the world’s first graphics processing unit (GPU). The card integrated hardware transform and lighting (T&L) on the same chip as the rendering pipeline, moving work that had previously been done by the CPU onto the graphics card.

Throughout the years, NVIDIA has continued to introduce innovative products that have shaped the graphics card market. The GeForce 6 series, released in 2004, added support for Shader Model 3.0, and the GeForce 8 series, released in 2006, introduced NVIDIA’s first unified shader architecture, in which the same processing cores handle vertex, pixel, and compute work. Unified shaders have since become a staple of GPU design.

NVIDIA has also been an active supporter of industry standards. Its GPUs implement graphics APIs such as Microsoft’s DirectX and the Khronos Group’s OpenGL, and the company is a member of the Khronos Group. These standards help ensure compatibility and interoperability between different hardware and software products.

Alongside these standards, NVIDIA developed its own proprietary CUDA platform. CUDA allows developers to utilize NVIDIA GPUs for general-purpose computing, opening up new possibilities for applications that can leverage the power of GPUs beyond traditional graphics rendering.

NVIDIA’s influence in the GPU market has led to a thriving ecosystem of hardware and software partners. The company’s products are widely used in various industries, including gaming, professional visualization, and deep learning. The popularity of NVIDIA GPUs has made them a preferred choice for both enthusiasts and professionals seeking the best performance and reliability.

In conclusion, NVIDIA’s contributions to the world of GPU design have been instrumental in shaping the industry. The company’s commitment to innovation, its support for industry standards, and its CUDA developer platform have enabled it to remain at the forefront of GPU technology. NVIDIA’s impact on the graphics card market has been significant, and its influence continues to drive advancements in the field.

AMD: A Formidable Force in GPU Development

AMD, or Advanced Micro Devices, has been a dominant player in the world of GPU design. Founded in 1969, the company has a long history of innovation and has been responsible for some of the most significant advancements in the field of graphics processing units (GPUs).

One of the most notable products in AMD’s graphics lineage was the Radeon 8500, released in 2001 by ATI Technologies, the graphics company that AMD acquired in 2006. The card competed with NVIDIA’s GeForce 3 and helped to establish the Radeon brand as a major force in the GPU market.

Since then, AMD has continued to push the boundaries of GPU design, developing a wide range of products that cater to a variety of different markets and applications. From high-end gaming GPUs to powerful professional graphics cards, AMD has consistently delivered innovative solutions that have helped to drive the growth and development of the graphics card industry.

One of AMD’s notable data center products was the Radeon Instinct MI25, introduced in 2017, an accelerator designed specifically for artificial intelligence and machine learning applications. This product showcased AMD’s commitment to innovation and its ability to develop products that meet the evolving needs of the market.

Overall, AMD’s rich history of GPU design and its ongoing commitment to innovation make it a formidable force in the world of graphics card architecture.

The Role of Third-Party Manufacturers

Third-party manufacturers play a crucial role in the GPU design ecosystem. NVIDIA and AMD design their GPUs but do not fabricate the chips themselves; that work is contracted to semiconductor foundries, which specialize in fabrication and ensure that the final silicon meets the required specifications.

There are several key third-party manufacturers in the GPU design landscape. One of the most prominent is TSMC (Taiwan Semiconductor Manufacturing Company), which is the world’s largest dedicated independent semiconductor foundry. TSMC manufactures chips for many of the leading GPU designers, including NVIDIA and AMD.

Another significant third-party manufacturer is GlobalFoundries, a leading semiconductor foundry that offers a broad range of manufacturing technologies and services. GlobalFoundries works with various GPU designers to produce their products, providing the necessary manufacturing capabilities to bring their designs to life.

Samsung is another prominent player in the third-party manufacturing space. While Samsung is primarily known for its electronics and technology products, the company also manufactures chips for GPU designers. Samsung’s expertise in the fabrication process ensures that the chips are of the highest quality and meet the specific requirements of the GPU designers.

In addition to these major players, there are several other third-party manufacturers that contribute to the GPU design ecosystem. These companies provide the necessary manufacturing capabilities to bring the designs of the primary GPU designers to life. Without the support of these third-party manufacturers, the primary GPU designers would not be able to produce the high-quality products that are essential to the gaming and graphics industry.

The Technologies That Drive GPU Design

CUDA and OpenCL: Programming Platforms for GPUs

Introduction to CUDA and OpenCL

CUDA and OpenCL are two programming platforms designed for graphics processing units (GPUs). Each combines a C-based language for writing GPU code with an API that the host program uses to launch that code. Together, these allow developers to utilize the immense parallel processing capabilities of GPUs to accelerate computations and enhance overall system performance.

Differences Between CUDA and OpenCL

While both CUDA and OpenCL are designed for GPU programming, they have distinct differences in terms of their development environment, hardware support, and application domains.

CUDA

CUDA (Compute Unified Device Architecture) is a proprietary parallel computing platform and programming model developed by NVIDIA, a leading manufacturer of GPUs. It runs only on NVIDIA GPUs and is used for developing applications that leverage their parallel processing capabilities. CUDA offers a comprehensive development environment with tools for code development, debugging, and optimization, and it is supported across a wide range of NVIDIA GPUs, making it well suited to applications that require high-performance graphics and computational power.

OpenCL

OpenCL (Open Computing Language) is an open, royalty-free standard, maintained by the Khronos Group, for programming GPUs and other processors. It is designed to be platform-independent, meaning that it can be used on a variety of hardware platforms, including those from AMD, Intel, and NVIDIA. OpenCL provides a unified programming model for developers, enabling them to write a single program that can be executed on different hardware architectures. This flexibility makes OpenCL a popular choice for applications that require cross-platform compatibility and support.
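Both models express a computation as a kernel that is run by many lightweight threads (CUDA) or work-items (OpenCL), each identified by an index. The following pure-Python sketch emulates that indexing on the CPU; it uses no GPU and no GPU library. In CUDA a thread typically computes its position as `blockIdx.x * blockDim.x + threadIdx.x`, and in OpenCL the equivalent value (with no global offset) is returned by `get_global_id(0)`. The helper names `cuda_global_index`, `launch`, and `vector_add` are hypothetical and exist only for this illustration.

```python
# CPU emulation of 1D kernel indexing; nothing here runs on a GPU.


def cuda_global_index(block_idx, block_dim, thread_idx):
    """Index a CUDA thread computes as blockIdx.x * blockDim.x + threadIdx.x."""
    return block_idx * block_dim + thread_idx


def launch(num_blocks, block_dim, kernel, *args):
    """Call `kernel` once per emulated thread.

    A real GPU would run these invocations in parallel; the sequential
    loop order here is an artifact of the emulation.
    """
    for block_idx in range(num_blocks):
        for thread_idx in range(block_dim):
            kernel(cuda_global_index(block_idx, block_dim, thread_idx), *args)


def vector_add(i, a, b, out):
    """Kernel body: each thread handles one element and guards the tail."""
    if i < len(out):
        out[i] = a[i] + b[i]


a = [1.0, 2.0, 3.0, 4.0, 5.0]
b = [10.0, 20.0, 30.0, 40.0, 50.0]
out = [0.0] * len(a)

# Two blocks of four threads cover five elements; the last three threads idle.
launch(2, 4, vector_add, a, b, out)
print(out)  # [11.0, 22.0, 33.0, 44.0, 55.0]
```

The bounds check in the kernel body matters because the number of launched threads is usually rounded up to a multiple of the block size, so some threads fall past the end of the data.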

Comparison of CUDA and OpenCL

When comparing CUDA and OpenCL, it is essential to consider factors such as performance, development complexity, and hardware support.

Performance

In terms of performance, CUDA often delivers better optimization and execution speed on NVIDIA GPUs, largely because NVIDIA develops the hardware, the compiler, and a set of tuned libraries together. However, OpenCL’s platform independence makes it a more versatile choice for applications that require support for multiple hardware platforms.

Development Complexity

CUDA ties developers to NVIDIA’s toolchain, but its runtime API hides much of the device setup, and its documentation and libraries are extensive. OpenCL follows a standardized programming model and can be used with tools from several vendors, though its host code tends to be more verbose because the program must explicitly select a platform and device and create a context and command queue. Which of the two is easier to learn depends largely on the developer’s background and target hardware.

Hardware Support

CUDA is primarily associated with NVIDIA GPUs, while OpenCL is designed to work with a range of hardware platforms, including those from AMD and Intel. This means that OpenCL offers greater flexibility in terms of the GPUs that can be used with an application. However, CUDA’s close integration with NVIDIA GPUs often results in better performance and optimized code.

In conclusion, the choice between CUDA and OpenCL depends on the specific requirements of an application and the hardware platform being used. Developers should consider factors such as performance, development complexity, and hardware support when deciding which platform to use for their GPU-accelerated applications.

Ray Tracing: The Future of Realistic Rendering

Ray tracing is a technology that simulates the behavior of light in a virtual environment, creating highly realistic and accurate reflections, shadows, and lighting effects. It has become a crucial component in modern graphics card design, offering a more natural and realistic rendering experience for users.

One of the main advantages of ray tracing is its ability to produce accurate reflections and refractions of light. This is achieved by tracing the paths of rays as they interact with objects in the virtual environment (in practice, usually backward from the camera into the scene), taking into account factors such as material properties, surface roughness, and the position and intensity of light sources. As a result, ray tracing can produce highly realistic reflections, refractions, and other lighting effects that are difficult or impossible to achieve with traditional rendering techniques.
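The core operation behind these effects can be sketched in a few lines. The example below is a minimal illustration in plain Python, not how any particular GPU or renderer implements it: it finds where a ray hits a sphere by solving a quadratic, then computes the mirror-reflected direction with the standard formula r = d - 2(d·n)n. The scene (one sphere, a ray along the negative z axis) and all function names are assumptions made for the illustration.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(v, s):
    return tuple(x * s for x in v)


def intersect_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest hit, or None on a miss."""
    oc = sub(origin, center)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * c  # discriminant of t^2 + b*t + c = 0
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in ((-b - root) / 2.0, (-b + root) / 2.0):
        if t > 1e-9:  # ignore hits behind the ray origin
            return t
    return None


def reflect(direction, normal):
    """Mirror reflection of `direction` about a unit-length surface normal."""
    return sub(direction, scale(normal, 2.0 * dot(direction, normal)))


origin = (0.0, 0.0, 0.0)
direction = (0.0, 0.0, -1.0)
center, radius = (0.0, 0.0, -5.0), 1.0

t = intersect_sphere(origin, direction, center, radius)
hit = tuple(o + t * d for o, d in zip(origin, direction))
normal = scale(sub(hit, center), 1.0 / radius)
print(t, hit, reflect(direction, normal))
```

A renderer repeats this test for every pixel and every bounce, which is why dedicated ray tracing hardware concentrates on accelerating intersection queries.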

Another key benefit of ray tracing is its ability to produce highly accurate shadows. By simulating the interaction of light with objects in the virtual environment, ray tracing can produce soft, realistic shadows that accurately reflect the position and intensity of light sources. This can help to create a more immersive and believable virtual environment, as shadows play a crucial role in how we perceive and interact with our surroundings.
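A shadow test is one of the simplest uses of this idea: from a surface point, cast a ray toward the light and check whether anything blocks it first. The sketch below assumes a scene made only of spheres and a single point light, and the names `blocks_ray` and `in_shadow` are hypothetical. A single ray per light gives hard-edged shadows; the soft shadows described above come from averaging many such rays aimed at different points on an area light.

```python
import math


def blocks_ray(origin, direction, max_dist, center, radius):
    """True if a unit-length ray hits the sphere closer than max_dist."""
    oc = [o - c for o, c in zip(origin, center)]
    b = 2.0 * sum(x * d for x, d in zip(oc, direction))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0.0:
        return False
    t = (-b - math.sqrt(disc)) / 2.0  # nearest intersection only
    # The small lower bound avoids re-hitting the surface the ray starts on.
    return 1e-6 < t < max_dist


def in_shadow(point, light_pos, spheres):
    """Cast one shadow ray from `point` to a point light at `light_pos`."""
    to_light = [l - p for l, p in zip(light_pos, point)]
    dist = math.sqrt(sum(x * x for x in to_light))
    direction = [x / dist for x in to_light]
    return any(blocks_ray(point, direction, dist, c, r) for c, r in spheres)


point = (0.0, 0.0, 0.0)
light = (0.0, 10.0, 0.0)

print(in_shadow(point, light, [((0.0, 5.0, 0.0), 1.0)]))   # True: sphere is in the way
print(in_shadow(point, light, [((5.0, 5.0, 0.0), 1.0)]))   # False: sphere is off to the side
print(in_shadow(point, light, [((0.0, 15.0, 0.0), 1.0)]))  # False: sphere is behind the light
```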

In addition to its benefits for rendering and shading, ray tracing can also improve effects such as anti-aliasing and motion blur. By casting several rays per pixel, spread across the pixel’s area and across the time the virtual shutter is open, and averaging the results, a ray tracer can produce smoother edges and more natural-looking motion blur, reducing the “stroboscopic” effect that can occur with traditional rendering techniques.

Overall, ray tracing is a powerful technology that is driving the future of realistic rendering in graphics card design. By simulating the behavior of light in a virtual environment, it offers a more natural and accurate rendering experience for users, with benefits for shading, reflections, refractions, shadows, and motion blur. As graphics card technology continues to evolve, it is likely that ray tracing will play an increasingly important role in enabling even more realistic and immersive virtual environments.

Machine Learning Acceleration: GPUs for AI

The integration of machine learning acceleration into GPUs has significantly expanded their utility beyond graphics rendering. GPUs are now instrumental in the training and execution of artificial intelligence (AI) models. This transformation has been made possible by the following factors:

Parallel Processing Capabilities

GPUs are designed with a large number of processing cores that can operate in parallel. This architecture allows them to handle a multitude of tasks simultaneously, making them an ideal choice for AI applications that require intensive computations.
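One way to see why many cores help is a parallel reduction, a common pattern in GPU programming. The following plain-Python sketch sums a list in pairwise steps: within a step, every addition is independent of the others, so a GPU could assign each one to its own thread and finish in roughly log2(n) steps instead of n. The function name `tree_reduce_sum` is hypothetical, and the code runs sequentially on the CPU purely to show the structure.

```python
def tree_reduce_sum(values):
    """Sum `values` in pairwise steps, as a parallel reduction would.

    Returns (total, number_of_steps). Each list element produced in a step
    stands in for the work of one GPU thread.
    """
    data = list(values)
    steps = 0
    while len(data) > 1:
        half = (len(data) + 1) // 2
        # Thread i adds element i and element i + half (if it exists).
        data = [
            data[i] + (data[i + half] if i + half < len(data) else 0)
            for i in range(half)
        ]
        steps += 1
    return (data[0] if data else 0), steps


print(tree_reduce_sum(range(1, 9)))      # (36, 3): 8 values summed in 3 steps
print(tree_reduce_sum([1, 2, 3, 4, 5]))  # (15, 3)
```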

Specialized AI Accelerators

GPUs now come equipped with specialized AI accelerators, such as tensor cores, that are designed to speed up matrix multiplications and other mathematical operations crucial to AI algorithms. These accelerators enable GPUs to deliver high-performance results for AI tasks, making them an essential component in the field of AI research and development.
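The operation these units accelerate is a fused matrix multiply-accumulate, D = A × B + C, applied to small tiles of a larger matrix. The sketch below writes that operation out in plain Python for clarity. It says nothing about the tile sizes or number formats of any specific GPU, and the function name `matmul_accumulate` is an assumption for the illustration.

```python
def matmul_accumulate(a, b, c):
    """Return D = A x B + C for matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [
        [
            c[i][j] + sum(a[i][k] * b[k][j] for k in range(inner))
            for j in range(cols)
        ]
        for i in range(rows)
    ]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
c = [[1, 1], [1, 1]]

print(matmul_accumulate(a, b, c))  # [[20, 23], [44, 51]]
```

Neural network layers reduce largely to this pattern, which is why hardware that performs it on whole tiles at once speeds up both training and inference.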

Open Source Software Support

Open-source deep learning frameworks such as TensorFlow, PyTorch, and Caffe provide developers with high-level APIs to train and deploy AI models on GPUs. GPU manufacturers support these frameworks with their own libraries and software stacks, such as NVIDIA’s cuDNN and AMD’s ROCm, which simplifies the process and makes GPU computing accessible to a wider audience.

Hardware and Software Co-Design

The close collaboration between hardware and software engineers has led to the development of GPUs that are specifically optimized for AI workloads. This co-design approach ensures that the hardware and software components work seamlessly together, delivering optimal performance and efficiency for AI applications.

Memory Subsystem Optimizations

GPUs pair their processing cores with high-bandwidth on-board memory, such as GDDR or HBM, so that the cores can be kept supplied with data. Transfers between the CPU and GPU are comparatively slow, so AI software tries to keep data resident on the GPU, which helps models train and run without being hindered by memory bottlenecks or data transfer delays.
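Whether a transfer becomes a bottleneck is largely a matter of arithmetic: the time is roughly the number of bytes divided by the sustained bandwidth. The short sketch below makes that estimate explicit. The bandwidth and size figures are illustrative assumptions chosen to give round numbers, not the specifications of any product, and the function name `transfer_seconds` is hypothetical.

```python
def transfer_seconds(num_bytes, bytes_per_second):
    """Idealized transfer time: size divided by sustained bandwidth."""
    if bytes_per_second <= 0:
        raise ValueError("bandwidth must be positive")
    return num_bytes / bytes_per_second


# Illustrative figures only (assumptions, not product specifications).
BATCH_BYTES = 8e9          # amount of data to move
HOST_LINK_BPS = 16e9       # assumed CPU-to-GPU link bandwidth
DEVICE_MEMORY_BPS = 800e9  # assumed on-board GPU memory bandwidth

over_link = transfer_seconds(BATCH_BYTES, HOST_LINK_BPS)
in_device = transfer_seconds(BATCH_BYTES, DEVICE_MEMORY_BPS)
print(f"over host link: {over_link:.3f} s, within GPU memory: {in_device:.3f} s")
```

Under these assumed figures the same data takes fifty times longer to cross the host link than to stream from on-board memory, which is the reason to move data to the GPU once and reuse it there.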

Power Efficiency

For highly parallel workloads, GPUs deliver more computation per watt than general-purpose CPUs, which is crucial for AI applications that require extensive computational resources. Although a high-end GPU can draw hundreds of watts, finishing a training run sooner can reduce the total energy consumed, making GPUs an attractive option for organizations seeking to limit their carbon footprint while still benefiting from advanced AI capabilities.

By leveraging these technologies, GPUs have emerged as a powerful tool for AI research and development. Their ability to handle complex computations, combined with their specialized AI accelerators and software support, has made them an indispensable component in the world of AI.

The Impact of GPU Design on Modern Computing

Gaming: The Most Visible Beneficiary of GPU Innovation

The gaming industry has been one of the most significant beneficiaries of GPU innovation. With the advent of advanced graphics cards, game developers have been able to create more visually stunning and immersive gaming experiences. The following are some of the ways in which GPU innovation has impacted the gaming industry:

- Improved graphics: With the advent of advanced GPUs, game developers have been able to create more visually stunning games with higher resolutions, more detailed textures, and more advanced lighting effects. This has helped to create a more immersive gaming experience for players, allowing them to feel like they are truly a part of the game world.

- Increased frame rates: One of the most important benefits of GPU innovation for gamers is the ability to run games at higher frame rates. This means that games are smoother and more responsive, making them more enjoyable to play.

- Advanced physics and AI: GPUs have also made it possible for game developers to incorporate more advanced physics and AI into their games. This has helped to create more realistic and dynamic game worlds, where everything from the behavior of individual characters to the movement of entire environments is driven by complex algorithms.

- Virtual reality: GPU innovation has also played a key role in the development of virtual reality (VR) technology. By providing the processing power needed to render complex VR environments in real-time, GPUs have made it possible for players to experience truly immersive VR games and other applications.

Overall, the impact of GPU innovation on the gaming industry has been enormous. From improved graphics and increased frame rates to advanced physics and AI and virtual reality, GPUs have made it possible for game developers to create more immersive and engaging gaming experiences than ever before.

The Role of GPUs in Scientific Research and Simulation

GPUs have revolutionized the field of scientific research and simulation by providing an efficient and cost-effective solution for high-performance computing. Here are some ways in which GPUs have impacted scientific research and simulation:

Accelerating Scientific Simulations

One of the most significant applications of GPUs in scientific research is in simulating complex physical phenomena. Scientists use computational models to simulate the behavior of materials, fluids, and other physical systems. These simulations require large amounts of computation, which can be provided by GPUs.

GPUs are particularly well-suited for these simulations because they can perform many calculations simultaneously. This is known as parallel processing, and it allows GPUs to perform complex calculations much faster than traditional CPUs. As a result, scientists can run simulations that were previously too complex or time-consuming to run on a CPU.
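A simple heat diffusion model shows why such simulations parallelize well. In the sketch below, one time step of an explicit finite-difference scheme computes each cell's new temperature from the previous values of that cell and its two neighbors only, so every cell could be updated by a separate GPU thread. The function name `diffusion_step`, the grid, and the coefficient `alpha` are assumptions for the illustration; this particular scheme is stable only for `alpha` up to 0.5.

```python
def diffusion_step(temps, alpha=0.25):
    """One explicit finite-difference step of 1D heat diffusion.

    Interior cells read only the previous state, so their updates are
    independent of one another. The two end cells are held fixed.
    """
    new = list(temps)
    for i in range(1, len(temps) - 1):  # each i could be one GPU thread
        new[i] = temps[i] + alpha * (temps[i - 1] - 2.0 * temps[i] + temps[i + 1])
    return new


rod = [0.0, 0.0, 100.0, 0.0, 0.0]  # a hot spot in the middle of a cold rod
print(diffusion_step(rod))  # [0.0, 25.0, 50.0, 25.0, 0.0]
```

Real simulations apply the same kind of update to millions of cells in two or three dimensions for many time steps, which is where the GPU's parallelism pays off.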

Advancing Machine Learning and Artificial Intelligence

GPUs have also played a significant role in advancing machine learning and artificial intelligence. Machine learning algorithms require large amounts of data to be processed quickly, and GPUs are ideally suited for this task. GPUs can perform multiple parallel calculations at once, making them ideal for training neural networks, which are the basis for many machine learning algorithms.

Accelerating Climate Modeling

Climate modeling is another area where GPUs have had a significant impact. Climate models require massive amounts of computation to simulate the complex interactions between the atmosphere, oceans, and land. GPUs can accelerate these simulations by performing multiple calculations simultaneously, allowing scientists to run more complex models and make more accurate predictions about future climate conditions.

In summary, GPUs have revolutionized scientific research and simulation by providing an efficient and cost-effective solution for high-performance computing. By accelerating scientific simulations, advancing machine learning and artificial intelligence, and accelerating climate modeling, GPUs have enabled scientists to tackle some of the most complex problems facing society today.

The Future of GPUs: More Power, Less Energy Consumption

The future of GPUs is bright, with manufacturers continuously striving to develop more powerful and energy-efficient designs. The following are some of the advancements and innovations that are shaping the future of GPUs:

Advancements in Material Science

One of the key areas of research is the development of new materials with better thermal conductivity and electrical properties. These materials can help improve the efficiency of the GPU and reduce its power consumption.

3D Stacking Technology

3D stacking technology allows for the integration of multiple layers of transistors and other components, resulting in a more compact and efficient design. This technology is expected to play a significant role in the development of future GPUs, as it can help reduce power consumption and increase performance.

Quantum Computing

Quantum computing is an emerging field that has the potential to revolutionize computing. Quantum computers can solve certain problems much faster than classical computers, but they are a fundamentally different kind of machine, and how, or whether, the technology will influence GPU design remains speculative.

Machine Learning and AI

Machine learning and AI are becoming increasingly important in the field of computer graphics, and this trend is expected to continue in the future. As AI algorithms become more sophisticated, they will be able to handle more complex tasks, resulting in more realistic and immersive graphics.

Neural Network Acceleration

Neural networks are a type of machine learning algorithm that is used in a wide range of applications, from image recognition to natural language processing. Modern GPUs already include dedicated hardware for neural network processing, and future designs are expected to devote even more of the chip to it, making these applications even more efficient and powerful.

Overall, the future of GPUs is exciting, with new advancements and innovations being developed all the time. As these technologies continue to evolve, we can expect to see more powerful and energy-efficient GPUs that will drive the development of new and exciting applications in the world of computer graphics.

FAQs

1. Who designed the first GPU?

The NVIDIA GeForce 256, released in 1999, was the first product marketed as a GPU. It was designed by engineers at NVIDIA, the company co-founded and led by Jensen Huang (also written Jen-Hsun Huang). It was a revolutionary product that greatly improved the performance of 3D graphics in computer games and other applications.

2. Who invented the concept of the GPU?

The concept of the GPU has no single inventor. Specialized graphics hardware developed gradually from the 1970s and 1980s onward in arcade machines, graphics workstations, and PC video cards, and the idea of a dedicated processor for the mathematical calculations behind 3D graphics matured through the 1990s. NVIDIA popularized the term “GPU” in 1999 with the GeForce 256, and the concept has since been implemented by NVIDIA and other companies in the form of the modern GPU.

3. Who is responsible for the development of modern GPUs?

Modern GPUs have been developed by a number of companies, including NVIDIA, AMD, and Intel. These companies have been responsible for advancing the technology and increasing the performance of GPUs over the years. They have also worked to improve the energy efficiency of GPUs, making them more environmentally friendly.

4. Who uses GPUs?

GPUs are used by a wide range of industries and applications, including gaming, entertainment, healthcare, and scientific research. They are particularly useful for tasks that require large amounts of data processing, such as deep learning and scientific simulations. GPUs are also used in data centers and cloud computing environments to provide high-performance computing resources to a wide range of users.

5. Who is the leader in the GPU market?

As of 2021, NVIDIA is the leader in the discrete GPU market, with a market share of around 80%. The company has a strong reputation for producing high-quality graphics cards and has consistently released innovative products that have set the standard for the industry. AMD is its main competitor in discrete graphics, while Intel ships the largest number of GPUs overall because of the integrated graphics built into its processors.