Graphics cards are the heart of any gaming or graphics-intensive computer system. A card’s architecture is the blueprint that defines how it is designed and how its components work together, and it largely determines the card’s performance and feature set. In this article, we will explore the architectures used by graphics cards, their features, and how architecture affects performance and the overall gaming experience. So, if you’re a gamer or a tech enthusiast, read on to discover the world of graphics card architecture.

Graphics cards are built around a GPU (Graphics Processing Unit), a processor designed for graphics and other highly parallel computational tasks. Two related processor types are often mentioned alongside it: an APU (Accelerated Processing Unit) combines CPU and GPU capabilities on a single chip for more efficient processing, and an NPU (Neural Processing Unit) is designed for AI and machine learning tasks. Which of these is the right choice depends on the specific requirements of the application or task being performed.

Graphics Card Architecture Overview

Evolution of Graphics Card Architecture

From 2D to 3D Acceleration

The evolution of graphics card architecture can be traced back to the early days of computing when graphics were primarily 2D in nature. In the 1980s, the first graphics cards were introduced that could accelerate 2D graphics and improve the display of text and simple graphics on the screen. These early graphics cards were primarily used for CAD and engineering applications.

From Integrated to Dedicated Graphics

In the 1990s, graphics cards gained dedicated processors designed to accelerate 3D graphics, and NVIDIA popularized the term graphics processing unit (GPU) for them in 1999. These chips were far more powerful than the integrated graphics of the time, which were built into motherboard chipsets rather than CPUs, and they could render complex 3D scenes in real time. As a result, they became an essential component for gaming and other 3D applications.

From AGP to PCIe

The advancement of graphics card architecture was also marked by the transition from the Accelerated Graphics Port (AGP) to the Peripheral Component Interconnect Express (PCIe) interface. AGP was introduced in the late 1990s as a high-speed interface specifically designed for graphics cards. PCIe, which arrived in the mid-2000s, replaced it with a serial, lane-based link that offers higher bandwidth, and each new PCIe generation has raised that bandwidth further.
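To put the bandwidth difference in numbers, here is a minimal sketch that computes theoretical peak figures. It assumes AGP's 32-bit bus with a 66.66 MHz base clock, and PCIe's per-lane transfer rates with 8b/10b encoding (generation 1) and 128b/130b encoding (generation 3). The function names are made up for this illustration, and real-world throughput is lower than these peaks.

```python
# Theoretical peak bandwidth of graphics interfaces (illustrative only).

def agp_bandwidth_mb_s(multiplier: int) -> float:
    """AGP: 32-bit bus, 66.66 MHz base clock, multiplier of 1, 2, 4 or 8."""
    bytes_per_transfer = 4      # 32 bits
    base_clock_mhz = 66.66
    return bytes_per_transfer * base_clock_mhz * multiplier


def pcie_bandwidth_mb_s(gigatransfers_per_s: float,
                        encoding_efficiency: float,
                        lanes: int) -> float:
    """PCIe bandwidth per direction: one bit per transfer per lane,
    reduced by the line-encoding overhead."""
    bits_per_s = gigatransfers_per_s * 1e9 * encoding_efficiency * lanes
    return bits_per_s / 8 / 1e6


agp_8x = agp_bandwidth_mb_s(8)                        # roughly 2133 MB/s
pcie1_x16 = pcie_bandwidth_mb_s(2.5, 8 / 10, 16)      # roughly 4000 MB/s
pcie3_x16 = pcie_bandwidth_mb_s(8.0, 128 / 130, 16)   # roughly 15754 MB/s

print(f"AGP 8x:       {agp_8x:8.0f} MB/s")
print(f"PCIe 1.0 x16: {pcie1_x16:8.0f} MB/s per direction")
print(f"PCIe 3.0 x16: {pcie3_x16:8.0f} MB/s per direction")
```

Even the first PCIe generation roughly doubled the peak bandwidth of AGP 8x, and it did so in each direction at once.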

Today, graphics cards continue to evolve with new architectures and technologies being introduced regularly. For example, NVIDIA’s CUDA platform, which lets its GPUs run general-purpose code, and AMD’s Radeon Instinct MI25 accelerator, built on the Vega architecture, show how modern GPU designs target AI, deep learning, and other compute-intensive workloads.

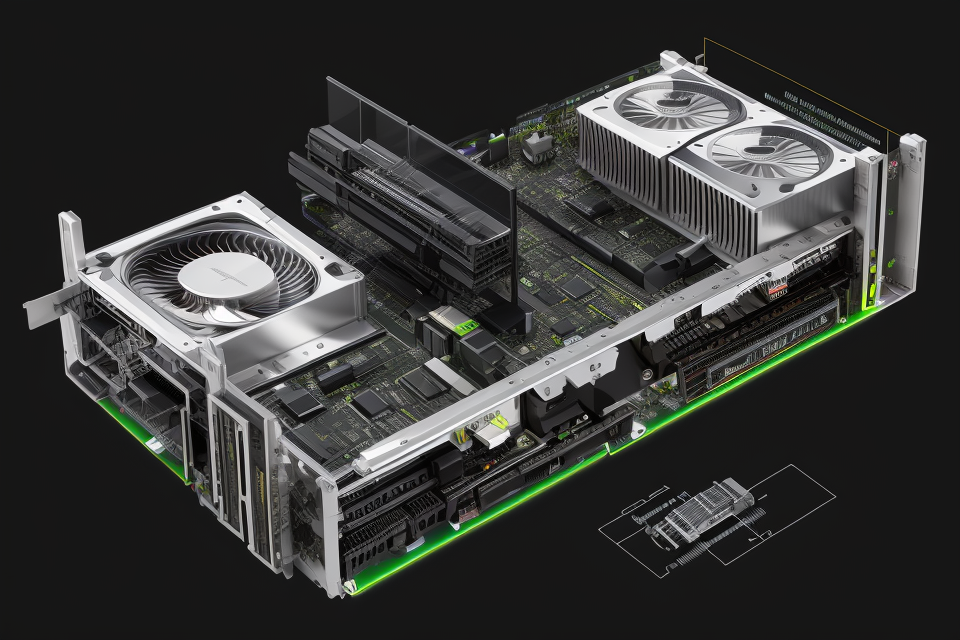

Components of Graphics Card Architecture

The graphics card architecture is the foundation of a graphics card’s functionality. It consists of several key components that work together to deliver high-quality graphics and video output. Here are some of the essential components of graphics card architecture:

Graphics Processing Unit (GPU)

The GPU is the primary component of a graphics card. It is responsible for rendering images and animations on the screen, and it is designed to handle the complex mathematical calculations that rendering requires. The performance of a graphics card depends largely on the performance of its GPU, together with the speed and amount of its memory.

Video Random Access Memory (VRAM)

VRAM is a type of memory that is used to store graphical data while it is being rendered on the screen. It is essential for handling large and complex graphics projects. The amount of VRAM on a graphics card determines how much graphical data it can store at once. The more VRAM a graphics card has, the more complex graphics it can handle.

Digital Visual Interface (DVI)

DVI is a display interface used to connect a graphics card to a monitor or a projector. It carries digital video (some connector variants also carry an analog signal) but no audio. Because the signal is digital, image quality does not degrade gradually, but cable length is limited: beyond a few meters, long or poor-quality cables can cause visible errors or signal dropouts.

High-Definition Multimedia Interface (HDMI)

HDMI is a digital interface that is used to transmit both audio and video signals from a computer to a display device. It is an all-in-one interface that eliminates the need for multiple cables. Like DVI, it delivers a lossless digital picture over short runs, but long cables can cause dropouts unless active or higher-grade cables are used.

In summary, the components covered here are the GPU, VRAM, DVI, and HDMI. These components work together to deliver high-quality graphics and video output. The performance of a graphics card depends largely on its GPU and on the amount and speed of its VRAM. DVI and HDMI carry the finished image from the card to a display device.

Graphics Card Architecture for Gaming

Graphics Processing Unit (GPU)

The Graphics Processing Unit (GPU) is a specialized microprocessor designed to accelerate the creation and display of images in a wide range of applications, including gaming. The GPU is responsible for rendering images, manipulating pixels, and performing complex mathematical calculations in real-time.

Functions of a GPU

- Rendering: The GPU is responsible for rendering images and animations in real-time. It takes the raw data from the CPU and converts it into a visual representation that can be displayed on the screen.

- Texture Mapping: Texture mapping is the process of applying a 2D image to a 3D object. The GPU performs this function by applying the appropriate texture to each polygon of a 3D model, creating a more realistic and visually appealing image.

- Lighting and Shadows: The GPU is responsible for simulating lighting and shadows in a scene. This includes calculating the interaction between light sources and objects, as well as creating realistic shadows and reflections.

- Transformation and Translation: The GPU performs the transformation and translation of objects in a scene. This includes scaling, rotating, and moving objects in 3D space.

- Anti-Aliasing: Anti-aliasing is a technique used to smooth out jagged edges in graphics. The GPU performs this function by sampling each pixel at several points, or by filtering the finished image, and blending the results, creating a smoother and more visually appealing image.
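The transformation step in the list above can be illustrated with a small sketch. It assumes the common convention of 4x4 matrices applied to points in homogeneous coordinates. The helper names are invented for this example, and a real GPU runs the same arithmetic in hardware for many vertices in parallel.

```python
import math


def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def transform(m, point):
    """Apply a 4x4 matrix to a 3D point (homogeneous coordinate w = 1)."""
    v = (point[0], point[1], point[2], 1.0)
    out = [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]
    return tuple(out[:3])


def scale(s):
    return [[s, 0, 0, 0], [0, s, 0, 0], [0, 0, s, 0], [0, 0, 0, 1]]


def rotate_z(angle_rad):
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0, 0], [s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]


def translate(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


# Matrices apply right to left: scale first, then rotate, then translate.
model = mat_mul(translate(5, 0, 0), mat_mul(rotate_z(math.pi / 2), scale(2)))

# The point (1, 0, 0) is scaled to (2, 0, 0), rotated 90 degrees around
# the z axis to (0, 2, 0), then moved to approximately (5, 2, 0).
moved = transform(model, (1.0, 0.0, 0.0))
print(moved)
```

Combining the three operations into one matrix means every vertex needs only a single matrix multiplication, which is one reason this representation suits parallel hardware.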

Importance of a GPU for gaming

The GPU is an essential component for gaming, as it is responsible for rendering high-quality graphics and animations in real-time. A powerful GPU can handle complex graphics and mathematical calculations, resulting in smoother frame rates, higher resolutions, and more realistic graphics.

Types of GPUs

There are several types of GPUs available, including:

- Integrated GPUs: Integrated GPUs are built into the CPU and share memory with the CPU. They are typically less powerful than dedicated GPUs but are sufficient for basic tasks such as web browsing and office applications.

- Dedicated GPUs: Dedicated GPUs are separate from the CPU and have their own memory. They are more powerful than integrated GPUs and are designed for tasks such as gaming, video editing, and other graphics-intensive applications.

- Mobile GPUs: Mobile GPUs are designed for use in laptops and other portable devices. They are typically less powerful than desktop GPUs but are designed to be more power-efficient.

- Workstation GPUs: Workstation GPUs are designed for use in professional applications such as 3D modeling, engineering, and scientific simulations. They are often based on the same chips as gaming GPUs but typically add more memory, drivers certified for professional software, and a focus on reliability and precision.

Video Random Access Memory (VRAM)

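Functions of VRAM

- Storing the geometry of 3D models and scenes

- Storing textures for fast access during rendering

- Temporarily storing frame buffers and other intermediate data during rendering

Importance of VRAM for gaming

- Enough VRAM keeps textures and models on the card, avoiding slow transfers from system memory that cause stutter

- More VRAM can hold more complex 3D models and scenes

- More VRAM allows for more detailed and higher resolution textures

Types of VRAM

- Dynamic Random Access Memory (DRAM) is the basis of all modern VRAM; Static Random Access Memory (SRAM) is reserved for small on-chip caches

- Graphics Double Data Rate (GDDR) SDRAM, such as GDDR6, a variant of DDR SDRAM tuned for bandwidth

- High Bandwidth Memory (HBM), stacked DRAM placed next to the GPU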
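What separates one kind of graphics memory from another in practice is mostly bandwidth. As a rough sketch, peak bandwidth is the bus width multiplied by the per-pin data rate, divided by eight to convert bits to bytes. The two configurations below are hypothetical examples, not the specifications of any particular card.

```python
def memory_bandwidth_gb_s(bus_width_bits: int,
                          data_rate_gbit_s_per_pin: float) -> float:
    """Theoretical peak memory bandwidth in GB/s."""
    return bus_width_bits * data_rate_gbit_s_per_pin / 8


# Hypothetical configurations, chosen only to illustrate the formula.
mid_range = memory_bandwidth_gb_s(256, 14.0)   # 448.0 GB/s
high_end = memory_bandwidth_gb_s(384, 21.0)    # 1008.0 GB/s

print(f"256-bit bus at 14 Gbit/s per pin: {mid_range:.0f} GB/s")
print(f"384-bit bus at 21 Gbit/s per pin: {high_end:.0f} GB/s")
```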

DVI and HDMI

Differences between DVI and HDMI

DVI (Digital Visual Interface) and HDMI (High-Definition Multimedia Interface) are two display interfaces used for transmitting video signals from a graphics card to a display device. Both deliver a digital picture, but there are some differences between the two interfaces.

One of the main differences between DVI and HDMI is the type of signal they transmit. HDMI is purely digital, while some DVI connector variants (DVI-I and DVI-A) can also carry an analog video signal. Additionally, DVI is typically used with a separate audio cable, while HDMI can transmit both audio and video signals over a single cable.

Signal degradation is not a real difference between them. Both use similar digital signaling, so at the same resolution they produce the same picture, and both have similar practical limits on cable length. With either interface, a cable that is too long or of poor quality shows up as sparkles or dropouts rather than a gradually softer image.

Importance of DVI and HDMI for gaming

For gamers, the choice between DVI and HDMI often comes down to the ports available on the monitor and the specific needs of their gaming setup. Both interfaces can deliver a high-quality digital picture, but the required resolution and refresh rate may dictate the use of one interface over the other.

In some cases, gamers may use DVI because an older monitor offers nothing else; at the same resolution and refresh rate, the picture is identical to HDMI. In other cases, gamers may prefer HDMI because it can transmit both audio and video signals over a single cable, making it more convenient to set up, and because newer HDMI versions support higher resolutions and refresh rates.

How DVI and HDMI work with graphics cards

When it comes to graphics cards, both DVI and HDMI are commonly used to connect the graphics card to a display device. Graphics cards typically have one or more DVI or HDMI ports, which can be used to connect the graphics card to a monitor or TV.

To use DVI or HDMI with a graphics card, the first step is to connect the graphics card to the display device using the appropriate cable. Once the cable is connected, the graphics card will output the video signal (and, over HDMI, audio) to the display device, which will then display the output on the screen.

Overall, neither interface produces a better picture than the other at the same settings. The deciding factors are which ports the display has, whether audio should travel over the same cable, and whether the interface supports the resolution and refresh rate the setup requires.

Graphics Card Architecture for Professionals

The Graphics Processing Unit (GPU) is a specialized processor designed to handle the complex mathematical calculations required for rendering images and animations. In the context of graphics cards, the GPU is responsible for processing the data that is sent to the display, transforming it into the visual output that we see on the screen.

For professionals, the GPU is a critical component of the graphics card, as it determines the performance and capabilities of the card. The following are some of the key functions of a GPU for professionals:

- Rasterization: This is the process of converting geometric primitives, usually triangles, into the pixels they cover on the screen. The GPU performs this operation in dedicated hardware, because it must be repeated for every triangle in every frame.

- Shading: This refers to the process of computing the final color of each pixel from lighting, material properties, and textures. The GPU is responsible for performing this operation quickly and accurately, as it has a significant impact on the final visual quality of the image.

- Ray Tracing: This is a technique used to simulate the behavior of light in a scene. The GPU is responsible for performing ray tracing calculations, which can be complex and require a lot of processing power.

- Compute: This refers to the ability of the GPU to perform general-purpose computing tasks, such as scientific simulations or financial modeling. The GPU is designed to perform these tasks quickly and efficiently, making it a valuable tool for professionals who need to perform complex calculations.
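To make the rasterization step more concrete, here is a simplified sketch that decides which pixels a triangle covers by testing each pixel center against the triangle's three edges. It is an illustration under stated assumptions rather than how any particular GPU is implemented: real hardware adds tie-breaking fill rules, clipping, and heavy parallelism, and the function names are invented for the example.

```python
def edge(a, b, p):
    """Signed area test: positive when p lies to the left of edge a -> b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize_triangle(v0, v1, v2, width, height):
    """Return the set of (x, y) pixels whose centers lie inside the triangle."""
    covered = set()
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel center
            w0 = edge(v1, v2, p)
            w1 = edge(v2, v0, p)
            w2 = edge(v0, v1, p)
            # Inside when all three tests agree in sign (either winding order).
            if (w0 >= 0 and w1 >= 0 and w2 >= 0) or \
               (w0 <= 0 and w1 <= 0 and w2 <= 0):
                covered.add((x, y))
    return covered


pixels = rasterize_triangle((0, 0), (4, 0), (0, 4), width=4, height=4)

# Print the coverage mask row by row ('#' = covered pixel).
for y in range(4):
    print("".join("#" if (x, y) in pixels else "." for x in range(4)))
```

Each pixel is tested independently of the others, which is what allows a GPU to evaluate many of them at the same time.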

In addition to these functions, the GPU is also responsible for managing the memory and data flow within the graphics card. This includes tasks such as managing the data sent between the GPU and the CPU, as well as managing the memory used by the GPU itself.

Overall, the GPU is a critical component of the graphics card, and its performance and capabilities have a significant impact on the overall performance of the card. As such, it is important for professionals to understand the role of the GPU in graphics cards, and to choose a card that is well-suited to their needs and requirements.

Functions of VRAM for professionals

Video Random Access Memory (VRAM) is a type of memory used by graphics cards to store and manage the graphical data required for rendering images and videos. For professionals, VRAM serves several essential functions, including:

- Texture Storage: VRAM is used to store the textures and shaders applied to 3D models, which helps improve the overall visual quality of the rendered images.

- Frame Buffer: VRAM acts as a frame buffer, temporarily storing the complete image that is being rendered before it is displayed on the screen. This allows the graphics card to manipulate the image data and apply effects or post-processing filters.

- Geometry Processing: VRAM is used to store the intermediate geometry data generated during the rendering process, such as vertices, edges, and faces. This enables the graphics card to perform complex geometry processing operations efficiently.
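A quick calculation shows how much memory these uses consume. The sketch below assumes uncompressed data at 4 bytes per pixel or texel (8 bits each for red, green, blue, and alpha). Real applications often use compressed textures and several buffers per frame, so treat the results as rough estimates. The function names are illustrative.

```python
MIB = 1024 * 1024


def framebuffer_bytes(width, height, bytes_per_pixel=4):
    """Size of a single uncompressed frame buffer."""
    return width * height * bytes_per_pixel


def texture_bytes(width, height, bytes_per_texel=4, mipmaps=True):
    """Size of an uncompressed texture. A full mipmap chain (each level
    half the size of the previous one) adds roughly one third."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if not mipmaps or (w == 1 and h == 1):
            break
        w, h = max(1, w // 2), max(1, h // 2)
    return total


fb_1080p = framebuffer_bytes(1920, 1080)            # 8,294,400 bytes
fb_4k = framebuffer_bytes(3840, 2160)               # 33,177,600 bytes
tex_plain = texture_bytes(4096, 4096, mipmaps=False)
tex_mipped = texture_bytes(4096, 4096)

print(f"1080p frame buffer:        {fb_1080p / MIB:6.2f} MiB")
print(f"4K frame buffer:           {fb_4k / MIB:6.2f} MiB")
print(f"4096x4096 texture:         {tex_plain / MIB:6.2f} MiB")
print(f"4096x4096 texture + mips:  {tex_mipped / MIB:6.2f} MiB")
```

A single frame buffer is small compared with modern VRAM sizes. It is the textures, which can number in the hundreds per scene, that usually dominate memory use.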

Importance of VRAM for professionals

For professionals who work with graphics-intensive applications, VRAM plays a crucial role in ensuring smooth and efficient performance. As the complexity of 3D models and scenes increases, the demand for VRAM also increases. Insufficient VRAM can lead to performance issues, such as slow frame rates, artifacts, or even crashes.

Professionals who work with high-resolution images or videos, such as video editors, graphic designers, or 3D artists, require a significant amount of VRAM to handle the large amounts of data. The more VRAM a graphics card has, the more data it can store and process simultaneously, leading to better performance and higher image quality.

Types of VRAM for professionals

Two types of memory are relevant here: Dynamic Random Access Memory (DRAM), which is what VRAM is built from, and Static Random Access Memory (SRAM), which is used inside the GPU itself.

- Dynamic Random Access Memory (DRAM): DRAM stores each bit as a charge in a tiny capacitor that must be refreshed periodically, which is what “dynamic” refers to. It is dense and inexpensive per gigabyte, so it is used for VRAM, typically in the form of GDDR or HBM, but it has a slower access time than SRAM.

- Static Random Access Memory (SRAM): SRAM holds each bit in a small transistor circuit that keeps its state without refreshing for as long as power is applied. It has a faster access time than DRAM, but it is far more expensive and takes more chip area per bit, so it is used for the GPU’s on-chip caches and registers rather than for VRAM.

In summary, VRAM is a critical component of graphics cards for professionals, as it plays a crucial role in managing the graphical data required for rendering images and videos. The functions of VRAM include texture storage, frame buffering, and geometry processing. The importance of VRAM lies in its ability to handle the increasing complexity of 3D models and scenes, as well as high-resolution images and videos. VRAM itself is DRAM, while the faster but costlier SRAM serves as cache inside the GPU.

Differences between DVI and HDMI for professionals

DVI (Digital Visual Interface) and HDMI (High-Definition Multimedia Interface) are two popular digital video interfaces used by professionals in the field of graphics. While both interfaces transmit digital video signals, they differ in several aspects.

- Compatibility: DVI is primarily used for digital display devices such as monitors and projectors, while HDMI is designed for a wider range of devices, including TVs, audio systems, and gaming consoles.

- Resolution: Dual-link DVI is commonly specified for resolutions up to 2560×1600 at 60 Hz, while recent HDMI versions support 4K at high refresh rates and resolutions up to 8K.

- Audio: DVI does not support audio, while HDMI can transmit both audio and video signals.
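Whether an interface can carry a given display mode comes down to data rate. As a rough sketch, the uncompressed rate is width × height × refresh rate × bits per pixel. The comparison below assumes the commonly quoted dual-link DVI figure of two links at a 165 MHz pixel clock with 24 bits per pixel. It ignores blanking intervals, so real requirements are somewhat higher than the numbers printed here.

```python
def video_data_rate_gbit_s(width, height, refresh_hz, bits_per_pixel=24):
    """Uncompressed pixel data rate in Gbit/s, ignoring blanking intervals."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


# Assumption: dual-link DVI payload = 2 links x 165 MHz x 24 bits per pixel.
DUAL_LINK_DVI_GBIT_S = 2 * 165e6 * 24 / 1e9   # about 7.92 Gbit/s

modes = {
    "1920x1080 @ 60 Hz": (1920, 1080, 60),
    "2560x1600 @ 60 Hz": (2560, 1600, 60),
    "3840x2160 @ 60 Hz": (3840, 2160, 60),
}

for name, (w, h, hz) in modes.items():
    rate = video_data_rate_gbit_s(w, h, hz)
    verdict = "fits within" if rate <= DUAL_LINK_DVI_GBIT_S else "exceeds"
    print(f"{name}: {rate:5.2f} Gbit/s, {verdict} dual-link DVI")
```

Under these assumptions, 4K at 60 Hz needs roughly 12 Gbit/s, well beyond dual-link DVI, which is why higher resolutions and refresh rates call for newer HDMI versions.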

Importance of DVI and HDMI for professionals

For professionals who work with digital visual content, DVI and HDMI are common interfaces for transmitting high-quality video signals. Both send uncompressed digital video, so picture quality is the same at a given resolution. DVI remains useful for connecting older monitors, while HDMI is preferred for its versatility, its support for higher resolutions, and its ability to transmit both video and audio signals.

How DVI and HDMI work with graphics cards for professionals

DVI and HDMI interfaces work by transmitting digital video signals from the graphics card to the display device. The graphics card reads each finished frame from its frame buffer, encodes it as a DVI or HDMI signal, and sends it through the cable. The display device then decodes the signal and shows the pixels on the screen.

For professionals who require high-quality video signals, it is important to use graphics cards that support DVI or HDMI interfaces. Many graphics cards offer multiple DVI or HDMI ports, allowing professionals to connect multiple display devices to a single graphics card. This can be particularly useful for professionals who work with multiple monitors or need to display high-resolution content on a large screen.

Graphics Card Architecture for Machine Learning

The Graphics Processing Unit (GPU) is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device. A GPU is used in a wide range of applications, including machine learning.

Functions of a GPU for machine learning

In machine learning, a GPU performs the following functions:

- Parallel processing: GPUs are designed to perform many calculations simultaneously, which makes them well-suited for the parallel processing required for machine learning algorithms.

- Matrix operations: Machine learning algorithms often involve matrix operations, and GPUs are optimized for these types of calculations.

- Convolution operations: Convolutional neural networks (CNNs) are a type of machine learning algorithm that is commonly used in image recognition and other computer vision tasks. GPUs are optimized for the convolution operations required by CNNs.
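The convolution operation mentioned above can be written out in a few lines. This is a plain-Python sketch of a "valid" 2D convolution as used in CNNs (strictly speaking a cross-correlation, since the kernel is not flipped). It is meant to show the arithmetic only; the function name and sample data are made up, and GPU libraries implement the same idea with heavily optimized parallel kernels.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for y in range(ih - kh + 1):
        row = []
        for x in range(iw - kw + 1):
            acc = 0
            for ky in range(kh):
                for kx in range(kw):
                    acc += image[y + ky][x + kx] * kernel[ky][kx]
            row.append(acc)
        out.append(row)
    return out


image = [
    [1, 2, 3, 0],
    [4, 5, 6, 0],
    [7, 8, 9, 0],
]
kernel = [
    [1, 0],
    [0, -1],
]

result = conv2d(image, kernel)
print(result)  # [[-4, -4, 3], [-4, -4, 6]]
```

Every output value depends only on a small patch of the input and not on any other output, so all of them can be computed at once. That independence is what makes the operation such a good fit for a GPU's many parallel cores.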

Importance of a GPU for machine learning

GPUs are important for machine learning because they can significantly speed up the training process for machine learning algorithms. This is particularly important for deep learning algorithms, which can have millions of parameters that need to be trained.

Without a GPU, training a deep learning model could take days or even weeks. With a GPU, the training process can be accelerated by a factor of 10 or more, making it possible to train a deep learning model in a matter of hours or days.

Types of GPUs for machine learning

There are several types of GPUs that are commonly used for machine learning, including:

- Consumer-grade GPUs: These are the types of GPUs that are commonly used in desktop computers and laptops. They are designed for gaming and other graphics-intensive applications, but they can also be used for machine learning.

- Professional-grade GPUs: These are specialized GPUs that are designed for use in data centers and other high-performance computing environments. They are optimized for machine learning workloads and can offer even higher performance than consumer-grade GPUs.

- AI accelerators: These are specialized processors that are designed specifically for machine learning. They are optimized for the types of calculations required by machine learning algorithms and, for those workloads, can offer higher performance or efficiency than general-purpose GPUs.

Functions of VRAM for machine learning

- Data Storage: VRAM acts as a temporary storage space for the large amounts of data required for machine learning tasks. It allows for quick access to data during training and inference processes, reducing the time spent on reading data from the main memory.

- Model Storage: VRAM also stores the trained machine learning models, allowing for quick access and computation. This reduces the time required for loading and processing models, which is crucial for real-time machine learning applications.

- Calculations: VRAM is used to store intermediate calculations and results during the machine learning process. This allows for quick access to these calculations, speeding up the overall process.

Importance of VRAM for machine learning

- Performance: VRAM plays a crucial role in the performance of machine learning tasks. The more VRAM a graphics card has, the larger the models and batches it can hold on the card, which avoids slow transfers to and from main memory and improves overall performance.

- Complexity: As machine learning models become more complex, they require more memory to store data and intermediate calculations. VRAM allows for the efficient management of this increased complexity, ensuring that machine learning tasks can be performed effectively.

Types of VRAM for machine learning

- GDDR6: GDDR6 is a type of VRAM commonly used in graphics cards for machine learning. It offers high bandwidth and low power consumption, making it suitable for machine learning tasks that require large amounts of data to be processed quickly.

- HBM: HBM (High Bandwidth Memory) is another type of VRAM used in graphics cards for machine learning. It offers even higher bandwidth than GDDR6, making it suitable for more complex and demanding machine learning tasks. HBM is typically found in high-end graphics cards designed for professional use.
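To judge whether a model fits in a card's VRAM, a back-of-the-envelope estimate is often enough. The sketch below assumes 4 bytes per parameter (32-bit floats). For training, it uses a rough rule of thumb of four copies per parameter: weights, gradients, and two optimizer states, as with Adam-style optimizers. It deliberately ignores activations and framework overhead, which can add a lot, so the results are lower bounds. The function names are illustrative.

```python
GIB = 1024 ** 3


def inference_memory_gib(num_parameters, bytes_per_parameter=4):
    """Memory needed just to hold the model weights."""
    return num_parameters * bytes_per_parameter / GIB


def training_memory_gib(num_parameters, bytes_per_parameter=4, copies=4):
    """Rough lower bound for training: weights + gradients + two
    optimizer states (assumed), excluding activations."""
    return num_parameters * bytes_per_parameter * copies / GIB


params = 1_000_000_000  # a hypothetical one-billion-parameter model

weights_only = inference_memory_gib(params)   # about 3.73 GiB
training = training_memory_gib(params)        # about 14.90 GiB

print(f"Weights only:      {weights_only:.2f} GiB")
print(f"Training (approx): {training:.2f} GiB")
```

Storing parameters in 16-bit formats halves the weight figure, which is one reason reduced-precision formats are popular for machine learning.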

DVI (Digital Visual Interface) and HDMI (High-Definition Multimedia Interface) are two types of display interfaces commonly used with graphics cards for machine learning. Both interfaces allow the graphics card to transmit data to a display device, such as a monitor or a projector.

Differences between DVI and HDMI for machine learning

While both DVI and HDMI can be used with graphics cards for machine learning, there are some key differences between the two interfaces. DVI is a digital interface that carries only video signals, while HDMI is a digital interface that carries both video and audio signals. This means that HDMI is more versatile than DVI, as it can be used to transmit both visual and audio data to a display device.

Another key difference between DVI and HDMI is the maximum resolution that they can support. Dual-link DVI is commonly specified for up to 2560×1600 at 60 Hz, while recent HDMI versions support 4K at high refresh rates and resolutions up to 8K. This means that HDMI is capable of transmitting higher-resolution video than DVI.

Importance of DVI and HDMI for machine learning

DVI and HDMI matter for machine learning mainly on workstations, where they let the graphics card drive a display for visualizing models and evaluating their output. They are not required for the computation itself: many data-center GPUs have no display outputs at all, and their results are viewed remotely.

How DVI and HDMI work with graphics cards for machine learning

DVI and HDMI work with graphics cards for machine learning by transmitting video data from the graphics card to a display device. The graphics card sends the video data over the DVI or HDMI interface, which is then received by the display device. The display device then renders the video data on its screen, allowing the user to visualize the output of the machine learning model.

In order to use DVI or HDMI with a graphics card for machine learning, the graphics card must have a DVI or HDMI output. Many modern graphics cards have one or more DVI or HDMI outputs, which can be used to connect the graphics card to a display device. Some graphics cards also have multiple DVI or HDMI outputs, which can be used to connect the graphics card to multiple display devices.

FAQs

1. What is the purpose of a graphics card’s architecture?

A graphics card’s architecture refers to the design and layout of its components, such as the graphics processing unit (GPU), memory, and input/output (I/O) interfaces. The purpose of a graphics card’s architecture is to provide the processing power necessary to render images and videos, as well as to support advanced features like ray tracing and artificial intelligence.

2. What are the main components of a graphics card’s architecture?

A graphics card’s architecture typically includes a GPU, memory, and I/O interfaces. The GPU is responsible for executing instructions and performing calculations, while the memory is used to store data that is being processed. The I/O interfaces allow the graphics card to communicate with other components in the system, such as the motherboard and display.

3. What are some common graphics card architectures?

Graphics card architectures are usually named per GPU generation, for example NVIDIA’s Turing, Ampere, and Ada Lovelace or AMD’s RDNA family; GeForce and Radeon are the product brands built on them. These architectures are designed to provide high levels of performance and support for advanced features like ray tracing and virtual reality. Other GPU families include Intel’s integrated HD Graphics and Qualcomm’s Adreno for mobile devices.

4. How does the architecture of a graphics card affect its performance?

The architecture of a graphics card can have a significant impact on its performance. A graphics card with a more powerful GPU and more, faster memory will generally be able to handle more demanding tasks and provide better performance. Additionally, certain features like ray tracing and virtual reality require specific hardware components and may only be supported by certain graphics card architectures.

5. Can I upgrade the architecture of my graphics card?

In most cases, it is not possible to upgrade the architecture of a graphics card. This is because the architecture is determined by the card’s GPU and board design, which are not user-serviceable. The memory and other components are soldered to the board, so changing them requires specialized rework skills and is not supported by manufacturers. In practice, moving to a newer architecture means replacing the card.